1000 Layer Networks for Self-Supervised RL: Scaling Depth Can Enable New Goal-Reaching Capabilities

Motivation

Reinforcement learning (RL) networks have usually been built with very few layers, typically 2-5, as in the reference implementations of Stable-baselines3 (Stable-baselines3: Reliable reinforcement learning implementations) and CleanRL (Cleanrl: High-quality single-file implementations of deep reinforcement learning algorithms). At the same time, most of the successes in Computer Vision and Large Language Models were achieved by scaling models to hundreds of layers, for example Llama 3 (The Llama 3 herd of models) and Stable Diffusion 3 (Scaling rectified flow transformers for high-resolution image synthesis). Moreover, it was shown in both Natural Language Processing (Beyond the imitation game: Quantifying and extrapolating the capabilities of language models) and Computer Vision (Learning transferable visual models from natural language supervision) that certain abilities emerge only after a model exceeds a certain scale. This leaves open the question of what performance gains could be achieved by similarly scaling the depth of Reinforcement Learning networks.

Going Deeper

Reinforcement learning setup

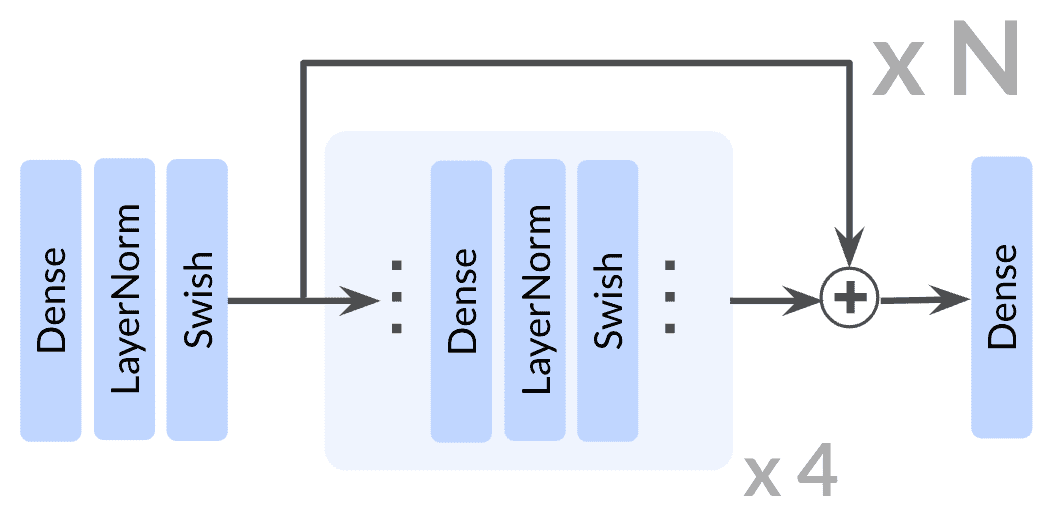

The authors propose using reinforcement learning with a self-supervised paradigm (contrastive RL). Self-supervision is a key component of almost all recently developed large models, and here it allows systems to explore environments and learn policies without prior demonstrations of “proper” task execution and without an explicitly specified reward function. Contrastive Reinforcement Learning employs an actor-critic method in which the critic returns a state-action pair embedding and a goal embedding. Its loss objective is InfoNCE (Improved Deep Metric Learning With Multi-Class N-Pair Loss Objective), which classifies whether a state-action pair belongs to the same trajectory that leads to a given goal state.

The neural networks in this setup use a ResNet architecture (Deep residual learning for image recognition), with a residual connection after every 4 units of Dense Layer, Layer Normalization, and Swish Activation. The main difference is that the networks considered in this paper are up to 100 times deeper than the networks typically used for similar problems in the recent past. Residual connections (Deep residual learning for image recognition), Layer Normalization, and Swish activation (Searching for activation functions) are the techniques that stabilize the training of such deep models.

Experiments were conducted in online Goal-Conditioned RL environments with a sparse reward setting (r=1 only in goal proximity). Performance was measured as the number of time steps (out of 1000) that the agent spent near the goal, referred to below as “time at goal”.

Goal-Conditioned Reinforcement Learning. Source: https://arxiv.org/pdf/2201.08299
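The setup described above can be sketched in a few lines of NumPy. This is only an illustrative forward pass under stated assumptions, not the authors' implementation: the function names (`init_encoder`, `encode`, `info_nce`), the layer sizes, the random initialization, and the use of an inner product as the similarity between embeddings are all choices made for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

def swish(x):
    # Swish activation: x * sigmoid(x)
    return x / (1.0 + np.exp(-x))

def layer_norm(x, eps=1e-6):
    # Normalize each row to zero mean and unit variance (no learned scale/shift here)
    mean = x.mean(axis=-1, keepdims=True)
    var = x.var(axis=-1, keepdims=True)
    return (x - mean) / np.sqrt(var + eps)

def init_dense(in_dim, out_dim):
    w = rng.normal(0.0, 1.0 / np.sqrt(in_dim), size=(in_dim, out_dim))
    return w, np.zeros(out_dim)

def init_encoder(in_dim, width, num_blocks, out_dim, units_per_block=4):
    # Depth of the residual trunk is num_blocks * units_per_block Dense layers
    return {
        "inp": init_dense(in_dim, width),
        "blocks": [[init_dense(width, width) for _ in range(units_per_block)]
                   for _ in range(num_blocks)],
        "out": init_dense(width, out_dim),
    }

def encode(params, x):
    w, b = params["inp"]
    h = x @ w + b
    for block in params["blocks"]:
        skip = h
        for w, b in block:
            # One unit: Dense -> Layer Normalization -> Swish
            h = swish(layer_norm(h @ w + b))
        h = h + skip  # residual connection after every 4 units
    w, b = params["out"]
    return h @ w + b

def info_nce(sa_emb, goal_emb):
    # logits[i, j] scores state-action pair i against goal j (inner product is an assumption).
    # Row i's positive is goal i (same trajectory); the other goals in the batch are negatives.
    logits = sa_emb @ goal_emb.T
    logits = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    return -np.mean(np.diag(log_probs))

# Hypothetical sizes, chosen only for the demonstration
batch, obs_dim, act_dim, goal_dim = 8, 5, 2, 3
sa_encoder = init_encoder(obs_dim + act_dim, width=32, num_blocks=2, out_dim=16)
goal_encoder = init_encoder(goal_dim, width=32, num_blocks=2, out_dim=16)

state_actions = rng.normal(size=(batch, obs_dim + act_dim))
goals = rng.normal(size=(batch, goal_dim))
loss = info_nce(encode(sa_encoder, state_actions), encode(goal_encoder, goals))
print(float(loss))
```

Making the network deeper in this sketch only means raising `num_blocks`; the width and the loss stay the same, which is the kind of change the paper studies.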

Data considerations

Reinforcement learning traditionally relies on sparse feedback because rewards are delayed: goal-oriented RL provides a single bit of reward for each trajectory, making the feedback-to-parameters ratio very small. In other words, the reward signal is supplied only upon achieving a rare high-level target, such as solving a whole puzzle, without intermediate feedback. One of the key features of the Contrastive RL approach highlighted in this paper is Hindsight Experience Replay, which relabels trajectories with the goal the agent actually achieved, especially when the intended goal was not reached. This turns unsuccessful trajectories into valuable samples for “learning from mistakes”, significantly reducing the sparsity of the learning data.
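The relabeling idea can be illustrated with a short, self-contained sketch. It assumes a trajectory stored as a list of `(state, action)` tuples and picks the relabeled goal uniformly from later time steps; both the data layout and the uniform sampling are assumptions for this example, and the helper name `relabel_with_future_goals` is hypothetical rather than taken from the paper's code.

```python
import random

def relabel_with_future_goals(trajectory, goal_from_state, rng):
    """Pair every (state, action) with a goal that was actually reached later on."""
    samples = []
    for t in range(len(trajectory) - 1):
        state, action = trajectory[t]
        # Pick a later time step of the same trajectory (bounds are inclusive)
        future_t = rng.randint(t + 1, len(trajectory) - 1)
        future_state, _ = trajectory[future_t]
        samples.append((state, action, goal_from_state(future_state)))
    return samples

# A toy trajectory that never reached its intended goal at x = 10
trajectory = [((x, 0), "right") for x in range(5)]
samples = relabel_with_future_goals(trajectory, lambda s: s, random.Random(0))

for state, action, goal in samples:
    print(state, action, "-> relabeled goal", goal)
```

Every training sample produced this way is a positive example of "this state-action pair leads to this goal", even though the original episode would have counted as a failure.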

Results

The results obtained by the authors show that scaling the depth of reinforcement learning networks brings significant performance gains along with noticeable qualitative improvements in the learned policies. This contrasts with other works in the domain, which focus primarily on increasing network width.

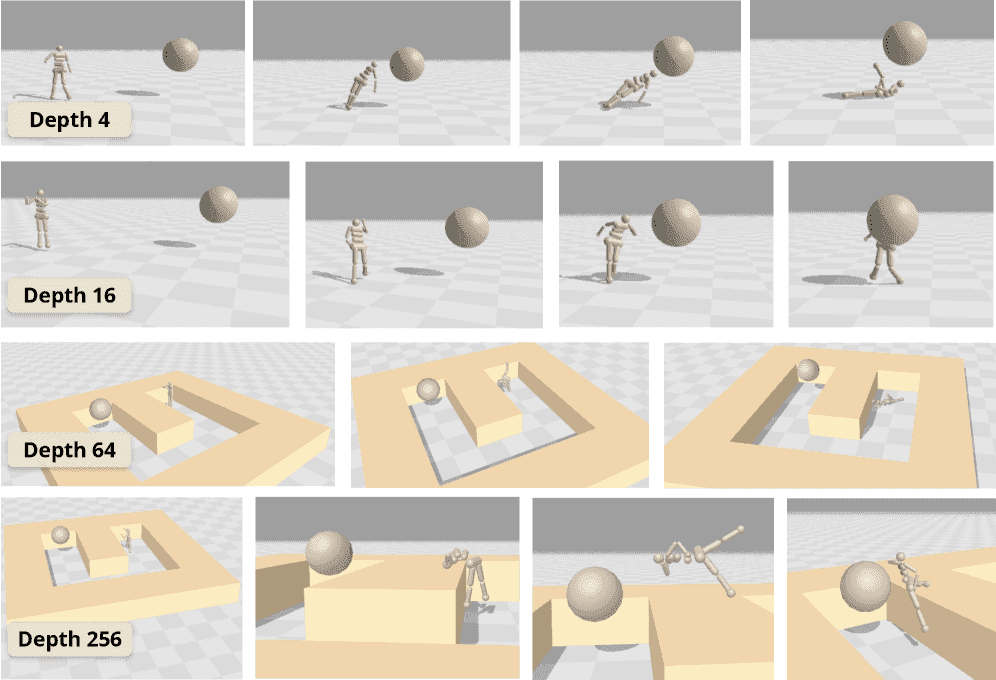

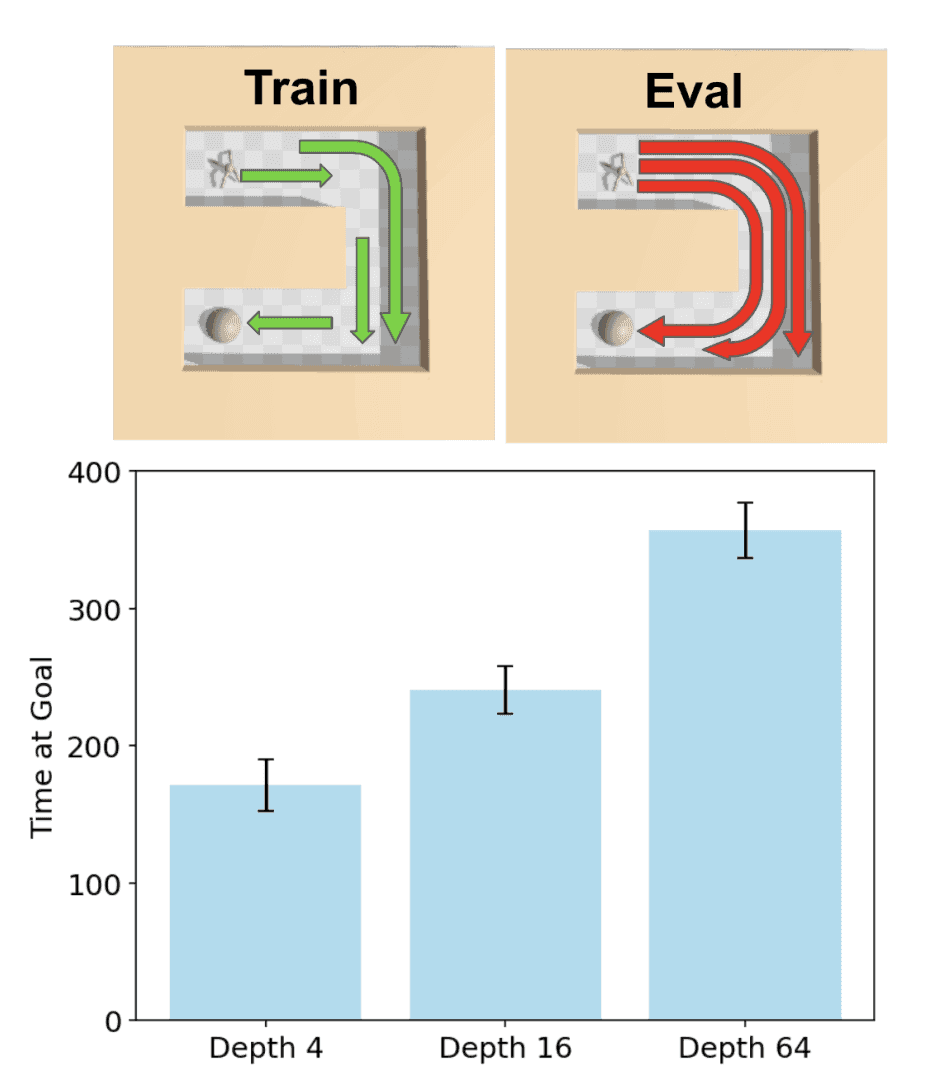

Emerging New Policies

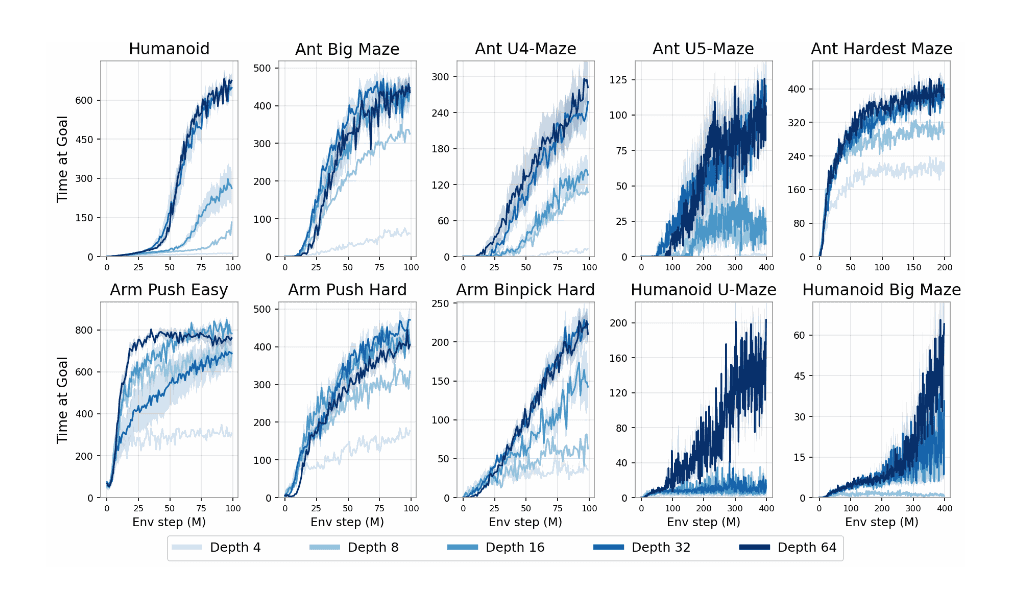

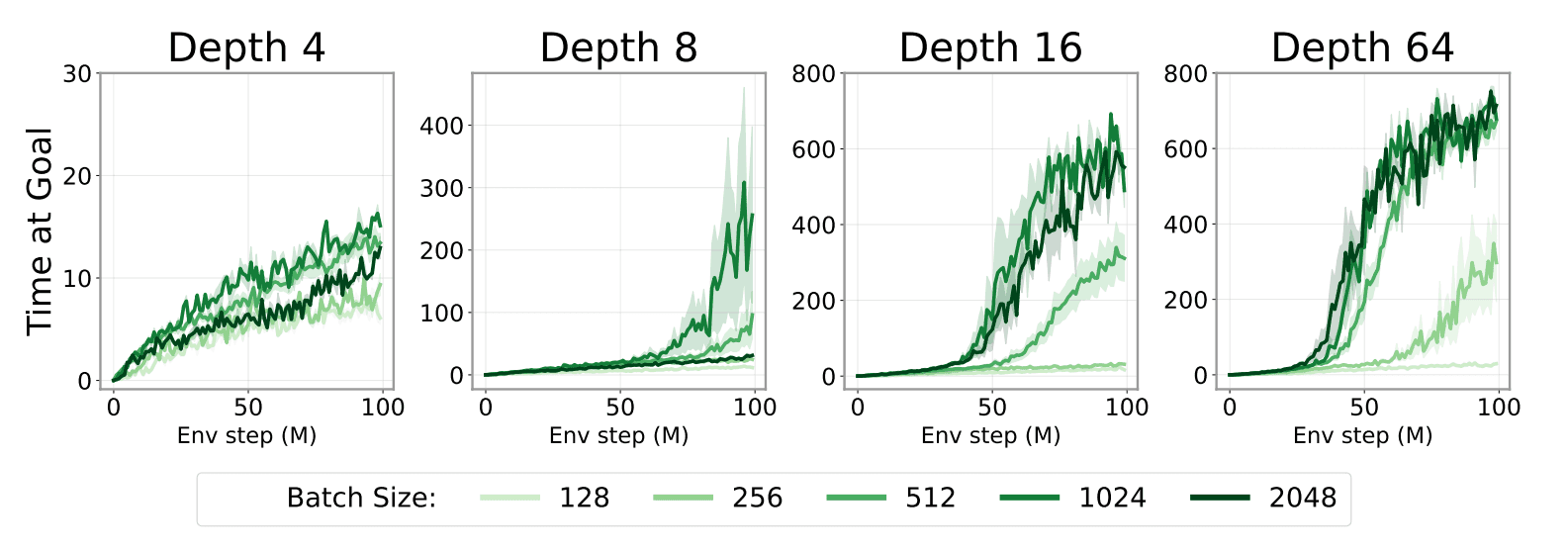

One of the discoveries is that new policies emerge abruptly rather than gradually: significant progress appears only after a critical depth threshold is reached. As shown in the accompanying figures, reaching successive depth levels unlocked new behaviours and capabilities of the policy model.

Improved Performance

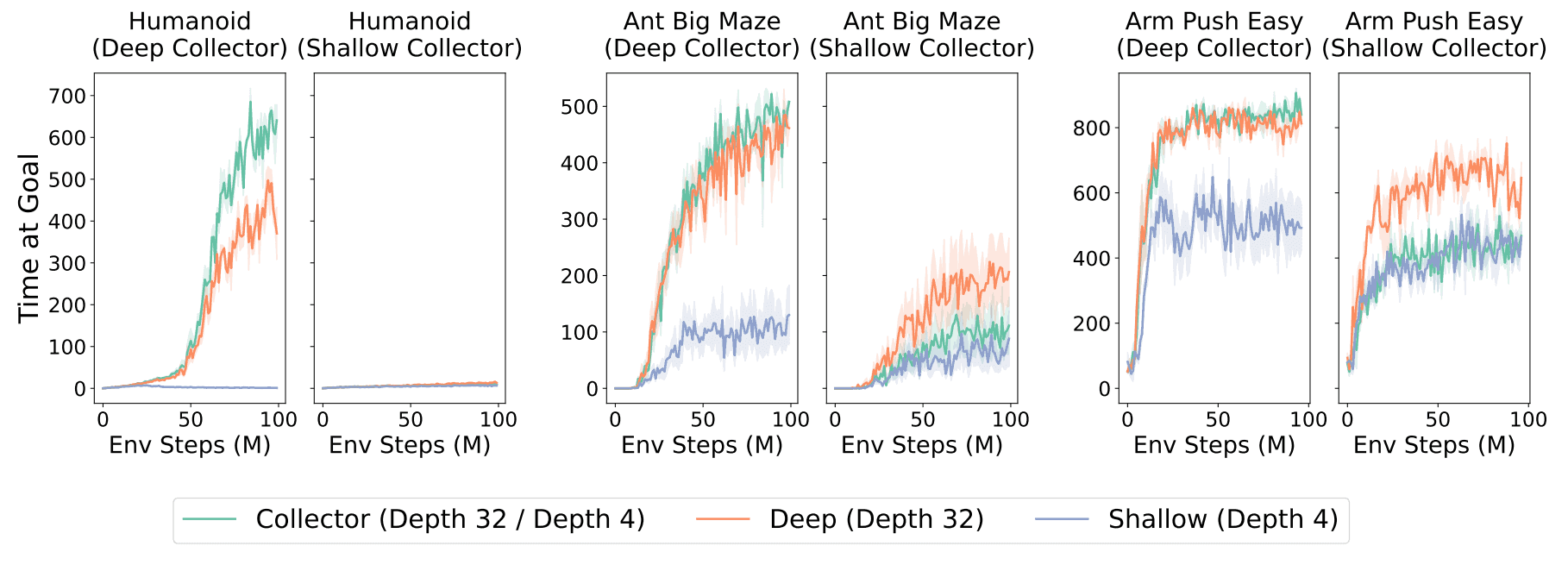

General results from this publication, computed across a wide range of RL benchmark tasks, show consistent improvement, with performance ranging from 2 to 50 times better. A crucial observation confirmed here is that the improvements appear in “jumps” rather than smoothly.

Source: https://wang-kevin3290.github.io/scaling-crl/static/figures/main_results.gif

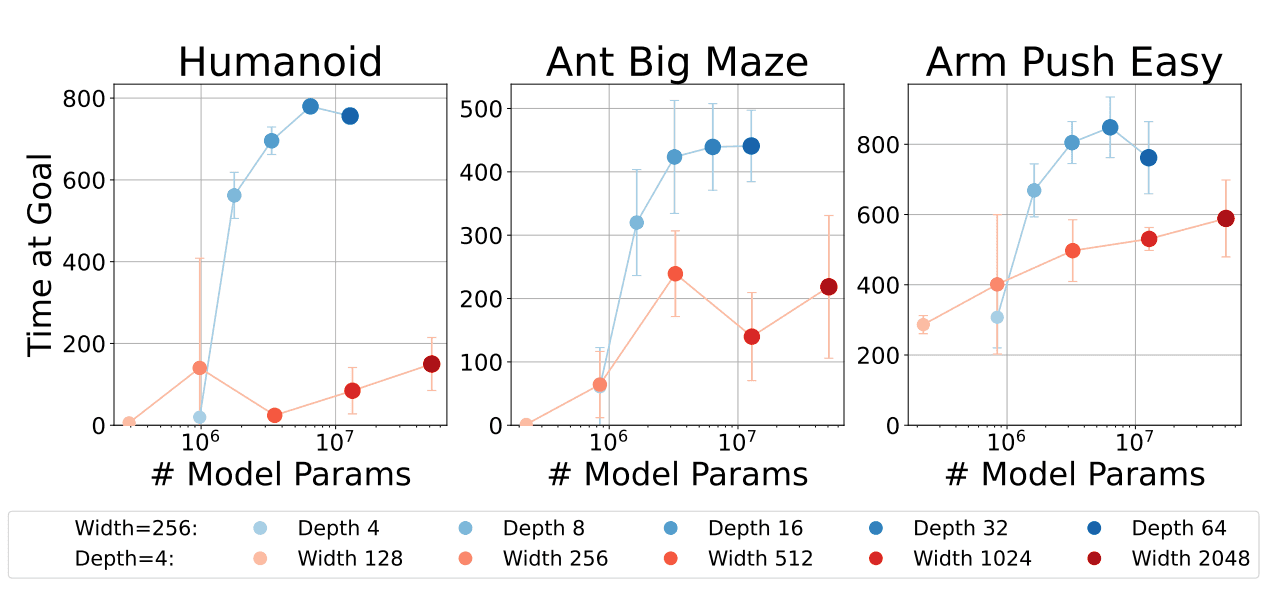

Depth vs. Width

Making networks wider usually improves performance as well, so the most important question about scaling is which gives better results for approximately the same number of parameters: an increase in width or an increase in depth? It turns out that scaling in depth is significantly more parameter-efficient than scaling in width in almost all cases.
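To see why the comparison has to be made at a matched parameter budget, it helps to count parameters. The sketch below is plain arithmetic for a generic MLP; the input, output, and hidden sizes are hypothetical and normalization parameters are ignored. It does not reproduce any result from the paper, it only shows that doubling the width costs far more parameters than doubling the depth.

```python
def mlp_param_count(in_dim, width, depth, out_dim):
    """Weights and biases of an MLP with `depth` hidden Dense layers of size `width`."""
    first = in_dim * width + width                   # input -> first hidden layer
    hidden = (depth - 1) * (width * width + width)   # hidden -> hidden layers
    last = width * out_dim + out_dim                 # last hidden layer -> output
    return first + hidden + last

# Hypothetical sizes, chosen only for illustration
base = mlp_param_count(in_dim=32, width=256, depth=4, out_dim=64)
deeper = mlp_param_count(in_dim=32, width=256, depth=8, out_dim=64)
wider = mlp_param_count(in_dim=32, width=512, depth=4, out_dim=64)

print(f"base:   {base:>8,d} parameters")
print(f"deeper: {deeper:>8,d} parameters ({deeper / base:.2f}x base)")
print(f"wider:  {wider:>8,d} parameters ({wider / base:.2f}x base)")
```

Hidden-layer parameters grow linearly with depth but quadratically with width, so a wider network spends its budget much faster than a deeper one.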

Why scaling works

Better contrastive representations

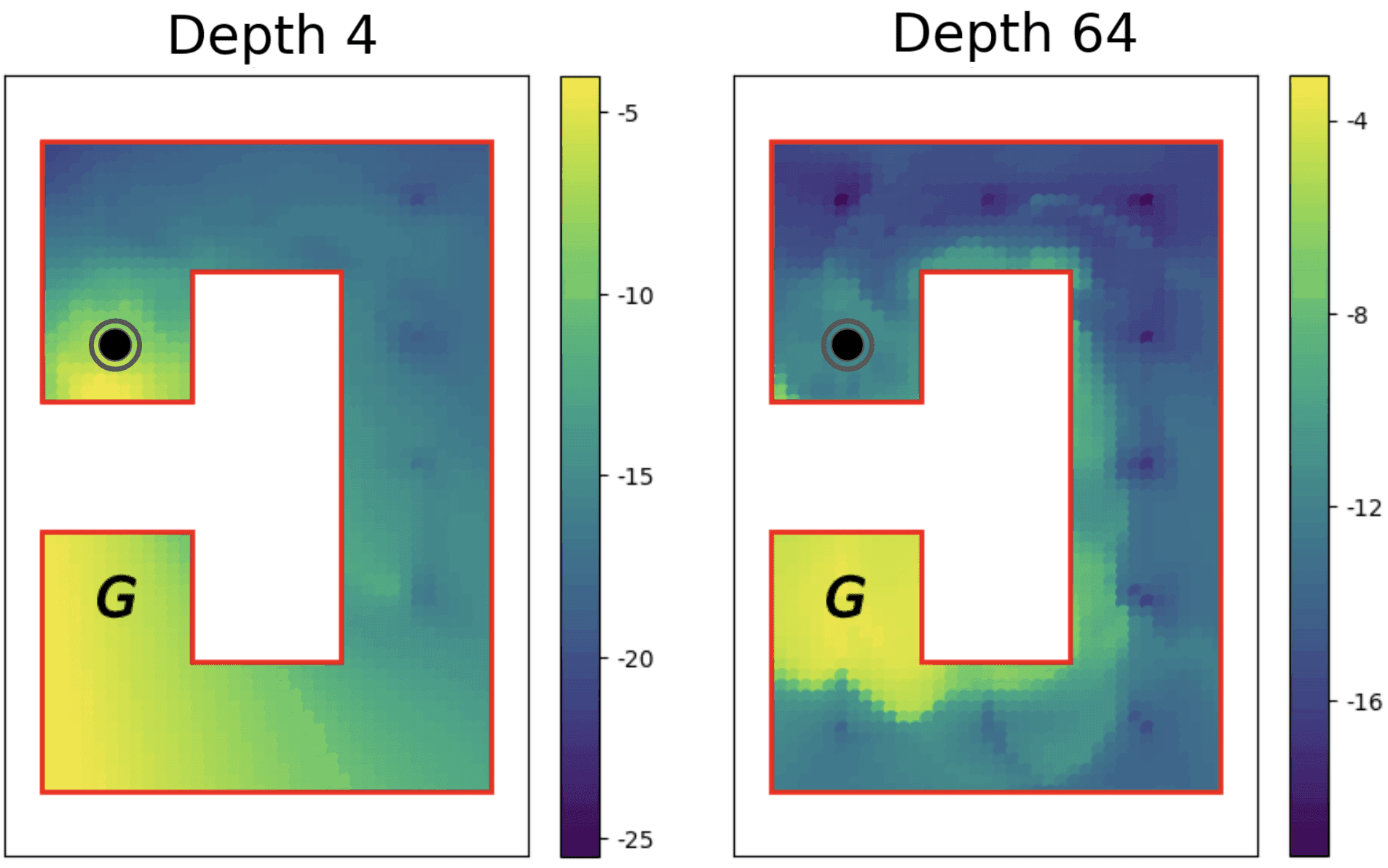

One possible explanation for why depth scaling works so well is that depth improves the internal contrastive representations learned by the neural network. This was demonstrated using Q-values for the U4-Maze problem: a network of depth 4 seems to follow a simple Euclidean distance to the goal (G), disregarding the maze walls, whereas a deep network with 64 layers captures the maze topology much better.
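The gap between Euclidean distance and distance along the maze can be made concrete with a toy example. The grid below is a hypothetical U-shaped maze invented for this illustration (it is not the U4-Maze environment from the paper), and breadth-first search stands in for "knowing the maze topology".

```python
import math
from collections import deque

# '#' is a wall, 'S' the start, 'G' the goal; the outer border is all walls
MAZE = [
    "#####",
    "#S#G#",
    "#.#.#",
    "#...#",
    "#####",
]

def find(maze, ch):
    for r, row in enumerate(maze):
        c = row.find(ch)
        if c != -1:
            return (r, c)
    raise ValueError(f"{ch!r} not found in maze")

def shortest_path_length(maze, start, goal):
    """Breadth-first search over free cells; returns the number of steps or None."""
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), dist = queue.popleft()
        if (r, c) == goal:
            return dist
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nxt = (r + dr, c + dc)
            # The wall border keeps every neighbour index inside the grid
            if maze[nxt[0]][nxt[1]] != "#" and nxt not in seen:
                seen.add(nxt)
                queue.append((nxt, dist + 1))
    return None

start, goal = find(MAZE, "S"), find(MAZE, "G")
euclidean = math.dist(start, goal)
through_maze = shortest_path_length(MAZE, start, goal)
print(euclidean, through_maze)  # prints: 2.0 6
```

A value function that tracks the straight-line distance would consider the start nearly at the goal, while the agent actually has to walk around the wall; a representation that respects the maze topology reflects the longer path.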

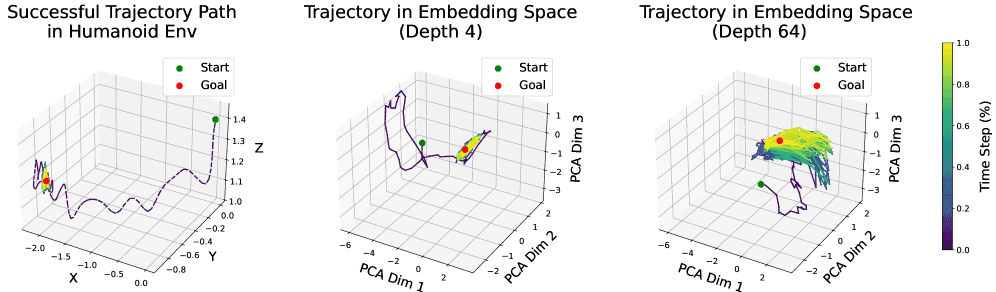

More representational capacity is allocated near the Goal

State-action embeddings in deep networks tend to spread out the representations of meaningful states around the goal, whereas shallow networks cluster near-goal embeddings more tightly together. This is particularly important in a self-supervised setting, where goal-relevant states should be separated from random ones. It shows that deep networks learn more effective representations in the regions that matter most for downstream tasks.

Partial experience stitching

Scaling depth also improves how well learned policies generalize to tasks unseen during training. This was shown by restricting the training dataset to start-goal pairs chosen so that no evaluation pair was encountered during training, which means the agent has to stitch together pieces of separate experience to solve the evaluation tasks. Performance improved significantly with increasing network depth, first from 4 to 16 and then from 16 to 64.

Why it is important

It is a conceptually simple change to Reinforcement Learning that was shown to give significant improvements. At the same time, it is a big step towards RL getting its own large models through better simultaneous optimization of modelling and data collection, without reducing problems to natural language or computer vision problems. The publication also provides a wide variety of experiments and ablation studies that disentangle the factors contributing to this success.

Further reading & code: