I've always claimed there's no better way to learn anything than to build something with your own hands... and the second best way is to do a Code Review of someone else's code.

Some time ago at VirtusLab, a sobering thought struck us – our "collection" of starred projects on GitHub had grown to gigantic proportions, bringing little real value to us, or the wider community. We therefore decided to change our approach: introduce some regularity and become chroniclers of these open-source gems. This way, we will understand them better and discover those where we can genuinely help.

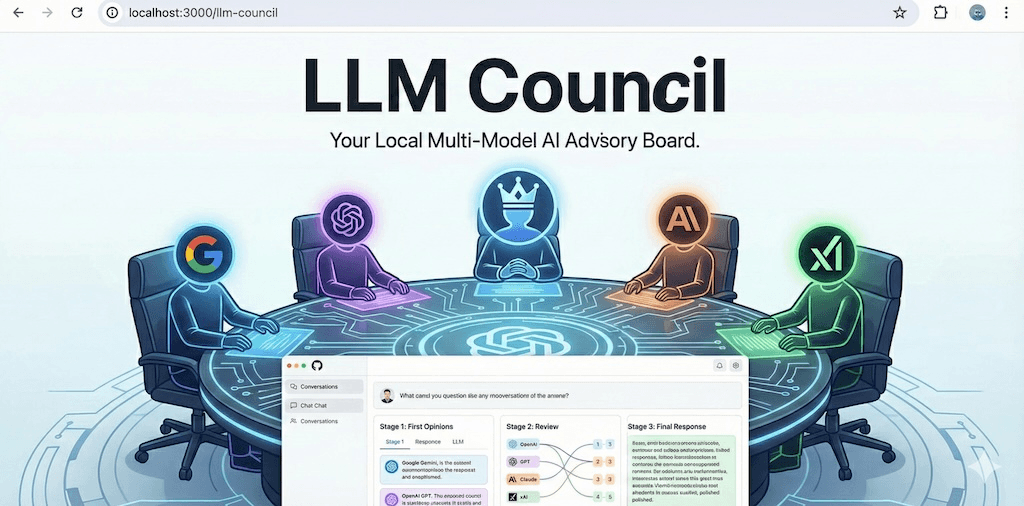

Today, we are taking on a project that made waves on Twitter (or X) and GitHub, not so much because of the complexity of the code, but because of the philosophy behind it, and above all, because of its author, Andrej Karpathy. And in this article, we'll discuss his AI consensus mechanism called llm-council.

Why does this relatively small project deserve such an extensive analysis? Because llm-council is a testing ground for two fundamental shifts in software engineering: the move from relying on a single LLM to an Ensemble Architecture, and the shift from the "Clean Code" paradigm to the controversial "Vibe Coding". If you are an engineer trying to understand where the industry is heading in the era of generative AI, this 400-line Python script is probably more important than the latest version of Enterprise Java Beans (as much as I regret saying this as an old Java developer).

Brew some coffee. As always, we are going deep.

Genesis and Philosophy

Usually, to understand the code, we first need to understand the context of its creation. In a traditional Code Review, we look for robustness, test coverage, and adherence to SOLID principles. In the case of llm-council, we must suspend these expectations and look at the project through the lens of a new, emerging methodology.

Andrej Karpathy, former Director of AI at Tesla and a founding member of OpenAI, published this repository with a caveat that sparked a wave of discussion in the developer community (and probably sent shivers of fear down the spines of QA engineers). He described the project as being 99% "vibe coded". It is, as the author writes, a "fun Saturday hack" that is intended to be ephemeral.

"Code is ephemeral now, and libraries are over," writes Karpathy in the README file. "Ask your LLM to change it however you like".

The Problem: Single Model Fallacy

Why did Karpathy build this tool in the first place? Because we live in an age of model fragmentation. We have GPT-5.1 (hypothetically included in the code as future-proofing), Gemini 3.0 Pro from Google, Claude 3.5 Sonnet from Anthropic, Grok from xAI, and open-source models like Llama.

Most developers and users suffer from what can be called Model “Monoculture” Syndrome. We choose one provider, subscribe to their Pro plan, and treat their answers as gospel. This makes sense, both for cost reasons and the cognitive overhead of using a wider range of tools.

However, LLMs are probabilistic engines. They hallucinate, but they also have their own "personalities," biases, and specific failure modes.

- Gemini might be concise, but dry and risk-averse.

- Claude might be verbose, literary, but overly cautious.

- GPT might be confident, but factually incorrect on niche topics.

It was something visible way before LLMs came along.

Relying on a single model for complex reasoning is somewhat like having a Board of Directors with one member. There is no debate, no cross-examination, and no error correction mechanism.

Karpathy proposes a solution to the problem by implementing an Ensemble Architecture at the application layer level. He automates the process of:

- Polling: Querying all available "experts".

- Peer Review: Asking them to critique each other.

- Synthesis: Asking a leader to make the final decision.

This somewhat mirrors human decision-making structures (parliaments, scientific reviews, medical councils) and applies them to stochastic AI agents.

Architecture - The Council Protocol

The llm-council architecture is a Distributed Multi-Agent Consensus System, orchestrated by a lightweight central controller. The project avoids the complexity of heavy agent frameworks (such as LangChain, CrewAI, or AutoGen) in favor of pure data flow logic.

The flow consists of three distinct stages:

| Phase | Name | Architectural Role | Key Mechanism | Business/Logical Goal |
| --- | --- | --- | --- | --- |
| Stage 1 | First Opinions | Generation / Divergence | Fan-Out, Parallelism (asyncio) | Maximizing entropy and diversity of perspectives. |
| Stage 2 | Peer Review | Evaluation / Convergence | Anonymization, Cross-Critique | Eliminating hallucinations and bias. |
| Stage 3 | Final Synthesis | Reduction / Decision | Map-Reduce, Leader (Chairman) | Generating a single, coherent answer for the user. |
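The three stages in the table can be sketched as a plain async pipeline. This is a minimal illustration, not the repository's code: `ask` is a hypothetical stand-in for a real model call, and the model names are placeholders.

```python
import asyncio

# Hypothetical stand-in for a real LLM call; it only echoes its inputs.
async def ask(model: str, prompt: str) -> str:
    await asyncio.sleep(0)  # yield control, as a network call would
    return f"[{model}] {prompt[:40]}"

async def run_council(query: str, council: list[str], chairman: str) -> dict:
    # Stage 1: fan the query out to every council member in parallel.
    opinions = await asyncio.gather(*(ask(m, query) for m in council))

    # Stage 2: every member reviews the full set of anonymized opinions.
    bundle = "\n".join(f"Response {chr(65 + i)}: {o}" for i, o in enumerate(opinions))
    reviews = await asyncio.gather(
        *(ask(m, f"Rank these answers to '{query}':\n{bundle}") for m in council)
    )

    # Stage 3: the chairman reduces everything to a single answer.
    final = await ask(chairman, f"Synthesize: {query}\n{bundle}\n" + "\n".join(reviews))
    return {"stage1": list(opinions), "stage2": list(reviews), "final": final}

result = asyncio.run(
    run_council("What is 2+2?", ["model-a", "model-b", "model-c"], "model-chair")
)
```

Note that there are no loops and no tool calls: the whole flow is two fan-outs followed by one fan-in.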

Stage 1: Divergence Phase (First Opinions)

In the first phase, the system maximizes entropy and diversity. The user's query is broadcast in parallel to every member of the configured Council.

- Architectural Goal: Mitigate "seed bias". By querying different models (different weights, different training sets, different RLHF methods), we ensure that the solution space is broadly explored. If GPT-4 gets stuck in a local minimum of a logical error, there's a high chance that Claude 3.5 or Gemini will approach the problem from a different angle.

- Technical Implementation: This is a classic "Fan-Out" pattern. The backend triggers asynchronous calls to the OpenRouter API for each model defined in the COUNCIL_MODELS list in the configuration file.

- OpenRouter as a Facade: This is a key architectural decision. Instead of implementing five different SDKs (OpenAI SDK, Anthropic SDK, Google Vertex SDK, etc.) and worrying about query formatting differences, the project uses OpenRouter as a Unified Interface Facade. This separates the application logic from vendor-specific APIs, allowing Council members to be swapped out via a simple configuration file change without code modification.

- Performance: By using Python's asyncio, queries are sent in parallel. The duration of this phase is determined by the slowest model ($latency = \max(t_1, t_2, \ldots, t_n)$), not the sum of their times, which is critical for UX.
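To make the latency claim concrete, here is a small timing experiment. The latencies are made-up numbers and `fake_call` is a hypothetical placeholder that sleeps instead of calling a real API.

```python
import asyncio
import time

# Simulated per-model latencies in seconds (made-up numbers for illustration).
LATENCIES = {"model-a": 0.1, "model-b": 0.2, "model-c": 0.3}

async def fake_call(model: str) -> str:
    await asyncio.sleep(LATENCIES[model])  # stands in for waiting on the network
    return model

async def timed_fan_out() -> tuple[list[str], float]:
    start = time.perf_counter()
    done = await asyncio.gather(*(fake_call(m) for m in LATENCIES))
    return list(done), time.perf_counter() - start

answers, elapsed = asyncio.run(timed_fan_out())
print(f"parallel: {elapsed:.2f}s, sequential would be {sum(LATENCIES.values()):.2f}s")
```

The measured time should land close to the slowest simulated call rather than the sum of all three, and `asyncio.gather` returns results in the order the calls were submitted, not the order they finished.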

Stage 2: Convergence Phase (Anonymous Review)

If Stage 1 serves to generate ideas, Stage 2 serves for filtering and quality control. Each model is presented with the responses generated by its peers (and by itself).

- The Key Pattern is Anonymization: Karpathy explicitly states, "LLM identities are anonymized so the LLM cannot favor anyone".

- Research (e.g., "Large Language Models as Judges") suggests that models often exhibit "self-preference bias" - preferring their own output or output from the same model family - or "length bias", preferring longer answers. By removing metadata and labeling the inputs as "Response A," "Response B," "Response C," the architecture forces models to evaluate based on semantic content, not brand reputation.

- Task: Models are asked to rank the responses for accuracy and insight. This moves LLMs from the role of generators into the role of evaluators (Discriminators). In machine learning, discriminative tasks are often easier to learn and more precise than generative ones: it's easier to recognize a good image than to paint one.

- Data Flow: This is an operation with complexity $O(N^2)$ in context (more precisely $N \times N$, where each model evaluates each response). The system collects these critiques, creating a rating matrix.
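The anonymization and the rating matrix described above could look roughly like this. It is a sketch under assumptions: the function names, the placeholder model IDs, and the choice of average position as the aggregate score are illustrative and not taken from the repository.

```python
from collections import defaultdict
from string import ascii_uppercase

def anonymize(responses: dict[str, str]) -> tuple[dict[str, str], dict[str, str]]:
    """Replace model names with neutral labels; return (labelled, label -> model)."""
    labelled, label_to_model = {}, {}
    for letter, (model, text) in zip(ascii_uppercase, responses.items()):
        label = f"Response {letter}"
        labelled[label] = text
        label_to_model[label] = model
    return labelled, label_to_model

def average_rank(
    rankings: list[list[str]], label_to_model: dict[str, str]
) -> list[tuple[str, float]]:
    """Each ranking lists labels best-first; a lower average position is better."""
    positions = defaultdict(list)
    for ranking in rankings:
        for pos, label in enumerate(ranking, start=1):
            positions[label_to_model[label]].append(pos)
    scores = [(model, sum(p) / len(p)) for model, p in positions.items()]
    return sorted(scores, key=lambda item: item[1])

responses = {"model-x": "Answer one", "model-y": "Answer two", "model-z": "Answer three"}
labelled, label_to_model = anonymize(responses)

# Three reviewers each rank all three responses: an N x N rating matrix.
rankings = [
    ["Response B", "Response A", "Response C"],
    ["Response B", "Response C", "Response A"],
    ["Response A", "Response B", "Response C"],
]
leaderboard = average_rank(rankings, label_to_model)
```

Reviewers only ever see the keys of `labelled`. The mapping back to real model names stays on the server and is applied after the votes are in.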

Stage 3: Synthesis Phase (The Chairman)

The final stage resolves conflict and reduces information noise, much like in classic distributed system algorithms. A designated model (defined as CHAIRMAN_MODEL, defaulting to something powerful like Gemini 3 Pro) receives a large context window containing the original user query, all candidate responses from Stage 1, and all reviews and rankings from Stage 2.

In Map-Reduce terms, the problem was mapped across multiple agents, their critiques were collected as an intermediate reduction, and now the Chairman performs the final reduction to a single, high-quality artifact. The user receives a single answer, but it is one informed by the collective wisdom of the most advanced models on the market. For example, the Chairman can see that "Model A made a calculation error, which Model C noticed," and correct the final output.
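A sketch of how the Chairman's context might be assembled from the three inputs listed above. The wording of the instructions and the function name are assumptions for illustration, not the project's actual prompt.

```python
def build_chairman_prompt(
    query: str, responses: dict[str, str], reviews: dict[str, str]
) -> str:
    """Assemble everything the chairman needs into one prompt string."""
    stage1 = "\n\n".join(f"{label}:\n{text}" for label, text in responses.items())
    stage2 = "\n\n".join(
        f"Review by {reviewer}:\n{text}" for reviewer, text in reviews.items()
    )
    return (
        "You are the chairman of a council of language models.\n\n"
        f"Original question:\n{query}\n\n"
        f"Candidate responses:\n{stage1}\n\n"
        f"Peer reviews and rankings:\n{stage2}\n\n"
        "Write one final answer. Prefer claims the reviewers agreed on and "
        "correct any errors they pointed out."
    )

prompt = build_chairman_prompt(
    "What is 17 * 23?",
    {"Response A": "17 * 23 = 381", "Response B": "17 * 23 = 391"},
    {"Reviewer 1": "Response A miscalculates; B is correct.\nFINAL RANKING: B, A"},
)
```

Because the prompt grows with both the number of responses and the number of reviews, the Chairman needs a model with a generous context window.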

Part III: Code Review and Patterns – The "Meat" for Engineers

Let's now move on to the analysis of the code itself. Remember that this is "vibe coded". We are not looking for perfection here, but for cool solutions and patterns that we can transfer to our production systems. The main logic is located in the files backend/council.py, backend/openrouter.py, and backend/main.py.

Pattern 1: Unified Gateway

The openrouter.py file demonstrates how to abstract the complexity of the AI ecosystem. Instead of a complicated Factory Pattern with different adapters for Azure, AWS Bedrock, or OpenAI, the code relies on the industry standardization around the "Chat Completions API" format, which OpenRouter exposes.

Conceptual snippet illustrating the pattern in openrouter.py:
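What follows is a minimal sketch of that idea rather than the repository's actual code. It uses only the standard library. The function names, the placeholder model IDs, and the `OPENROUTER_API_KEY` environment variable name are assumptions. The endpoint and the `choices[0].message.content` response shape follow the Chat Completions format that OpenRouter exposes.

```python
import json
import os
import urllib.request

OPENROUTER_URL = "https://openrouter.ai/api/v1/chat/completions"

def build_request(model: str, prompt: str, api_key: str) -> urllib.request.Request:
    """One request shape for every vendor: only the `model` string changes."""
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        OPENROUTER_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"},
        method="POST",
    )

def query_model(model: str, prompt: str) -> str:
    """Send the request and pull the text out of the Chat Completions response."""
    req = build_request(model, prompt, os.environ["OPENROUTER_API_KEY"])
    with urllib.request.urlopen(req, timeout=120) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]

# Swapping vendors is a string change, not a code change (model IDs are placeholders).
req_a = build_request("vendor-a/some-model", "Hello", api_key="sk-test")
req_b = build_request("vendor-b/other-model", "Hello", api_key="sk-test")
```

`query_model` is defined but not called here, since it needs a real API key and network access.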

Pattern 2: Asynchronous Parallelism (The "Fan-Out" Pattern)

Latency is the enemy of agent flows. If we ran the Council sequentially (Ask GPT-4 -> wait -> Ask Claude -> wait...), a single turn could take minutes.

Karpathy uses Python's asyncio library to parallelize these operations. In backend/openrouter.py, we see the query_models_parallel function, which uses asyncio.gather(). This is critical for "System 2 Thinking" type applications (requiring deliberation). If your application requires "thinking" (many steps), you must parallelize the branches of that process. The result is efficient I/O-bound execution. The Global Interpreter Lock (GIL) in Python is not the bottleneck here because tasks are waiting for network responses.
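A sketch of what such a fan-out helper can look like. The name `query_models_parallel` comes from the project, but the signature, the injected `query_fn`, and the choice to map failures to `None` are assumptions made for this example.

```python
import asyncio
from typing import Awaitable, Callable, Optional

QueryFn = Callable[[str, str], Awaitable[str]]

async def query_models_parallel(
    models: list[str], prompt: str, query_fn: QueryFn
) -> dict[str, Optional[str]]:
    """Query every model concurrently; a failed model maps to None instead of
    aborting the whole round."""
    results = await asyncio.gather(
        *(query_fn(m, prompt) for m in models), return_exceptions=True
    )
    return {
        m: (None if isinstance(r, BaseException) else r)
        for m, r in zip(models, results)
    }

# Fake backend for demonstration: one model fails, the others answer.
async def fake_query(model: str, prompt: str) -> str:
    await asyncio.sleep(0.01)
    if model == "model-b":
        raise TimeoutError("simulated timeout")
    return f"{model} says hi"

replies = asyncio.run(
    query_models_parallel(["model-a", "model-b", "model-c"], "hi", fake_query)
)
```

Passing `return_exceptions=True` matters in a council: without it, one flaky provider would raise out of `gather` and the caller would lose the other members' answers for that round.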

Pattern 3: Prompt as Code

How do you make an LLM a good judge? You need to "program" it with the right prompt. The code in backend/council.py constructs a prompt that forces a specific output schema for ranking (a grading rubric).

The prompt probably looks like this:
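One plausible shape, sketched in Python. The prompt wording, the `FINAL RANKING:` marker, and both function names are assumptions for illustration. The point is that the prompt and the parser form a contract: the prompt demands a schema, and the parser relies on it.

```python
import re

def build_ranking_prompt(query: str, labelled: dict[str, str]) -> str:
    """Ask a model to critique anonymized answers and end with a parseable ranking."""
    answers = "\n\n".join(f"{label}:\n{text}" for label, text in labelled.items())
    return (
        f"Question: {query}\n\n"
        f"Here are anonymized responses from different models:\n\n{answers}\n\n"
        "Evaluate each response for accuracy and insight. Then finish with a line "
        "'FINAL RANKING:' followed by a numbered list, best first, e.g.\n"
        "1. Response B\n2. Response A"
    )

def parse_ranking(review: str) -> list[str]:
    """Extract 'Response X' labels that appear after the FINAL RANKING marker."""
    _, marker, tail = review.partition("FINAL RANKING:")
    if not marker:
        return []  # the model ignored the schema; the caller decides what to do
    return re.findall(r"Response [A-Z]", tail)

review = "A is vague, B is precise.\nFINAL RANKING:\n1. Response B\n2. Response A"
ranking = parse_ranking(review)
```

Only text after the marker is parsed, so labels mentioned in the free-form critique do not pollute the ranking.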

Part IV: Lessons for engineers

What can we learn from llm-council to apply in our daily enterprise work?

Lesson 1: Anonymization is a Feature

If you are building RAG systems or evaluation pipelines (LLM-as-a-Judge), anonymize your inputs. If you use an LLM to evaluate support tickets, remove agent names. If you use an LLM to evaluate code, remove comments revealing the author. Biases in LLMs are subtle and pervasive. Architectural anonymization (Stage 2) is a cheap and effective mitigation (resilience) strategy.
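For the support-ticket case, a redaction step can be as small as the following sketch. It assumes you already have a list of agent names to scrub. The function name and the `[AGENT]` placeholder are made up for this example.

```python
import re

def redact_names(text: str, names: list[str], placeholder: str = "[AGENT]") -> str:
    """Replace each known name (whole word, case-insensitive) with a placeholder."""
    if not names:
        return text
    pattern = r"\b(" + "|".join(re.escape(n) for n in names) + r")\b"
    return re.sub(pattern, placeholder, text, flags=re.IGNORECASE)

ticket = "Handled by Anna. anna escalated to Marek after 2 hours."
clean = redact_names(ticket, ["Anna", "Marek"])
```

A name list will not catch everything (nicknames, email addresses, signatures), so treat this as a first filter rather than a guarantee.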

Lesson 2: The "Council" Pattern is the Future of High-Stakes Systems

For low-risk tasks (talking about the weather), one model is enough. For high-risk tasks (medical diagnosis, legal contract analysis, architectural decisions), use a Council. The cost of inference is falling (even if the models themselves are not getting cheaper), but the cost of errors remains high. If spending 5x the inference cost (5 models) reduces the error rate by an order of magnitude, that is a rational economic trade-off for business applications.
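The trade-off can be checked with back-of-the-envelope arithmetic. Every number below is hypothetical, chosen only to illustrate the shape of the calculation. The call count follows the three-stage flow described earlier (N first opinions, N reviews, one Chairman call), so the real multiplier is somewhat above N.

```python
def expected_cost(
    calls: int, cost_per_call: float, error_rate: float, cost_of_error: float
) -> float:
    """Expected cost per question = inference spend + probability-weighted error cost."""
    return calls * cost_per_call + error_rate * cost_of_error

N = 5  # council size

# Hypothetical figures: $0.02 per call, $100 per wrong answer.
single = expected_cost(calls=1, cost_per_call=0.02, error_rate=0.05, cost_of_error=100.0)

# N first opinions + N reviews + 1 chairman call; error rate assumed 10x lower.
council = expected_cost(
    calls=2 * N + 1, cost_per_call=0.02, error_rate=0.005, cost_of_error=100.0
)

print(f"single model: {single:.2f}, council: {council:.2f}")
```

With these assumed inputs the council wins comfortably, but the conclusion flips when errors are cheap, which is why a single model remains the sensible choice for low-stakes queries.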

Lesson 3: Code as a Disposable Artifact

This, I feel, is the hardest pill to swallow. Karpathy treats main.py not as a sacred text to be polished, but as temporary scaffolding supporting the AI. In an AI-native workflow, the Prompt (prompts.py) is an asset. The Python glue (main.py) is a liability. We should spend more time on system prompt engineering and interaction protocol (Stage 1 -> Stage 2 -> Stage 3), and less time obsessively caring about the elegance of the API router, which will be rewritten by AI next month anyway.

Lesson 4: Unified Interfaces are Mandatory

If you hardcode import openai, you are doing it wrong. The AI landscape changes every week (and I'm not exaggerating here). Today, Gemini 3 is the best for long context. Tomorrow, it might be Claude 4.5 Opus. Next week, Llama 33. In 2025, do not write your own custom integrations for every model. Use aggregators (like OpenRouter) or unifying libraries (like LiteLLM). The llm-council architecture relies on models being commoditized endpoints. Thanks to this, the "Chairman" can be Gemini today, and Claude tomorrow, without changing a single line of business logic code.

Lesson 5: The Difference Between a "Council" and an "Agent"

It is worth noting how llm-council differs from popular "Agents" (such as deepagents or AutoGPT) that we have written about before.

Table 2: llm-council vs. Autonomous Agents:

| Feature | Agents (e.g., deepagents, AutoGPT) | Council (llm-council) |
| --- | --- | --- |
| Flow Control | Loops, Cyclicality (ReAct Loop) | Linear, Multi-Stage (DAG) |
| Tool Use | Heavy (File system, Search, API) | Minimal (Conversation/Text only) |
| Complexity | High (Context management, loops) | Low (Fan-out, Fan-in) |
| Goal | "Execute the task" (Action-oriented) | "Answer the question" (Cognition-oriented) |
| Reliability | Low (Susceptible to loops, getting stuck) | High (Deterministic flow) |

llm-council IS NOT an agent in the classic sense. It is a Reasoning Pipeline. For many business applications (summaries, decision support), you do not need an unpredictable agent that can fall into a loop. You need a disciplined Council that can act as a "Map-Reduce" for Intelligence.

As you can see, there are quite a few lessons here, so a well-deserved star on GitHub from me. 😉