“I’ve always claimed there’s no better way to learn something than to build something… and the second best is reviewing someone else’s code 😁.”

A slightly sad realization hit us at VirtusLab recently — our “collection” of starred repositories has grown to ridiculous proportions. So we decided to change the approach: every other Wednesday, we pick one trending repo and take it apart piece by piece.

Why not GasTown?

source: https://steve-yegge.medium.com/steveys-birthday-blog-34f437139cb5

Honestly? It was tempting, but I didn’t feel ready to wade through GasTown… at least not yet. It’s simply too much of a beast.

For the uninitiated: GasTown is Steve Yegge’s magnum opus from the turn of the year. It’s a full next-gen environment (not an IDE, an environment…) where, instead of writing code yourself, you manage a herd of AI agents that do it for you. GasTown is the orchestrator of that chaos.

I truly wanted to do that, but the amount of code (Go), the number of systems, and the sheer complexity are overwhelming the moment you run your first git clone.

So I went with “divide and conquer.” Instead of choking on the entire GasTown engine, I pulled out one key component – the thing without which the agents are basically useless.

That component is Beads.

PS: Beads is evolving fast; this review is based on v0.47.1. Still, all the interesting findings remain true 😊

OK, so what was this built for, and what problem does it solve?

Anyone who’s tried using Cursor, Claude Dev, or Windsurf for larger tasks knows this pain. The agent starts strong, but after 10 minutes (or ~50 messages in context), it begins to lose the plot. It forgets what it already fixed. It forgets what it was supposed to do next. It hallucinates that the task is “Done.”

Typically, agents cope by creating files like TODO.md or PLAN.md.

The problem: Markdown is just text. For an agent, “updating status” in Markdown means editing a text file, which often ends with the agent accidentally deleting half the plan or creating Git conflicts when two agents work in parallel.

Beads solves this in a brutally effective way. It’s not a “to-do list,” but a distributed, Git-backed task database (an issue tracker) designed not for humans, but for machines.

Main goals:

- Persistence: The agent’s memory must survive a session restart, a computer crash, or even switching the LLM model.

- Graph structure: Tasks have dependencies. You can’t start “Task B” while it is blocked by an unfinished “Task A.” Beads enforces that graph.

- Agent UX: This is the key concept. The API and tool output are optimized to use as few tokens as possible and to be unambiguous for an LLM.
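Enforcing a dependency graph usually starts with keeping it acyclic: if B waits on A, then A must not (even transitively) wait on B, or neither task could ever become ready. Here is a minimal Go sketch of that guard. It is not taken from the Beads source, and `Graph`, `AddDependency`, and `ErrCycle` are hypothetical names.

```go
// Hypothetical sketch, not Beads' source: a dependency graph that rejects cycles.
package main

import (
	"errors"
	"fmt"
)

// ErrCycle is returned when a new dependency would close a loop.
var ErrCycle = errors.New("dependency would create a cycle")

// Graph maps each task ID to the IDs of the tasks it waits on (hypothetical type).
type Graph struct {
	deps map[string][]string
}

func NewGraph() *Graph { return &Graph{deps: map[string][]string{}} }

// reachable reports whether target can be reached from start by following
// "waits on" edges (iterative depth-first search).
func (g *Graph) reachable(start, target string) bool {
	seen := map[string]bool{}
	stack := []string{start}
	for len(stack) > 0 {
		n := stack[len(stack)-1]
		stack = stack[:len(stack)-1]
		if n == target {
			return true
		}
		if seen[n] {
			continue
		}
		seen[n] = true
		stack = append(stack, g.deps[n]...)
	}
	return false
}

// AddDependency records that task waits on blocker. If blocker already waits
// on task (directly or transitively), the new edge would create a cycle.
func (g *Graph) AddDependency(task, blocker string) error {
	if g.reachable(blocker, task) {
		return ErrCycle
	}
	g.deps[task] = append(g.deps[task], blocker)
	return nil
}

func main() {
	g := NewGraph()
	fmt.Println(g.AddDependency("bd-b", "bd-a")) // <nil>: B waits on A
	fmt.Println(g.AddDependency("bd-a", "bd-b")) // rejected: A would wait on B
}
```

Rejecting the edge at write time means every later query can assume a DAG, which is what makes a "what can I work on now?" answer well-defined.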

Architecture: Git as a Database

Steve Yegge (the creator, and a Google and Amazon alumnus) went all-in on a “Git-native” architecture.

1. Data Model (The Bead)

In Beads, the source of truth is file-based (Git-tracked). There’s no central SQL server; SQLite is used only as a local read-model cache.

Tasks (issues) are stored in a hidden .beads/ directory.

- Format: JSONL (JSON Lines). Why? Because it’s an ideal format for Git. If you add a new comment to a task, you append a new line at the end of the file. Git merges tend to handle this well and are often conflict-resistant during parallel work.

- ID: Instead of sequential IDs (1, 2, 3) that collide in distributed systems, Beads uses shortened hashes (e.g., bd-a1b2).

2. Hybrid Storage (The Performance Hack)

Reading a growing append-only JSONL log on every query would be slow. So Beads uses a hybrid architecture:

- Source of Truth: files in .beads/ (committed to Git)

- Read Model: a local SQLite database acting as a cache

When you run the tool, it “hydrates” the SQLite DB from the JSONL files, and then queries like “show me tasks to do” run blazingly fast via SQL.

Code Review: Patterns and Techniques

The project is written in Go (about 95% of the codebase). Yegge chose Go for speed, static typing, and easy distribution (a single binary).

Let’s look at the most interesting parts.

1. Agent-First Output (The “Token Economy” Pattern)

This is something we can learn from if we’re building tools for AI. Here’s what the output of the bd ready command (which shows what’s ready to work on) looks like when an agent requests it (the --json flag):

Notice the BlockedBy field. Beads implements a topological sort of tasks. The tool avoids dumping all tasks – it can output a focused list of ‘Ready’ (unblocked) items.

Pattern: the tool does the “thinking” for the agent. Instead of making the LLM analyze a dependency graph (which burns tokens and is error-prone), Beads handles it deterministically in Go and serves up only what can be worked on right now.

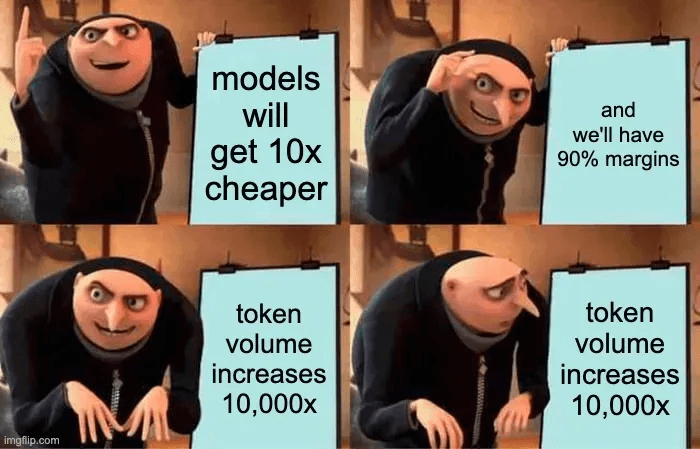

’Cause you know… Jevons paradox is a thing.
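The “ready” rule itself is small: a task is ready when it is still open and every task it is blocked by is closed. Here is a hedged Go sketch of that rule. The `Task` type and its fields are assumptions (only the BlockedBy field name comes from the article), and this is not Beads’ implementation.

```go
// Hypothetical sketch, not Beads' source: computing the set of "ready" tasks.
package main

import (
	"fmt"
	"sort"
)

// Task is an assumed shape; only the BlockedBy idea comes from the article.
type Task struct {
	ID        string
	Title     string
	Status    string   // "open" or "closed" in this sketch
	BlockedBy []string // IDs of tasks that must be closed first
}

// Ready returns open tasks whose blockers are all closed, sorted by ID.
// Unknown blocker IDs count as unresolved, so they keep a task blocked.
func Ready(tasks []Task) []Task {
	closed := map[string]bool{}
	for _, task := range tasks {
		if task.Status == "closed" {
			closed[task.ID] = true
		}
	}
	var ready []Task
	for _, task := range tasks {
		if task.Status != "open" {
			continue
		}
		unblocked := true
		for _, dep := range task.BlockedBy {
			if !closed[dep] {
				unblocked = false
				break
			}
		}
		if unblocked {
			ready = append(ready, task)
		}
	}
	sort.Slice(ready, func(i, j int) bool { return ready[i].ID < ready[j].ID })
	return ready
}

func main() {
	tasks := []Task{
		{ID: "bd-a1b2", Title: "Design schema", Status: "closed"},
		{ID: "bd-c3d4", Title: "Write migration", Status: "open", BlockedBy: []string{"bd-a1b2"}},
		{ID: "bd-e5f6", Title: "Ship release", Status: "open", BlockedBy: []string{"bd-c3d4"}},
	}
	for _, task := range Ready(tasks) {
		fmt.Println(task.ID, task.Title) // bd-c3d4 Write migration
	}
}
```

Asking for the ready set again after each task is closed walks the graph in topological order: it is the frontier of Kahn’s algorithm, with an edge counted only while its blocker is still open. Treating unknown blocker IDs as blocking is a conservative choice made for this sketch.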

2. Idempotency and “Vibe Coding”

In the code, you can see an obsession with fault tolerance. Agents often make mistakes, crash, or get interrupted halfway through generating a file.

Yegge uses an Append-Only Log pattern for a task’s history.

This way, even if the agent “drops” mid-write, the file isn’t corrupted (at worst, the last line needs to be discarded). The change history stays complete (think: ‘git blame on steroids’, but for task state). Each change is recorded as a new JSON line in the shared log, which makes Git merges naturally conflict-resistant.
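Reading the log defensively is where the “at worst, discard the last line” property pays off. A minimal sketch, assuming one JSON object per line: an unparseable final line without a trailing newline is treated as a torn write and dropped, while corruption anywhere else is reported as an error. That split is a design choice of this example, not necessarily what Beads does, and `ReadLog` is a hypothetical helper.

```go
// Hypothetical sketch, not Beads' source: reading a JSONL log that may end in a torn write.
package main

import (
	"bytes"
	"encoding/json"
	"fmt"
)

// ReadLog returns one raw JSON record per non-blank line. An invalid final
// line with no trailing newline is treated as an interrupted write and
// dropped; an invalid line anywhere else is reported as corruption.
func ReadLog(data []byte) ([]json.RawMessage, error) {
	var records []json.RawMessage
	lines := bytes.Split(data, []byte("\n"))
	for i, line := range lines {
		if len(bytes.TrimSpace(line)) == 0 {
			continue
		}
		if !json.Valid(line) {
			// Only the last element of Split can lack a trailing newline.
			if i == len(lines)-1 {
				break
			}
			return nil, fmt.Errorf("line %d: invalid JSON", i+1)
		}
		records = append(records, json.RawMessage(line))
	}
	return records, nil
}

func main() {
	// The second record was cut off mid-write: no closing brace, no newline.
	data := []byte(`{"id":"bd-a1b2","op":"create"}` + "\n" + `{"id":"bd-a1b2","op":"sta`)
	records, err := ReadLog(data)
	if err != nil {
		panic(err)
	}
	fmt.Println(len(records)) // 1
}
```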

3. Hash-Based ID Generation (Zero Coordination)

How do you avoid two agents working on two different branches, both creating “Issue #5”?

Hash-based IDs enable conflict-free merging of plans. Agent A creates tasks on feature-A, Agent B on feature-B. When you merge into main, both task sets land smoothly, because they’re appended as independent log entries and carry unique IDs.
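Why independent entries merge cleanly can be illustrated with a toy line union: keep every line from both branches in first-seen order, with lines inherited from the common base appearing only once. This is similar in spirit to Git’s built-in `union` merge driver, but it is only an illustration, not Beads’ own merge logic, and `MergeLogs` is a hypothetical name.

```go
// Hypothetical sketch, not Beads' source: a line-union merge of two branch logs.
package main

import "fmt"

// MergeLogs keeps every distinct line from both branches in first-seen order,
// so entries inherited from the common base appear only once.
func MergeLogs(ours, theirs []string) []string {
	seen := map[string]bool{}
	var merged []string
	for _, branch := range [][]string{ours, theirs} {
		for _, line := range branch {
			if !seen[line] {
				seen[line] = true
				merged = append(merged, line)
			}
		}
	}
	return merged
}

func main() {
	base := `{"id":"bd-a1b2","op":"create"}`
	featureA := []string{base, `{"id":"bd-c3d4","op":"create"}`} // Agent A's branch
	featureB := []string{base, `{"id":"bd-e5f6","op":"create"}`} // Agent B's branch
	for _, line := range MergeLogs(featureA, featureB) {
		fmt.Println(line)
	}
}
```

The unique IDs are what make "keep both sides" safe: entries created by different agents do not share an ID, so the union cannot silently fuse two tasks into one.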

If the short ID collides, the tool can extend the prefix (e.g., from 4 to 6+ hex chars) deterministically.

What’s worth learning from it?

By analyzing Beads, we’re learning the engineering of the AI era:

- Human UX vs Agent UX: This is the most important lesson. Beads’ CLI is “rough” for a human, but perfect for an LLM. Parameters are precise, and output is structured (JSON). When building APIs in 2026, you have to ask: “Will Claude understand this output without extra prompting?” It’s the N+1 problem, but for agents: every ambiguous response costs another round trip.

- Git as a backend: Yegge makes a strong case that for teams (and agents) working asynchronously, Git can be a better database than a central SQL server. It lets you branch not only code, but also the state of work (tasks). Because tasks live in the repo, reverting commits can revert task-state changes as well, as long as those changes are captured in the reverted commits. Brilliant in its simplicity.

- Thin Client, Thick Logic: The “what blocks what” logic lives in the tool binary (Go), not in the agent’s “system prompt.” That saves context and money.

Summary

Beads is a small but crucial piece of the GasTown puzzle. It shows that the future of programming isn’t just “smarter models,” but also better tooling for those models.

If you’re building your own agent, or you’re simply tired of TODO.md chaos in your projects, it’s worth taking a look at Beads, even just to see how an industry veteran solves the long-term memory problem in stateless systems.

Well-deserved GitHub star from me ⭐