WebAssembly (Wasm) is a binary instruction format and low-level language that runs in various environments, including browsers. While it was originally designed for browser applications, its adoption is expanding beyond browsers into cloud and edge computing environments, due to Wasm's features:

- Secure execution environment.

- Fast startup and execution times.

- Language independent.

- Architecture/OS independent.

For example, the US NIST recommends Wasm for cloud-native security, Microsoft has released tools for creating a secure MCP server on Wasm, and there are efforts to run Wasm workloads directly on Kubernetes for faster startup times.

Scala.js compiles Scala to JavaScript and, since v1.17, can also compile to WebAssembly. The Wasm backend uses Wasm 3.0 features such as WasmGC (garbage collection) and Wasm exception handling to generate efficient Wasm binaries.

Currently, the generated binaries can be used for browser and Node.js environments, but we're working on generating a Wasm binary for non-browser environments like cloud (so-called "server-side Wasm") using WASI and the Wasm Component Model.

You can take a look at the current work in the scala-wasm repository: https://github.com/scala-wasm/scala-wasm

This article explains the advantages of compiling Scala to Wasm and the future prospects.

Compiling Scala to Wasm for JS Environments

Scala.js can generate Wasm binaries with a few lines of configuration. See https://www.scala-js.org/doc/project/webassembly.html for more details.
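As a rough idea of what that configuration looks like, here is a minimal build.sbt fragment. It assumes the sbt-scalajs plugin is already added to the project, and the setting names are taken from the Scala.js WebAssembly documentation linked above as I understand it. The backend is experimental, so check that page for the current options and for the Node.js flags needed to run the output.

```scala
// build.sbt (fragment): a sketch, not a complete build definition
enablePlugins(ScalaJSPlugin)

scalaJSLinkerConfig := {
  scalaJSLinkerConfig.value
    .withExperimentalUseWebAssembly(true) // switch the linker to the Wasm backend
    .withModuleKind(ModuleKind.ESModule)  // the Wasm backend emits ES modules
}
```

With this in place, the usual fastLinkJS / fullLinkJS tasks should produce a Wasm binary together with a small JavaScript loader.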

In this section, we will explain the benefits of compiling Scala to Wasm for JS environments.

Performance Improvements

WebAssembly is designed for high-speed execution and claims near-native performance. By compiling Scala programs to Wasm, we can expect better execution performance compared to compiling to JavaScript.

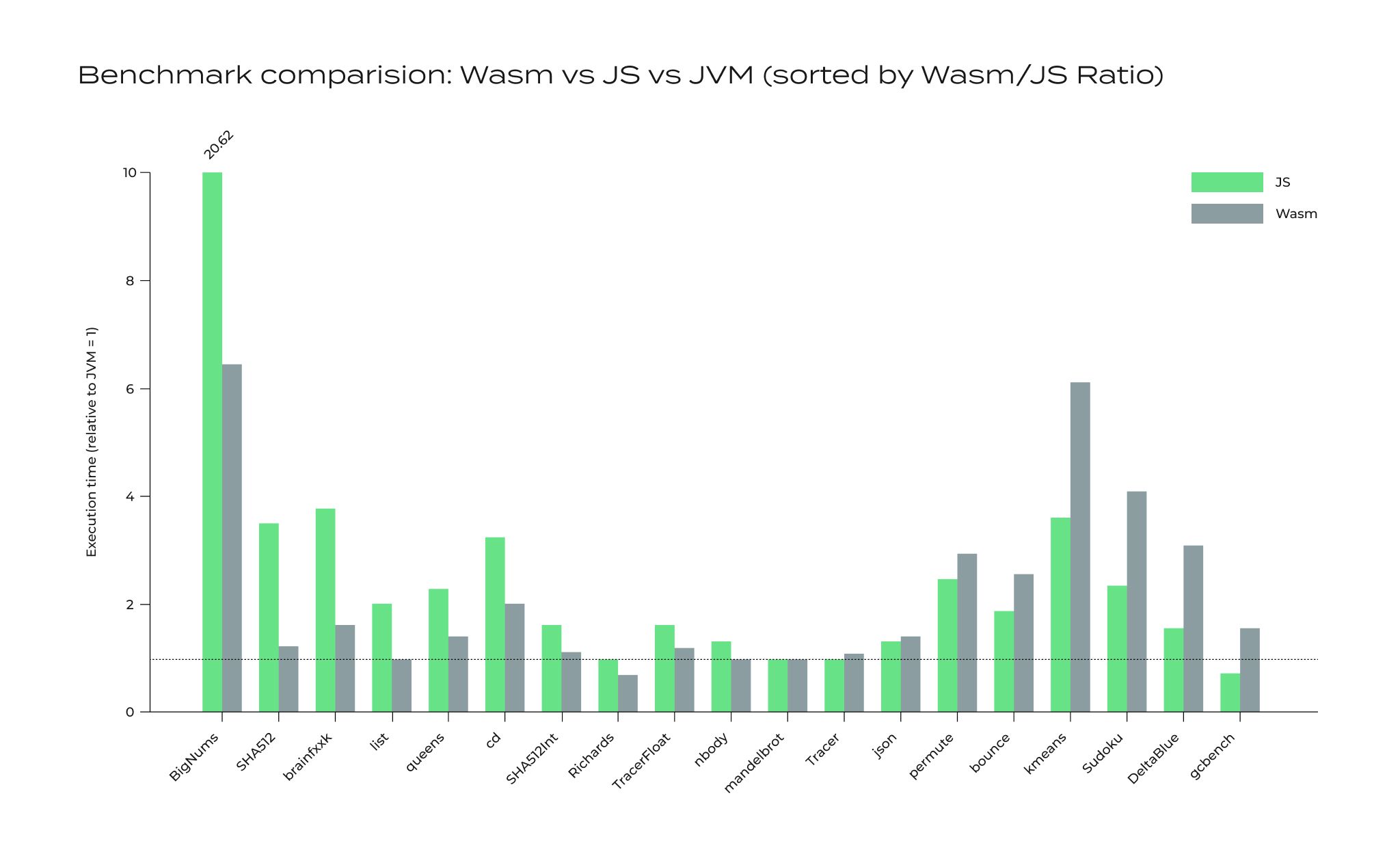

The chart shows benchmark results from Marr et al.'s cross-language benchmarks executed with Scala.js.

The horizontal line at 1.0 represents the JVM execution baseline, and each bar shows the execution time of the Wasm or JS build of a benchmark relative to the JVM. Lower bars indicate faster execution. As the chart demonstrates, the Wasm versions outperform the JavaScript versions in most benchmarks, in some cases by up to 3.3x.

Note that Wasm performance depends heavily on application properties. For instance, function calls from Wasm to JS are slow, so applications with frequent DOM operations may favor JavaScript. On the other hand, Wasm is great at computationally intensive tasks like cryptographic calculations and image processing.

Wasm-Based Async

Another advantage of compiling Scala to Wasm is the ability to leverage new features provided by Wasm. For example, JSPI (JavaScript Promise Integration) is a specification for efficiently handling JavaScript Promises from Wasm, and Scala.js 1.19.0 provides the Orphan await feature utilizing JSPI. This feature allows js.await calls to be separated from js.async blocks, enabling more flexible asynchronous programming.
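To make the idea of an orphan await concrete, here is a small sketch. It only works when compiled with Scala.js to Wasm on an engine with JSPI enabled, and the import name `js.wasm.JSPI.allowOrphanJSAwait` is written from my recollection of the Scala.js 1.19.0 release notes, so treat it as an assumption and verify it there. The object and method names are hypothetical.

```scala
import scala.scalajs.js
// Assumption: this import is what permits js.await outside a lexical js.async block.
import scala.scalajs.js.wasm.JSPI.allowOrphanJSAwait

object OrphanAwaitSketch {
  // js.await is NOT lexically enclosed in js.async here (an "orphan" await).
  // It still has to be called, at run time, from within some js.async block.
  def awaitAndDouble(p: js.Promise[Int]): Int =
    js.await(p) * 2

  def main(args: Array[String]): Unit = {
    // The enclosing js.async lives in the caller, not in awaitAndDouble.
    js.async {
      val doubled = awaitAndDouble(js.Promise.resolve[Int](21))
      println(doubled)
    }
  }
}
```

The point of the sketch is the separation: helper methods can suspend on a Promise without every layer of the call chain being rewritten into js.async blocks.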

Using this JSPI functionality, gears, an async library for Scala 3, now supports Scala.js on Wasm. The following async code should work on Scala.js once the new version is released.

Compiling Scala to Wasm for Standalone Wasm Environments

In addition to generating Wasm binaries for JavaScript environments, we're working on Wasm binaries for non-JS environments (so-called "server-side Wasm") at https://github.com/scala-wasm/scala-wasm.

What are the differences between these standalone Wasm binaries and the ones generated for a JS environment, and what are the key advantages of "server-side Wasm"?

WASI (WebAssembly System Interface)

The primary distinction lies in how the WebAssembly module interacts with the "outside world."

JS Environment Wasm

It's designed to run inside web browsers or Node.js. To access system resources like files, clocks, or the network, it relies on functions imported from the JavaScript environment.

Non-JS Environment Wasm (with WASI)

WASI is a standardized API that allows Wasm modules to communicate with the outer world in a secure and portable way.

Wasm provides a sandbox environment for safely executing "untrusted code." Wasm offers an execution environment based on the principle of least privilege, where by default, Wasm modules cannot access variables outside their memory space or perform any system operations like file I/O or HTTP requests.

Such system call access is performed through WASI, a standardized interface for system operations. Wasm runtimes like wasmtime provide these WASI interfaces, and by utilizing WASI on the language side, we ensure portability for the Wasm module across different runtimes.

Capability-Based Security

Furthermore, this standardization enables a capability-based security model. Runtimes like wasmedge don't provide Wasm modules with permissions like file access by default. When a program needs to access specific files, the runtime must explicitly grant access to the host filesystem, preventing unintended resource access and enabling secure program execution.

For instance, when foo.wasm reads a file and prints its content, it cannot access the host filesystem unless the runtime is started with a --dir option that grants access to a host directory.

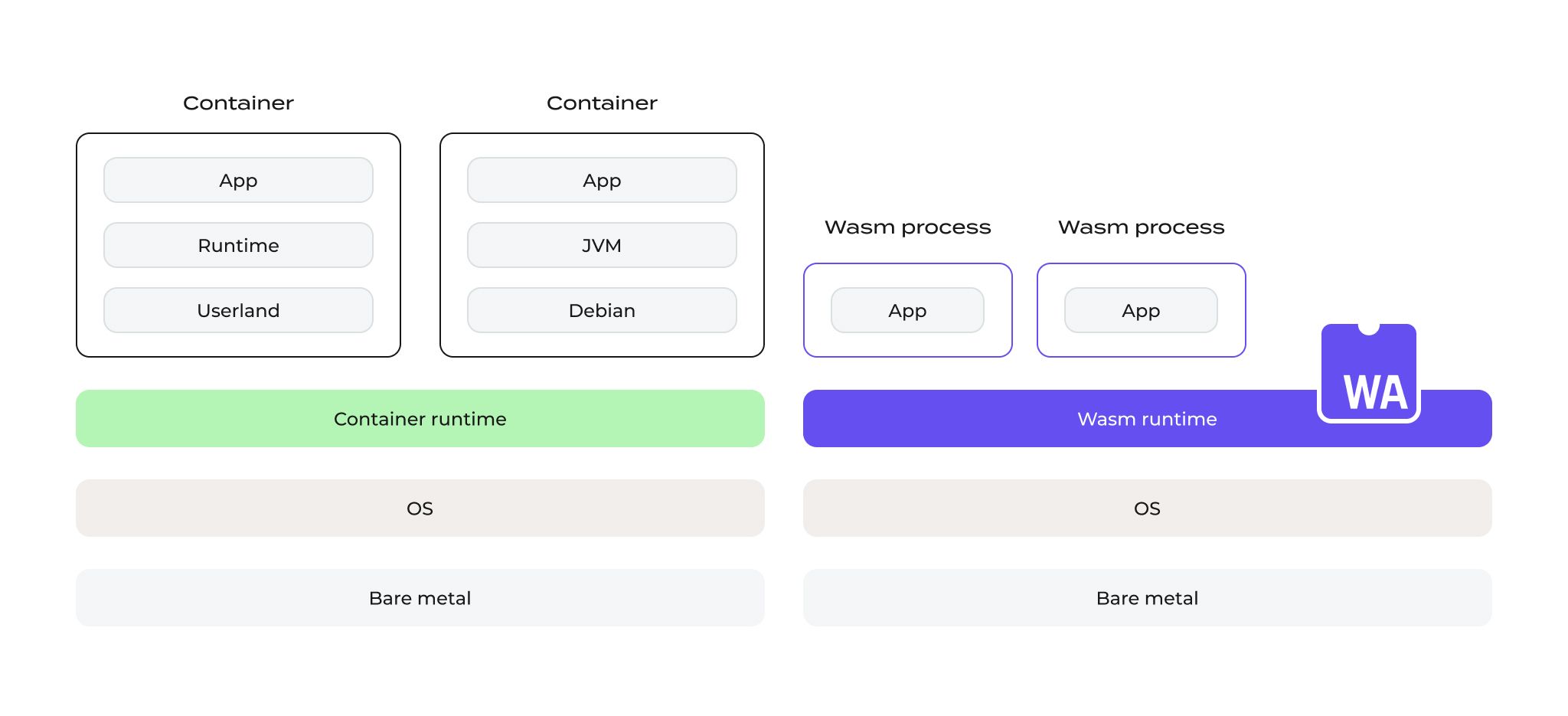
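The rule "no access unless a directory was explicitly granted" can be modeled in a few lines of plain Scala. This is only a conceptual sketch of the capability check, not the WASI API or any runtime's implementation; the `Sandbox` class and the example paths are hypothetical.

```scala
import java.nio.file.{Path, Paths}

// Conceptual model only: a sandbox that knows nothing about the host
// filesystem except the directories it was explicitly handed at startup.
final class Sandbox(preopened: Set[Path]) {
  private val granted: Set[Path] = preopened.map(_.toAbsolutePath.normalize)

  // A path is accessible only if, after normalization, it lies under a granted directory.
  def canAccess(path: String): Boolean = {
    val target = Paths.get(path).toAbsolutePath.normalize
    granted.exists(dir => target.startsWith(dir))
  }
}

object CapabilityDemo {
  def main(args: Array[String]): Unit = {
    val noGrants = new Sandbox(Set.empty)              // like running without any --dir
    val withWork = new Sandbox(Set(Paths.get("/work"))) // like granting a single directory

    println(noGrants.canAccess("/work/input.txt"))      // denied: nothing was granted
    println(withWork.canAccess("/work/input.txt"))      // allowed: under the granted directory
    println(withWork.canAccess("/work/../etc/passwd"))  // denied: normalizes to a path outside /work
  }
}
```

The default is the empty set of grants, which is what makes this a least-privilege design: access is something the host adds, never something the module has to be denied.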

Comparison with Container Technology

Now that we've covered Wasm/WASI, let's take a quick look at the other key technology: OCI containers such as Docker containers. They package the OS userland and language runtimes as container images and isolate resources between containers. However, the more packages a container image includes, the larger it becomes, leading to slower cold starts and increased security risks.

In contrast, Wasm runtimes themselves handle resource isolation responsibilities, separating resources between Wasm processes. Compared to Linux container images, Wasm binaries are much smaller, start faster, and achieve lightweight resource isolation.

In fact, several tools have been released for deploying Wasm binaries without using Linux containers:

- runwasi: A low-level container runtime for directly executing Wasm workloads without Linux containers.

- wasmCloud: A platform for deploying and orchestrating Wasm workloads on Kubernetes.

- hyperlight-wasm: Executes Wasm workloads on ultra-lightweight VMMs, stripping away features required by regular VMs for extremely fast guest OS startup.

Wasm vs. Containers in Scala

To see this in practice, consider a HelloWorld application generated from Scala.js for Wasm/WASI. The resulting binary is 124 KB and can be deployed directly without a Linux container. The cold start time is significantly shorter than launching a container; in our environment (Ubuntu 24.04.2, i7-8750H x 12 processor, 16 GB RAM), it ran from start to completion in about 20 ms.

On the other hand, a HelloWorld application built for the JVM and packaged with Alpine resulted in an image size of about 68 MB, with a cold start to completion time of approximately 1 second.

When built into a native binary with Scala Native and packaged on a distroless base, the image size was about 3.6 MB, and the cold start to completion time was about 600 ms.

| Method | Final Size | Cold Start Time |
| --- | --- | --- |
| Scala.js (Wasm/WASI) | 124 KB | ~20 ms |
| Scala Native (Distroless Container) | ~3.6 MB | ~600 ms |
| JVM (Alpine Container) | ~68 MB | ~1000 ms (1 s) |
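To put the measurements in relative terms, the short program below computes how much larger and slower each container build is compared to the Wasm/WASI binary. The figures are the approximate values reported in this section; the conversion assumes 1 MB = 1000 KB, and the `Build` type is just a helper for this illustration.

```scala
object ColdStartComparison {
  // Approximate figures reported in this section; sizes assume 1 MB = 1000 KB.
  final case class Build(name: String, sizeKB: Double, coldStartMs: Double)

  val wasm: Build   = Build("Scala.js (Wasm/WASI)", 124.0, 20.0)
  val native: Build = Build("Scala Native (distroless container)", 3600.0, 600.0)
  val jvm: Build    = Build("JVM (Alpine container)", 68000.0, 1000.0)

  // How many times larger `other` is than `base`.
  def sizeRatio(other: Build, base: Build): Double = other.sizeKB / base.sizeKB

  // How many times longer `other` takes from cold start to completion than `base`.
  def startRatio(other: Build, base: Build): Double = other.coldStartMs / base.coldStartMs

  def main(args: Array[String]): Unit =
    for (b <- List(native, jvm)) {
      println(f"${b.name}: ${sizeRatio(b, wasm)}%.0fx larger, ${startRatio(b, wasm)}%.0fx slower to start")
    }
}
```

On these numbers the Scala Native image is roughly 29 times larger and 30 times slower to start, and the JVM image is roughly 550 times larger and 50 times slower to start. These are single HelloWorld measurements on one machine, so they indicate an order of magnitude rather than a precise benchmark.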

These application builds are available at the links below:

- https://github.com/scala-wasm/scala-wasm/tree/c6db656dec6d68ae7fa4996a5711f0c75e47bad6/examples/helloworld-wasi

- https://github.com/tanishiking/thin-scala-container

- https://github.com/tanishiking/thin-scala-native-container

Wasm Component Model: Secure Language-Agnostic Interoperability

Another crucial technology for Wasm in server environments is the Wasm Component Model. This specification enables safe and seamless function calls between Wasm modules written in different languages.

Cross-Language Integration

This mechanism enables scenarios like compiling a feature written in Rust as a Wasm component, then seamlessly calling it from Scala. To achieve this integration, developers typically use a tool called wit-bindgen to automatically generate "glue code" (binding code) for each language from WIT definitions. WIT is an IDL (Interface Definition Language) for defining interfaces between components using high-level data types like string, list, variant, and option. (Unfortunately, wit-bindgen doesn't support Scala yet, so we need to write those bindings manually.)

For instance, the following WIT, which defines a greet function, will generate the following Rust function or (in the future) Scala program.
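As a rough illustration of the correspondence, the sketch below shows a WIT interface with a greet function (in the comment) and a plain Scala trait of the shape such a binding might take. Since no binding generator exists for Scala yet, the package name, the trait, and the type mapping are all hypothetical, and `LocalGreeter` is only a stand-in for an implementation that would really come from another component.

```scala
object GreeterSketch {
  // Assumed WIT definition (hypothetical package name):
  //
  //   package example:hello;
  //
  //   interface greeter {
  //     greet: func(name: string) -> string;
  //   }
  //
  // A plausible Scala view of that interface: WIT `string` maps to String.
  // Similarly, one would expect `option<T>` to map to Option[T] and
  // `variant` to a sealed trait, but no official mapping exists yet.
  trait Greeter {
    def greet(name: String): String
  }

  // Stand-in implementation so the sketch runs on its own.
  object LocalGreeter extends Greeter {
    def greet(name: String): String = s"Hello, $name!"
  }

  def main(args: Array[String]): Unit =
    println(LocalGreeter.greet("Scala"))
}
```

The value of the IDL is that callers program against the `Greeter` shape only; whether the implementation is written in Rust, Scala, or another language is decided when the components are composed.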

Calling between Scala and Rust

As an example, let's try calling Rust's ferris-says library from Scala.

First, we define a WIT file that specifies the interface between Scala and Rust. The greeter interface defines a greet function. The Rust "world" will provide the implementation of this interface, while the Scala "world" will consume the externally defined greeter interface.

The Rust implementation looks like this. It simply takes the input string, passes it to the ferris-says function, and returns the resulting string.

On the Scala side, the @ComponentImport annotation maps the greet function to an external function call via the Component Model. The run function, which serves as the main entry point in WASI, then calls this imported greet function.

Build both projects into Wasm Component Model binaries and then use the wac command to compose them. Finally, let's execute the generated Wasm binary with wasmtime.

Awesome! We have now called a function defined in Rust from Scala. While we used Scala and Rust here, you can make cross-language function calls not just with Rust, but with any language that supports the Component Model.

The full code example is available here: https://github.com/scala-wasm/scala-wasm/tree/c6db656dec6d68ae7fa4996a5711f0c75e47bad6/examples/helloworld-component-model

WASI and Component Model

Importantly, the current WASI (from preview2 onward) is defined based on this Component Model. Check out the WIT definitions for WASIp2 at https://github.com/WebAssembly/WASI/tree/8a69f1ed6ce7bfd3cfe72270b787d4d4598b721d/wasip2

For example, the WASI function get-stdout for obtaining standard output is defined like below.

This means that once Component Model support is complete, WASI will automatically be available.

Remaining Challenges for Server-Side Wasm in Scala

Several key challenges remain for Server-Side Wasm in Scala:

Component Model Implementation: The Wasm Component Model support is still in PoC, and wit-bindgen isn't yet available for Scala.js.

JavaScript Dependency Elimination: Current Scala.js-generated Wasm binaries depend on JavaScript, particularly for JDK APIs. These must be rewritten to use pure Scala or WASI instead. The Scala.js test suite has approximately 6,000 tests, with around 4,000 currently passing on server-side Wasm.

Third-Party Library Compatibility: The biggest challenge is that third-party libraries using JS interop for HTTP requests, filesystem access, async operations, or optimizations will require rewrites to use WASI. Libraries depending only on the Java standard library and the Scala library will work without modification, but any JS-dependent code needs migration.

Future Prospects

The Wasm ecosystem is still evolving. As the following proposals become available, Scala on Wasm will become even more compelling:

- Stack Switching: It allows languages to efficiently implement lightweight threads (fibers) similar to Project Loom on JVM.

- Shared-Everything-Threads: This proposal brings full-fledged multithreading to Wasm. Unfortunately, the current Wasm threads proposal doesn't allow programs using WasmGC (like Scala.js) to utilize threads. This proposal, however, would unlock multithreading for GC-based languages like Scala.

Summary

Wasm has evolved beyond browsers into cloud and edge computing, and Scala.js (since v1.17) compiles Scala directly to Wasm, offering up to 3.3x performance improvements over JavaScript in some benchmarks. We're working on WASI support for Scala.js at https://github.com/scala-wasm/scala-wasm, so that Scala programs can run securely on standalone runtimes with faster cold starts and smaller sizes than traditional containers. As proposals like stack switching and shared-everything-threads mature, Scala on Wasm will enable even more advanced concurrent programming capabilities.