When writing code in any programming language, we usually assume that its compiler or interpreter is flawless. Yet, just like any other program, it might contain subtle bugs or inconsistencies with its specification.

As the complexity of a program grows, so does the possibility of introducing unexpected behaviour. Scala has one of the most advanced type systems, providing both safety and powerful problem-solving capabilities. However, the language and its compiler are still evolving, and new features or improvements can lead to regressions in other parts of the compiler.

So what about Scala 3 and its long-term compatibility plans? You might wonder whether using the latest version of the compiler could break your current build. In this article, you’ll learn how the Scala 3 compiler team detects and prevents compiler regressions, what the Scala Community Build is, and why we have two of them: the Community Build and the Open Community Build.

“This is the first article in a Scala 3 series that we will publish soon. You can expect a follow-up on how to make your libraries work better with the Open Community Build. We will keep you updated on our social media and our blog, so keep your eyes peeled.”

How does the compiler team test Scala?

Testing the Scala compiler relies on two major components:

The first is Vulpix, an internal testing framework for code snippets. It uses a strict directory structure that splits snippets of code into distinct tests, and it runs them in parallel, based on an internal configuration that describes how each group of tests should be checked.

Vulpix lets you check whether given code compiles, whether the expected error messages are reported, or whether the program produces the expected runtime output. It also contains minimized reproductions of fixed issues that users have reported. We treat this part as a kind of unit testing: the code snippets are narrow, each designed to check a single feature of the language or a minimized bug that users have found.

The second is the Community Build, developed by Lightbend as a testing ground for Scala 2 and later adapted for Scala 3. Its main purpose is to compile and test real-life projects or, to be precise, a selection of popular Scala libraries.

Currently, every change to the compiler is tested against 73 projects, including Akka, Cats Effect, ScalaTest, and ZIO. The Community Build can be treated as an integration test for the Scala compiler.

Unfortunately, both test frameworks have some drawbacks.

Vulpix tests are limited to already reported issues or corner cases that compiler developers submit when introducing features.

Scaladex currently lists 1066 published Scala 3 libraries. However, the Community Build tests only 73 of the most commonly used ones, which means we can detect possible regressions in less than 7% of open-source projects (73 / 1066 ≈ 6.8%). What’s worse, the Community Build needs active maintenance. As a result, it doesn’t use the latest stable version of a project, but rather a fork of the library that might be outdated or patched by compiler developers.

Projects might also need additional configuration, or have spuriously failing tests ignored, to avoid false alarms. On top of that, the overall number of included projects has to be limited to ensure that CI finishes in a reasonable amount of time; otherwise, it could block the progress of compiler engineers. That’s why the Community Build includes only the most commonly used Scala libraries.

Increasing the coverage of the Scala 3 Community Build

We believe that real-life projects are the best way of testing a language. The ideas, coding styles, and approaches to solving problems introduced by the Scala community are a great resource for compiler engineers. Wouldn’t it be great if we could reuse the millions of lines of Scala code found in public repositories? That was exactly what we thought at VirtusLab when developing the Open Community Build. It’s the next step in testing the Scala 3 compiler!

The main idea behind the new Community Build is to build as many Scala 3 projects as possible, using their latest released versions and the latest version of the compiler. The built projects publish their artifacts to a private repository, so that they can be reused when building downstream projects.

Even though it might seem trivial, it comes with a long list of problems that need to be solved:

- How to list candidate projects

- How to manage the limited resources

- How to limit maintenance

SIDE NOTE

Currently, with the Open Community Build, we’re able to test new versions of the compiler with almost 900 Scala 3 libraries. This covers ~83% of open-source Scala 3 libraries. The launch of the Open Community Build was one of the reasons Scala 3.2.0 was delayed, as it uncovered a large number of regressions.

How does Open Community Build work?

Note: from now on, I will refer to the Open Community Build as Open CB.

Infrastructure and general flow

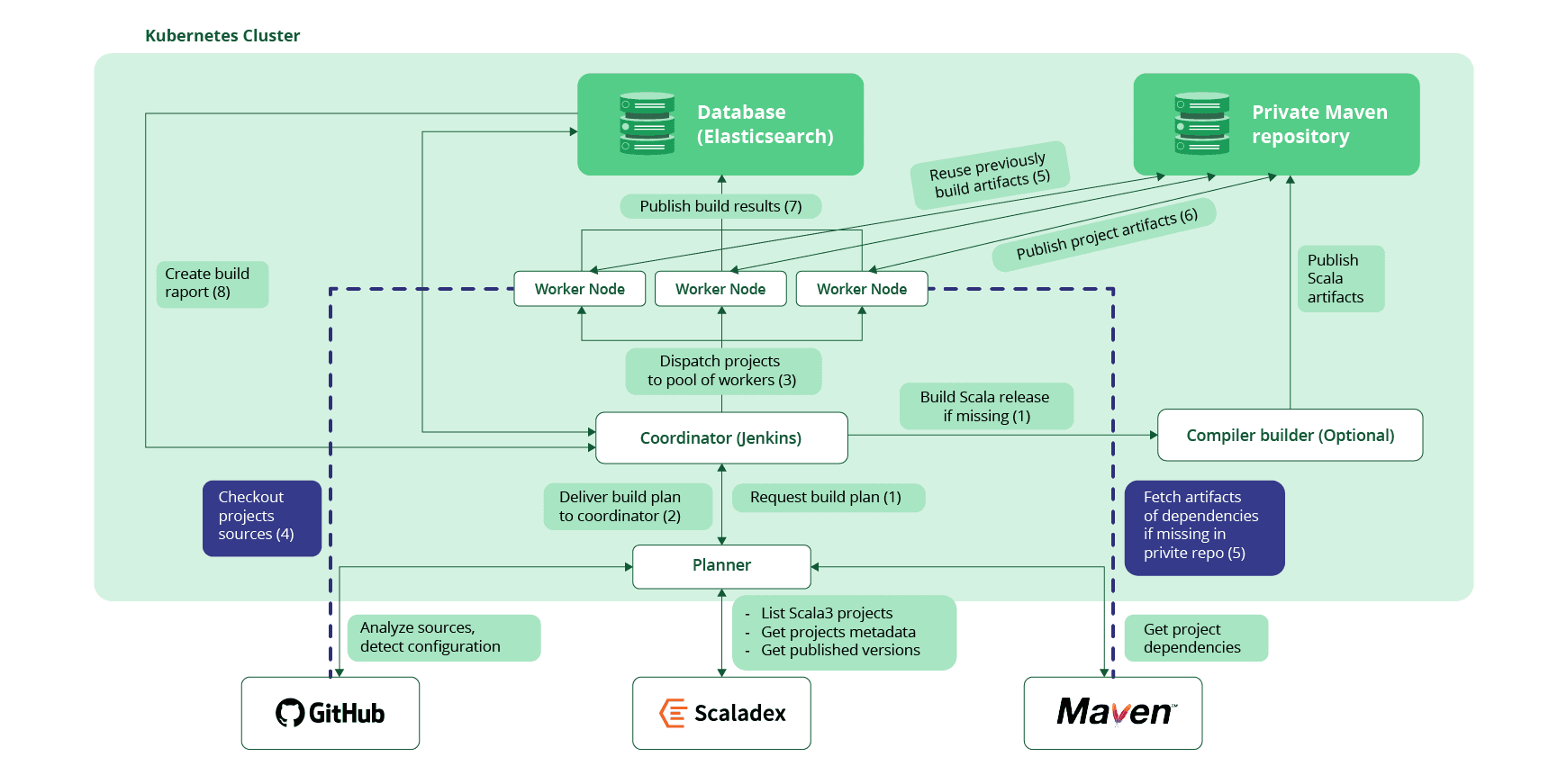

Open CB is based on a few core services running in a Kubernetes cluster. Here is a basic overview:

- Coordinator node – the main hub of the build, responsible for dispatching tasks, collecting results, and retrying builds in case of unexpected failures. We use a Jenkins instance managed by Carthago, a commercial version of the Jenkins Operator. Currently, it also serves as the frontend for builds.

- Planner – a service that creates a detailed plan of the build. It lists candidate projects, builds their dependency graph, selects the revision of each project to use, and discovers a project’s configuration if it is missing. The coordinator starts it ad hoc.

- Custom Maven repository – stores the artifacts of builds for later use by their dependent projects.

- Compiler builder – builds the compiler and the standard library from sources when testing an unpublished version of Scala, e.g. when testing possibly breaking changes on a specific branch.

- Worker nodes – prepare project sources for the build tool, and compile and test selected subprojects. They publish build artifacts to the private Maven repository and the results of the build to the database.

- ElasticSearch cluster – a database for long-term storage of build logs and results.

The previous Community Build runs on every commit to the Scala compiler. The Open CB takes a different approach, which decouples it from the compiler’s main CI process. A typical Open CB run starts either manually from the Jenkins dashboard or with a dispatch event after a nightly version of the Scala compiler is published. The low frequency of builds allows us to increase the number of tested libraries.

Open CB’s dashboard allows setting the parameters of a build. The most important ones select the branch of the compiler, filter projects, or modify their default configuration. The arguments set in the dashboard are used to create a new build plan, taking into account information about projects retrieved from Scaladex, Maven, and GitHub. The resulting build plan contains a list of execution stages based on the dependencies between projects: projects in stage “N+1” have all their dependencies already built in stage “N” or earlier.

The coordinator dispatches the work to the nodes available in the Kubernetes cluster, where the projects are built and tested. The workers then publish the projects’ artifacts for later use and index the results and logs of each build in the database. After all the stages are completed, the coordinator generates a build report with a quick summary of failures.
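To illustrate the stage-by-stage flow, here is a minimal Scala sketch of the coordinator loop. It assumes a hypothetical `buildOnWorker` function that dispatches one project to a worker and eventually returns a hypothetical `BuildResult`. The real coordinator is a Jenkins instance, so this only models the ordering guarantees, not the actual implementation.

```scala
import scala.concurrent.{Await, ExecutionContext, Future}
import scala.concurrent.duration.Duration

// Hypothetical result of building a single project on a worker node.
final case class BuildResult(project: String, succeeded: Boolean)

// Runs stages one after another; projects within a stage are built in parallel.
// `buildOnWorker` is a stand-in for dispatching a project to a worker node.
def runStages(
    stages: Seq[Seq[String]],
    buildOnWorker: String => Future[BuildResult]
)(using ExecutionContext): Seq[BuildResult] =
  stages.flatMap { stage =>
    // Start every build in the stage at once...
    val inFlight = Future.sequence(stage.map(buildOnWorker))
    // ...and block until the whole stage is done before starting the next one.
    Await.result(inFlight, Duration.Inf)
  }
```

Waiting for a whole stage before starting the next one is what guarantees that artefacts published in stage N are available to every project in stage N+1.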

In the next section, we’ll go into detail.

How to create a build plan

Below, we list the steps needed to create such a build plan, along with some of the issues we faced and their solutions.

List candidate projects

Within the Scala community, there is only one accurate listing of Scala projects: Scaladex. Unfortunately, it doesn’t have a decent API for retrieving the list of projects. That’s why we had to use a web crawling library to extract the list of published Scala 3 libraries targeting the JVM, along with their popularity based on GitHub stars.

In most cases, we don’t have enough resources to build all available libraries: a full build can take up to 20 hours on a 3-node cluster. Unless we’re testing a release candidate or possibly breaking changes, we select only a limited number of projects, for example, the top 100 by popularity. In some cases, we also require a minimum number of GitHub stars to exclude virtually unknown libraries.
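Selecting candidates boils down to a filter and a sort. The following sketch assumes a hypothetical `Candidate` type holding the data scraped from Scaladex; `maxProjects` and `minStars` correspond to the limits described above, with illustrative names.

```scala
// Hypothetical shape of a project scraped from Scaladex.
final case class Candidate(organization: String, repository: String, stars: Int)

// Keeps the `maxProjects` most popular candidates, optionally dropping
// projects with fewer than `minStars` GitHub stars.
def selectCandidates(
    all: Seq[Candidate],
    maxProjects: Int,
    minStars: Int = 0
): Seq[Candidate] =
  all
    .filter(_.stars >= minStars)
    .sortBy(c => -c.stars) // most popular first
    .take(maxProjects)
```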

Select the project revision

The names of projects listed in Scaladex always match their repository coordinates on GitHub, which makes it easy to find their sources. However, in most cases, we don’t want to build projects from the latest revision of the default branch, as it might be unstable. Instead, we target the latest release.

If a project was released, we assume it compiled and passed its tests with at least one version of Scala 3. If it fails now, there’s a high probability that we have found a regression in the compiler! To find the version of the latest release, we need to retrieve information from Scaladex again. This time, however, we can use a dedicated API to get details about each project. What matters most to us are the project’s artifacts, their versions, and the corresponding release dates.

To select the correct version of the project’s sources, we introduce another assumption: in most projects, each release has a corresponding tag in the git repository. For example, release 1.2.3 has a tag with a similar name, such as v1.2.3. We use this to filter out snapshots and other untagged releases from the versions listed in Scaladex.

From the remaining versions, we select the latest one based on its release date. This way, in most cases, we build the project from exactly the same sources that were used for its latest published version. If we don’t find any matching tags, we fall back to the default branch.
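A minimal sketch of this revision selection, assuming tags are named either exactly like the version or with a `v` prefix (real projects use other conventions too). `Release` is a hypothetical, simplified view of the data returned by Scaladex; returning `None` means falling back to the default branch.

```scala
import java.time.LocalDate

// Simplified, hypothetical view of a release listed by Scaladex.
final case class Release(version: String, releaseDate: LocalDate)

// Returns the git tag of the latest tagged release, or None to fall back to
// the default branch. Snapshots and other untagged versions drop out naturally.
def selectRevision(releases: Seq[Release], gitTags: Set[String]): Option[String] =
  // Assumed tag conventions: "1.2.3" or "v1.2.3".
  def tagFor(version: String): Option[String] =
    Seq(version, s"v$version").find(gitTags.contains)
  releases
    .flatMap(r => tagFor(r.version).map(tag => (r.releaseDate, tag)))
    .maxByOption(_._1.toEpochDay) // the latest release date wins
    .map(_._2)
```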

Sometimes, projects don’t create tags at all, or their tags don’t contain version numbers. It’s also possible that a project was moved to a different repository without updating its build metadata. This results in building a project from incorrect, outdated, or incompatible sources. We fix these issues by explicitly overriding the repository and the branch that should be used.

At this point, some projects might be filtered out based on an internally maintained list of libraries with known problems. Reasons include incompatible build layouts, failing compilation with any version of Scala, using an unsupported build tool, or the library not being a Scala project at all. Currently, this manual configuration is sufficient for us, but we plan to automate the process for frequently failing projects in the future.

NOTE

By default, we always run the tests of built projects to make sure that the behaviour of programs is stable across all versions of Scala. However, some projects require external infrastructure for their tests, and we aren’t able to provide dedicated databases or allow the use of test containers. For these reasons, we might need to filter tests through the build tool or disable test execution entirely. We can do this through dedicated entries in the project’s configuration.

Collect subprojects to build

Most of the tested projects use multi-project builds, which can cause problems for several reasons. For one, only some modules might have been migrated to Scala 3, or some might be used only internally. In both cases, we have no assurance that they ever compiled successfully, which might lead to unexpected failures when building subprojects. To mitigate this issue, we limit the scope of the build to subprojects that have published an artefact.

Most of this work is handled later by the worker nodes, based on a list of targets produced by the planner. To create it, we use the list of artefacts and their corresponding release versions retrieved from Scaladex in the previous step. The target list is then stored in an easy-to-decode textual format and consumed by the build tool in the following steps.
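As an illustration of such a textual format, the sketch below keeps only Scala 3 JVM artefacts and encodes them as space-separated `organization%name%version` entries. Both the `Artefact` type and the `%`-separated encoding are assumptions made for this example, not necessarily the format Open CB uses.

```scala
// Hypothetical view of an artefact published by a project, as listed by Scaladex.
final case class Artefact(organization: String, name: String, version: String)

// Keeps Scala 3 JVM artefacts (suffix "_3", excluding Scala.js and Scala Native
// ones) and encodes them as space-separated "organization%name%version" targets.
def encodeTargets(artefacts: Seq[Artefact]): String =
  artefacts
    .filter(a => a.name.endsWith("_3") && !a.name.contains("_sjs") && !a.name.contains("_native"))
    .map(a => s"${a.organization}%${a.name.stripSuffix("_3")}%${a.version}")
    .distinct
    .mkString(" ")

// Decodes the list back into (organization, name, version) triples.
def decodeTargets(encoded: String): Seq[(String, String, String)] =
  encoded.split(' ').toSeq.filter(_.nonEmpty).map { target =>
    target.split('%') match
      case Array(org, name, version) => (org, name, version)
      case _ => throw IllegalArgumentException(s"Malformed target: $target")
  }
```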

Create a project’s dependency graph

One of the core ideas behind the Community Build was to reuse built libraries and make sure they are correctly consumed. Admittedly, we cannot guarantee that downstream projects actually use the published version of a library. However, the popularity of automatic dependency updates with tools like Scala Steward increases the probability that they use the latest versions.

As stated above, projects are split into stages that run sequentially, and each stage contains projects that can safely be built at the same time. To compute these stages, we first need to create a dependency graph of all the projects.

All libraries follow the same dependency management system: they publish POM files describing the project’s metadata, as well as the artefacts it depends on. Thanks to the information collected from Scaladex, we can look up the POM files stored in the public Maven repository and read their XML content.
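Extracting direct dependencies from a POM needs nothing beyond the JDK’s XML parser. The sketch below is a simplified version that assumes a well-formed POM, skips test-scoped dependencies (they don’t affect downstream consumers), and ignores entries under `<dependencyManagement>`; `Dependency` is a hypothetical type.

```scala
import java.io.ByteArrayInputStream
import java.nio.charset.StandardCharsets
import javax.xml.parsers.DocumentBuilderFactory
import org.w3c.dom.Element

// Hypothetical representation of a dependency declared in a POM file.
final case class Dependency(groupId: String, artifactId: String, version: String)

// Text content of the first direct child element named `tag`, or "" if absent.
def childText(parent: Element, tag: String): String =
  val children = parent.getChildNodes
  (0 until children.getLength)
    .map(i => children.item(i))
    .collectFirst { case e: Element if e.getTagName == tag => e.getTextContent.trim }
    .getOrElse("")

// Direct, non-test dependencies declared under <project><dependencies>.
def pomDependencies(pomXml: String): Seq[Dependency] =
  val document = DocumentBuilderFactory
    .newInstance()
    .newDocumentBuilder()
    .parse(ByteArrayInputStream(pomXml.getBytes(StandardCharsets.UTF_8)))
  val nodes = document.getElementsByTagName("dependency")
  (0 until nodes.getLength)
    .map(i => nodes.item(i).asInstanceOf[Element])
    // Skip <dependencyManagement> entries: keep only project/dependencies/dependency.
    .filter(_.getParentNode.getParentNode.getNodeName == "project")
    .filterNot(e => childText(e, "scope") == "test")
    .map(e => Dependency(childText(e, "groupId"), childText(e, "artifactId"), childText(e, "version")))
```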

Once we have a map of dependencies for all the projects, all that’s left is to split them into non-overlapping groups that form an acyclic graph of stages. The exception is when we detect a cycle caused by incorrect build metadata: such problems need to be checked by hand, and the problematic projects removed from the build.
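Splitting projects into stages is a layered topological sort. In this minimal sketch, the input map from each project to its dependencies is assumed to be already resolved. Every iteration collects the projects whose in-build dependencies are all built; if no project is ready while some remain, the leftovers contain (or are blocked by) a cycle and are reported for manual inspection.

```scala
// Splits projects into stages: a project lands in stage N+1 only once all of
// its dependencies were placed in stage N or earlier. Dependencies that are not
// part of this build are ignored. Left contains projects stuck behind a cycle.
def buildStages(deps: Map[String, Set[String]]): Either[Set[String], List[Set[String]]] =
  val projects = deps.keySet
  // Only consider edges between projects that are part of this build.
  val inBuild = deps.map((p, ds) => p -> ds.intersect(projects))

  @annotation.tailrec
  def loop(remaining: Set[String], built: Set[String], acc: List[Set[String]]): Either[Set[String], List[Set[String]]] =
    if remaining.isEmpty then Right(acc.reverse)
    else
      val ready = remaining.filter(p => inBuild(p).subsetOf(built))
      if ready.isEmpty then Left(remaining) // no progress possible: a cycle exists
      else loop(remaining -- ready, built ++ ready, ready :: acc)

  loop(projects, Set.empty, Nil)
```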

Discover the project’s configuration

The last step of preparing a build plan is preparing each project’s configuration. This includes answering questions such as:

- Which version of JDK should be used?

- What additional build tool commands should be executed before commencing the build?

- How much memory should be allocated to workers to prevent OutOfMemory errors?

- Should the project’s tests be executed, only compiled, or excluded entirely?

All of this information can be defined in a configuration file in the HOCON format. Currently, we store all of these files internally, but in the future, we hope to move them to the libraries’ repositories to make this a true community effort.
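To give an idea of what such a configuration captures, here is a hypothetical in-memory model of the questions listed above. The field names are illustrative only and don’t reflect Open CB’s actual HOCON keys.

```scala
// How a project's tests should be handled.
enum TestsMode:
  case Full, CompileOnly, Disabled

// Hypothetical model of a project's build configuration (illustrative names).
final case class ProjectConfig(
    javaVersion: Option[String] = None,  // None: use the default JDK
    preBuildCommands: Seq[String] = Nil, // extra build tool commands to run first
    memoryRequestMb: Option[Int] = None, // memory hint for the worker container
    tests: TestsMode = TestsMode.Full,
    excludedTests: Seq[String] = Nil     // test filters passed to the build tool
):
  // Whether test sources should be compiled at all.
  def runsTestCompilation: Boolean = tests != TestsMode.Disabled
  // Whether compiled tests should also be executed.
  def runsTests: Boolean = tests == TestsMode.Full
```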

Some settings are configured automatically, based on the contents of the git repository. For example, we analyse the project’s GitHub Actions workflows to detect which Java versions it is built with, so that we can select a compatible JDK. Similarly, we detect custom Xmx settings, which tell us that we might need to allocate more memory to the worker nodes building this library.
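A rough sketch of this kind of detection, using plain regular expressions instead of a YAML parser. It looks for the `java-version` input of the `actions/setup-java` action and for `-Xmx` flags. Matrix values such as `${{ matrix.java }}` are not resolved, so treat it as a heuristic under those assumptions.

```scala
// Heuristic patterns; "1.8"-style versions are normalised to 8.
val JavaVersionPattern = """java-version:\s*['"]?(?:1\.)?(\d+)""".r
val XmxPattern = """-Xmx(\d+)([mMgG])""".r

// Java versions requested through the `java-version` input of actions/setup-java.
def detectJavaVersions(workflow: String): Set[Int] =
  JavaVersionPattern.findAllMatchIn(workflow).map(_.group(1).toInt).toSet

// Largest -Xmx value found in the workflow, normalised to megabytes.
def detectMaxXmxMb(workflow: String): Option[Int] =
  XmxPattern
    .findAllMatchIn(workflow)
    .map { m =>
      val amount = m.group(1).toInt
      if m.group(2).equalsIgnoreCase("g") then amount * 1024 else amount
    }
    .maxOption
```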

We also frequently need to prepare small patches to the sources, mostly to fix incompatibilities in the build tool configuration or small typos that lead to compilation errors. Moreover, many projects use exact Scala version literals to introduce conditional changes in the build, for instance, when defining library dependencies that might not be available for Scala 3. To plug this hole, we override version literals and variables with popular names for Scala versions defined in build files. The configured patches are applied later in the worker nodes.
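The version-literal override can be sketched as a regex replacement. The list of variable names below is a hypothetical example of such “popular names”; only literals starting with `3.` are rewritten, so Scala 2 versions in cross-built projects stay untouched.

```scala
import scala.util.matching.Regex

// Hypothetical list of identifiers that commonly hold the Scala 3 version.
val versionNames = Seq("scalaVersion", "scala3Version", "scala3", "Scala3")

// Matches e.g. `scalaVersion := "3.1.3"` or `val scala3 = "3.1.3"`; group 1
// captures everything up to the opening quote of the literal.
val scala3Literal: Regex =
  ("\\b((?:" + versionNames.mkString("|") + ")\\s*:?=\\s*)\"3\\.[^\"]*\"").r

// Rewrites matching Scala 3 version literals to `targetVersion`.
def overrideScala3Version(buildSource: String, targetVersion: String): String =
  scala3Literal.replaceAllIn(
    buildSource,
    m => Regex.quoteReplacement(m.group(1) + "\"" + targetVersion + "\"")
  )
```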

Finally, the prepared plan is stored in JSON files and passed to the coordinator, which dispatches the actual work among the worker nodes.

Execute the plan

Once the build plan is ready, the coordinator starts dispatching the actual builds to a pool of worker nodes. Each project gets a dedicated container with its own resources, whose memory size is based on the memory request hint for that build.

This approach reduces the risk of OutOfMemory errors for big projects and avoids wasting resources on small ones. From then on, Kubernetes handles resource management until all projects in a given stage are built.

Once a container starts, the project is cloned from its git repository. Based on the project’s layout, we detect which build tool needs to be used: sbt or mill. These two build tools cover 99% of the projects we deal with. Each needs special handling, which we’ll describe in detail now.
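Build tool detection can be as simple as checking for marker files in the repository root. This sketch assumes that `build.sbt` or `project/build.properties` indicates sbt and `build.sc` indicates mill; preferring sbt when both are present is an arbitrary choice made here.

```scala
import java.nio.file.{Files, Path}

enum BuildTool:
  case Sbt, Mill

// Marker files assumed to identify each build tool in the repository root.
val sbtMarkers = Seq("build.sbt", "project/build.properties")
val millMarkers = Seq("build.sc")

// Detects the build tool of a cloned project; sbt wins if both are present.
def detectBuildTool(projectDir: Path): Option[BuildTool] =
  def hasAny(markers: Seq[String]) = markers.exists(m => Files.exists(projectDir.resolve(m)))
  if hasAny(sbtMarkers) then Some(BuildTool.Sbt)
  else if hasAny(millMarkers) then Some(BuildTool.Mill)
  else None
```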

Supporting sbt builds in Scala 3

sbt is the most popular build tool for community projects. Even though developers have mixed opinions about it, it’s quite easy to work with. However, we need to make sure that the sbt version used by the project meets our minimum requirement, which is currently 1.5.5. If it doesn’t, we enforce a newer version of our choice.
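A minimal sketch of this version check, assuming the version is read from the `sbt.version` entry of `project/build.properties`. The replacement version is passed in explicitly, since the enforced version may change over time; pre-release suffixes such as `-RC1` are ignored when comparing, which is a simplification.

```scala
// Numeric components of a version, e.g. "1.5.5" -> Seq(1, 5, 5); any suffix
// such as "-RC1" is dropped.
def parseVersion(version: String): Seq[Int] =
  version.takeWhile(c => c.isDigit || c == '.').split('.').toSeq.filter(_.nonEmpty).map(_.toInt)

// Negative, zero or positive, like `compare`; missing components count as 0.
def compareVersions(a: String, b: String): Int =
  val (xs, ys) = (parseVersion(a), parseVersion(b))
  val length = xs.length max ys.length
  xs.padTo(length, 0)
    .zip(ys.padTo(length, 0))
    .collectFirst { case (x, y) if x != y => Integer.compare(x, y) }
    .getOrElse(0)

// Given the content of project/build.properties, returns the sbt version to
// enforce, or None if the declared one already meets `minimum`.
def sbtVersionOverride(buildProperties: String, minimum: String, replacement: String): Option[String] =
  val declared = buildProperties.linesIterator
    .map(_.trim)
    .collectFirst { case line if line.startsWith("sbt.version") => line.dropWhile(_ != '=').drop(1).trim }
  declared match
    case Some(v) if compareVersions(v, minimum) >= 0 => None
    case _ => Some(replacement) // missing or too old: enforce our version
```

For example, with a minimum of `1.5.5`, a project declaring `sbt.version=1.4.9` would be switched to the given replacement, while one declaring `1.6.2` would be left alone.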

Next, we apply the source patches discovered while preparing the build plan. This is an easy step, performed using regular expression replacements.

Interestingly, we don’t interact with the project directly through typical sbt commands like `compile` or `test`, as they wouldn’t allow us to collect results optimally or introduce advanced error handling. Instead, we define a custom sbt plugin, which is added to the `project` directory along with custom commands appended to `build.sbt`.

The plugin gives us access to sbt internals, especially its state and the extracted project references. We use this data to iterate over all the projects and to access and modify their settings or tasks. Based on the data retrieved from the sbt state, we map the names of the published artefacts to the actual project references. To make sure that subprojects are built in a stable order, we sort them based on their dependencies. This time, we don’t need to worry about cycles in the dependency graph: by design, it’s impossible to create them in sbt.

Thanks to its orthogonal design, sbt makes it easy to update settings directly in the plugin, for example, by editing project-level or global settings. We use this to add the endpoints of our private Maven repository and to prevent artefacts from being published to public repositories.

However, some settings need to be edited on demand. One case is a build failure that can easily be fixed with the `-source:3.x-migration` flag: we can detect it in the logs and force a retry of the build. Another is overriding `crossScalaVersions` to mitigate limitations introduced in sbt 1.7.x. These dynamic changes are handled by custom commands, which are executed before running the main build.

With all the configuration in place, we build the ordered subprojects using a dedicated command, which takes a list of targets to build and an additional configuration string. By mapping target strings to the corresponding `ProjectRef`, we access the exact `TaskKey[T]` we’re interested in, e.g. `project/Compile/compile`, which we can then evaluate using sbt’s internal API.

We evaluate up to five tasks for each project:

- `Compile/compile` – compile main sources

- `Test/compile` – compile tests, unless test compilation is disabled or the previous task failed

- `Test/test` – execute tests, unless their compilation failed or their execution is disabled

- `doc` – run Scaladoc, unless compilation failed

- `publish` – publish artefacts to the private repository to allow their usage in downstream projects, unless compilation or `doc` failed.
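The skip rules above can be modelled as a small chain of conditional evaluations. In this sketch, the hypothetical `run` function stands in for evaluating the corresponding `TaskKey` through sbt’s API, and we interpret the `publish` rule as requiring both compilation and `doc` to succeed.

```scala
enum TaskStatus:
  case Ok, Failed, Skipped

// Status of each of the five tasks for a single project.
final case class ProjectTasks(
    compile: TaskStatus,
    testCompile: TaskStatus,
    test: TaskStatus,
    doc: TaskStatus,
    publish: TaskStatus
)

// Evaluates the tasks in order, skipping those whose prerequisites failed or
// that are disabled by the project's configuration.
def evaluateTasks(
    run: String => Boolean,
    testCompilationEnabled: Boolean,
    testsEnabled: Boolean
): ProjectTasks =
  import TaskStatus.*
  def eval(name: String, when: Boolean): TaskStatus =
    if !when then Skipped else if run(name) then Ok else Failed
  val compile = eval("Compile/compile", when = true)
  val testCompile = eval("Test/compile", testCompilationEnabled && compile == Ok)
  val test = eval("Test/test", testsEnabled && testCompile == Ok)
  val doc = eval("doc", compile == Ok)
  val publish = eval("publish", compile == Ok && doc == Ok)
  ProjectTasks(compile, testCompile, test, doc, publish)
```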

Evaluating each task tells us whether it succeeded, failed, or was skipped due to previous failures. Some tasks also yield additional data, which can later be post-processed to gather further information, such as:

- The number of compiled files

- The number of errors or warnings

- The size of the produced documentation, etc.

Finally, the summarised build results for all projects are collected and sent back to the coordinator. They are stored in the database for later use along with the logs of the build.

Supporting mill builds in Scala 3

With sbt, we had the flexibility of updating the build’s configuration. Mill, however, is rigid by design: the only way to set the tested version of Scala 3 is to edit the `build.sc` source file. This posed a challenge, but we came up with a decent solution.

Each `build.sc` file is both a valid Ammonite script and a valid Scala file. This allowed us to design a set of Scalafix rules that override the default definitions of the Scala version and other settings. Similarly, when we detect a `PublishModule` or `CoursierModule`, we update the versions and repositories of the produced artefacts, and when we detect a `Cross` module, we map the original cross versions to include the tested version of Scala as well. Even though this approach is not bulletproof, it works for the majority of mill projects, as they tend to have a simple structure and relatively low complexity.

After resolving the issue with the configuration of projects, we use the same pattern of execution as in sbt, including:

- Mapping artefacts to mill modules

- Arranging them by internal dependencies

- Evaluating common tasks by using a dedicated evaluator

- Collecting a summary of the build

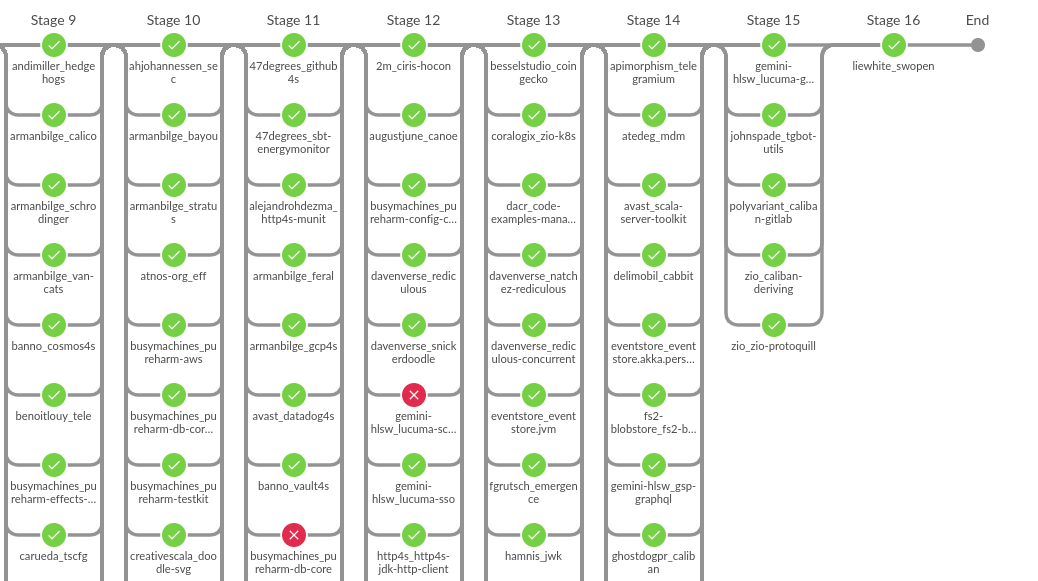

Collecting the results of projects compiled with a new Scala 3 version

Executing the full Open CB, involving all ~900 available projects, takes up to 20 hours, so verifying the results manually can be time-consuming. Jenkins generates a graph with the results of the build, but it isn’t very useful for quickly analysing the causes of failures.

Instead, at the very end of each build, we query Elasticsearch and generate a simple textual report with basic information about the build, including a list of failed projects and tasks. The database can also be used for subsequent queries of the build history, which is very useful for pinpointing when a given regression was introduced.
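As a simplified illustration, the sketch below renders such a report from results that have already been fetched from the database. The Elasticsearch query itself is left out, and `ProjectSummary` is a hypothetical type.

```scala
// Simplified per-project result, as it might be read back from the database.
final case class ProjectSummary(project: String, failedTasks: Seq[String])

// Renders a short plain-text report: totals first, then the failing projects
// with the tasks that failed, sorted by project name.
def renderReport(results: Seq[ProjectSummary]): String =
  val failed = results.filter(_.failedTasks.nonEmpty).sortBy(_.project)
  val header = s"Projects: ${results.size}, failed: ${failed.size}"
  val lines = failed.map(r => s"- ${r.project}: ${r.failedTasks.mkString(", ")}")
  (header +: lines).mkString("\n")
```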

Graphical overview of the Open Community Build results

Impact of the Scala 3 Open Community Build

We believe the Scala 3 compiler should be treated like any other external dependency: we should use its latest version whenever possible, as newer versions of Scala can bring performance improvements and security fixes. Bumping the version shouldn’t be associated with troubleshooting, especially for minor versions of Scala.

Our latest results show that at least 95% of libraries published with Scala 3.0.x or 3.1.x compile and pass their tests without any problems when using Scala 3.2.0-RC versions. Even though libraries are just the tip of the iceberg of Scala codebases, they provide a good overview of a wide range of applications.

Of course, regressions might still happen. Thanks to the broad coverage and the enormous amount of tested sources, we can detect and fix them before the next version of Scala is published. This should make migrating to the latest versions of the language smoother and make it easier to adopt its new features.

As a small heads-up, updating to newer versions might give you a bunch of new warnings. In most cases, however, they shine a light on unsafe or potentially harmful code. What’s more, most of the warnings are easy to fix and can be rewritten automatically using one of the compiler’s features.

As you can see, we’re not able to test all open-source libraries yet. Almost 17% of them had to be filtered out of the candidate lists due to misconfigured build tools, source-breaking changes, or incorrect metadata published with artefacts. In the future, we would like to decrease this number.

We’re already planning a follow-up article, in which you’ll learn how to make your build compatible with the Open Community Build with minimal effort. Including your project benefits both sides: you can be sure that updating the Scala version is safe, and the compiler team can catch bugs before they reach a release.

If your organization uses Scala 3 in proprietary code and would like to test it against changes in the compiler, please drop us an email at `scala@virtuslab.com`.