Earlier this year, we explored a simple but stubborn idea: that the same type systems that protect our applications could also guard our infrastructure. We called it TypeOps.

Our first prototype proved that principle - a small system of AWS Lambdas that deployed only if their code, configuration, and interface contracts agreed at compile time. It was a promising start, but as anyone building enterprise software knows, functions aren’t where the real complexity lives - services are.

Today, we’re taking the next step in the evolution of TypeOps: bringing type-safe integration to the level of full microservices. This generalization of the original design opens the door to something larger – a platform that can grow into an ecosystem of SDKs, plugins, and protocol connectors.

The problem of the in-between

The services we build rarely exist in a vacuum – they both offer APIs and consume the APIs of other services, and they have to work in unison. The main premise of microservices, however, was independent evolution: the freedom to deploy rapidly without expensive and difficult lockstep releases. These two realities pull our services in opposite directions and turn “the in-between” into a war zone.

As engineers, we have found a solution for this problem using languages and technologies that describe contracts and ensure their compliance, like Protocol Buffers, Smithy, OpenAPI, or Pact.

These solutions helped immensely to rein in the breakage in “the in-between” zone by offering a lingua franca for services, but they never fully eliminated the risk of things going wrong through human error. Despite the progress, these specifications live slightly beside our systems, not within them. They describe what should happen, but they don’t constrain what does.

An OpenAPI file might declare a /checkout endpoint, yet nothing stops a developer from renaming a field, forgetting to regenerate a client, or deploying an outdated image that still speaks the old dialect. Our tooling can validate payloads at runtime or flag mismatches in CI, but by then, the damage is already done - we’ve crossed from compile time into incident response.

What we wanted was a way to bring these contracts back into the type system itself - to let the compiler participate in the same verification loop that already guards our application logic. That’s what led us to extend Yaga: to make the in-between part of the type graph.

Closing the gap one OpenAPI at a time

We started with the most common lingua franca of microservices: HTTP and OpenAPI.

By moving OpenAPI contract verification into the deployment code’s compilation process – rather than leaving it as an opaque CI step - Yaga can now ensure that every service, client, and infrastructure definition still speaks the same protocol version. So how does this work in practice?

Since we are extending the mechanics known from the previous post, there must be a build tool that will orchestrate compilation, track dependencies between services, and help to extract service metadata at the correct point in time. There also has to be a technology that will allow us to easily obtain the OpenAPI specification of HTTP endpoints that any given service wishes to expose. This is where SoftwareMill’s TAPIR library shines, as it allows us to describe endpoints as data and then translate them to actual request handlers, HTTP client calls, and OpenAPI documents. Moreover, TAPIR has its own tool to compare the compatibility of OpenAPI schemas. With these capabilities, our quest for type-safe integration is greatly simplified.
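To make the "endpoints as data" idea concrete, here is a minimal sketch using TAPIR's public API. The `Product` type, the `/products/{name}` path, the `ProductsApi` object, and the dependency versions are assumptions made for illustration; they are not taken from the project described in this post.

```scala
//> using scala 3.3.4
//> using dep com.softwaremill.sttp.tapir::tapir-json-circe:1.11.0
//> using dep com.softwaremill.sttp.tapir::tapir-openapi-docs:1.11.0
// The versions above are assumptions; use whichever TAPIR release your build targets.

import sttp.tapir.*
import sttp.tapir.json.circe.*
import sttp.tapir.docs.openapi.OpenAPIDocsInterpreter

// Hypothetical payload: nutritional information for a single product.
// The JSON codec and the TAPIR schema are both derived by the compiler.
final case class Product(name: String, kcalPer100g: Double)
    derives io.circe.Codec.AsObject, Schema

object ProductsApi:
  // GET /products/{name} -> a JSON Product on success, a plain-text message on error.
  val getProduct: PublicEndpoint[String, String, Product, Any] =
    endpoint.get
      .in("products" / path[String]("name"))
      .errorOut(stringBody)
      .out(jsonBody[Product])

  // The same value can be interpreted as an OpenAPI document model.
  val openApi = OpenAPIDocsInterpreter().toOpenAPI(List(getProduct), "Products", "1.0")

@main def showContract(): Unit =
  // Prints a human-readable rendering of the endpoint description.
  println(ProductsApi.getProduct.show)
```

Because `getProduct` is an ordinary value, the same description can be handed to a server interpreter, a client interpreter, or the documentation interpreter, which is what keeps the three views of the contract from drifting apart.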

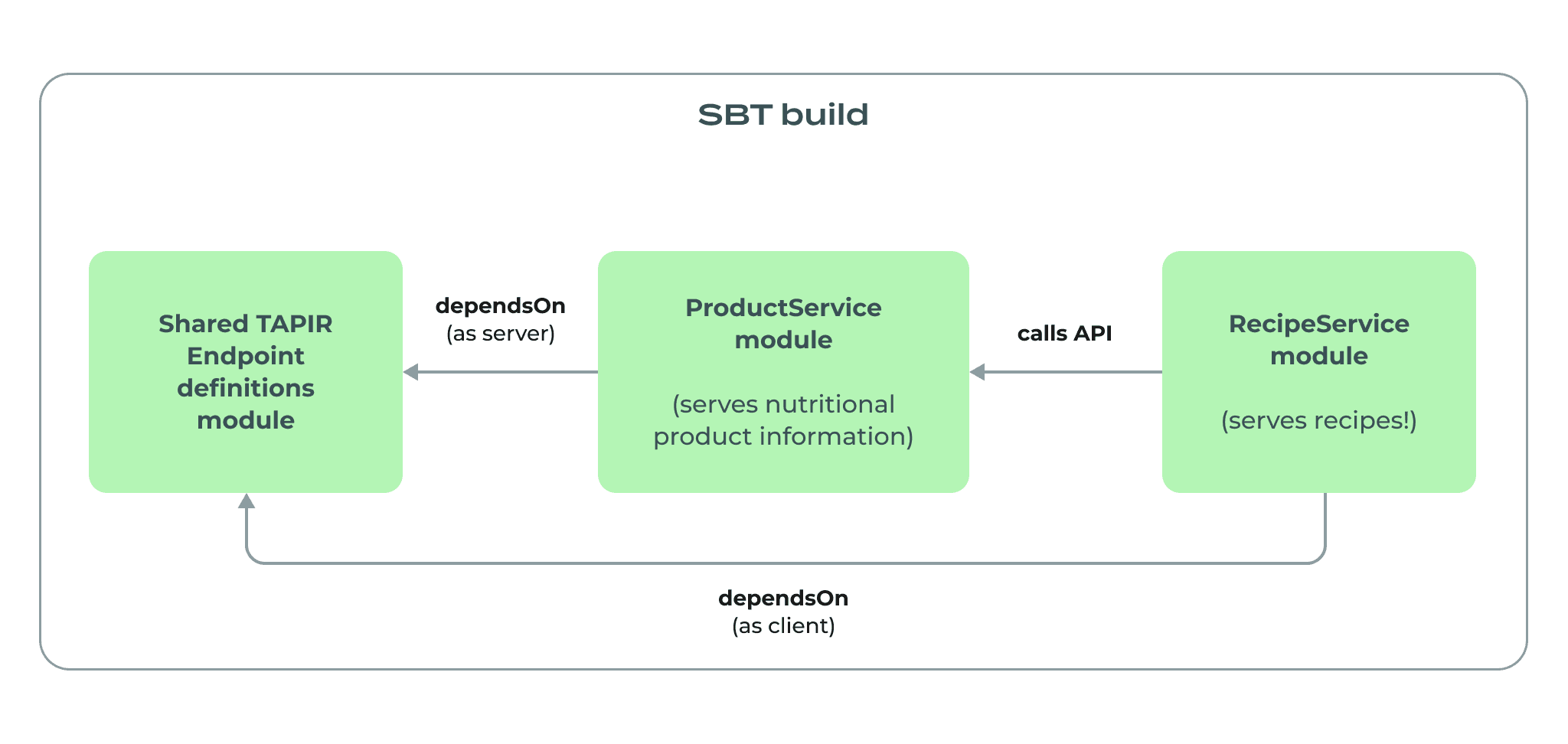

Let’s see how that works out in a small system composed of two services – a product service that provides nutritional information about various foodstuffs and a recipe service that provides cooking recipes along with the nutritional value of the complete meals. In this scenario, the recipe service is going to consume the API of the product service. The module composition in the sbt build looks like this:

This is a familiar setup for any Scala developer who has used TAPIR extensively - the endpoint definitions live in a separate module, and the services that either expose them as their API or consume that API depend on it. This is how we’d define this in build.sbt:

The shared endpoints module depends on the tapir-core library and the Circe integration, which deserializes request bodies and serializes response bodies. OpenAPI-based services depend on that module and add dependencies on the server and client interpreters that allow them to serve and consume HTTP APIs. There’s nothing out of the ordinary here, and this setup already gives us a lot of safety, as it allows developers to share the code that is used both to implement the service and to call it.
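As a rough illustration of the layout just described, a plain TAPIR build (before Yaga enters the picture) could look like the sketch below. The module names, directory names, Scala version, library versions, and the choice of the Netty sync server are assumptions, not the project's actual build.

```scala
// build.sbt (sketch): names and versions below are assumptions
val tapirVersion = "1.11.0"

ThisBuild / scalaVersion := "3.3.4"

// Shared endpoint descriptions: tapir-core plus Circe for JSON bodies.
lazy val endpoints = project
  .in(file("endpoints"))
  .settings(
    libraryDependencies ++= Seq(
      "com.softwaremill.sttp.tapir" %% "tapir-core"       % tapirVersion,
      "com.softwaremill.sttp.tapir" %% "tapir-json-circe" % tapirVersion
    )
  )

// Exposes the product endpoints over HTTP through a server interpreter.
lazy val productService = project
  .in(file("product-service"))
  .dependsOn(endpoints)
  .settings(
    libraryDependencies +=
      "com.softwaremill.sttp.tapir" %% "tapir-netty-server-sync" % tapirVersion
  )

// Serves its own API and calls the product API through a client interpreter.
lazy val recipeService = project
  .in(file("recipe-service"))
  .dependsOn(endpoints)
  .settings(
    libraryDependencies ++= Seq(
      "com.softwaremill.sttp.tapir" %% "tapir-netty-server-sync" % tapirVersion,
      "com.softwaremill.sttp.tapir" %% "tapir-sttp-client"       % tapirVersion
    )
  )
```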

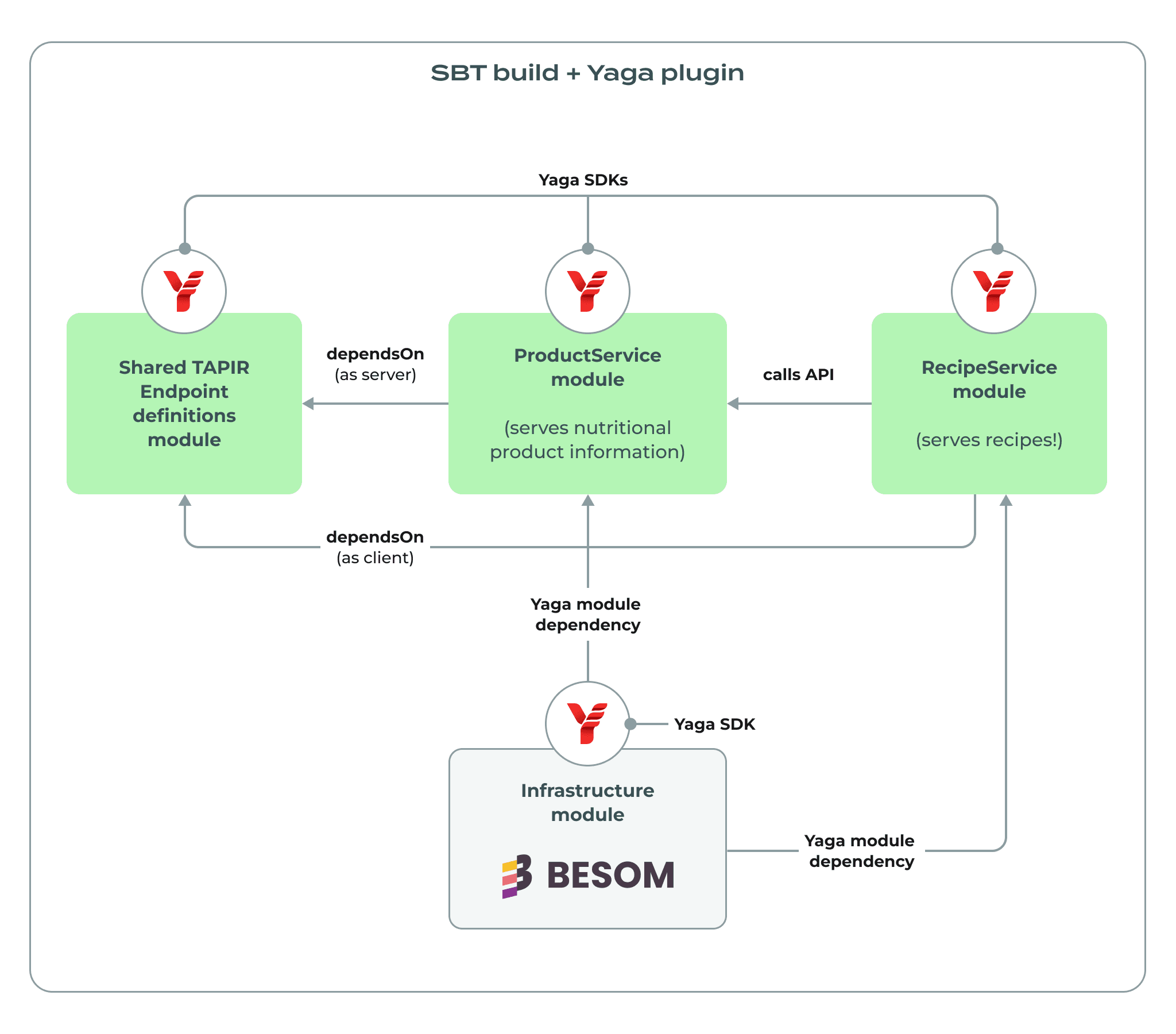

Services, however, have their own lifecycles, and it’s still up to developers to synchronize changes with deployments when evolving the API, deprecating endpoints, or modifying them. Part of that headache can be addressed in a monorepo, where incompatible changes surface in global compilation soon enough to prevent an incident, but monorepos come with their own set of problems and expenses. We can, however, improve upon this state of the art by integrating these services with the declarative infrastructure layer that defines what things will look like when deployed. This is where Yaga steps in and provides additional checks that close the remaining gaps. Let’s see how including Yaga and the Besom infrastructure layer changes our build. We start by adding the Yaga plugin for OpenAPI services and the Kubernetes runtime in project/plugins.sbt:

Then we can extend our module declarations, starting with endpoints:

The .yagaOpenApiEndpoints extension modifies the configuration of the module, allowing us to capture the endpoints as they are defined simply by adding a derives ExtractEndpoints clause to the object that holds our TAPIR endpoints:

With this, each service can publish information about the OpenAPI interface it exposes or expects. There’s not much more to do to yagify the sbt modules of our services:

The most notable inclusion is the use of .yagaOpenApiK8sService and .yagaOpenApiClient extensions and the removal of some of the general TAPIR dependencies, as they are going to be provided by Yaga. The first one allows us to select the kind of server implementation we’re looking for – in this example, the Netty-based synchronous, Loom-enabled server. The second provides a way to safely consume the OpenAPI interface through Yaga’s shim. The application’s entry point becomes:

There are two very important bits here. The first is that we define the configuration and external services this application needs in order to start. The second is that our application extends the NettySyncServerApp provided by Yaga, parameterized with our required configuration type. This trait has an abstract method called serverEndpoints that is left for the user to implement. The method receives a resolved instance of the configuration datatype, built from information injected transparently by the infrastructure. It is also expected to return a list of implemented server endpoints, which is then used to extract information about the endpoints the application actually serves.

Our required configuration contains something very interesting too: an OpenApiServiceReference parameterized with the type of the shared object containing TAPIR endpoints - ProductsEndpoints.type. Through this reference, Yaga knows that to deploy the RecipesService, one has to prove that there’s some service that fulfills this requirement on the infrastructure level. But our type-safety goes beyond that - you can use this OpenApiServiceReference to call the remote service directly without configuring the client in any way, and since it is built on the sttp library, it fits into a wide range of Scala stacks. Here’s an example:

We do this for our RecipeService too, and then we provide the images to the generated Besom component resources, which encompass the Kubernetes Deployment and Service resources for us:

These generated classes are strongly typed and mirror the requirements defined in the applications themselves. Furthermore, Yaga syncs any changes as they happen and guards the user against drift between what applications declare as a requirement and what they will receive as their configuration during the deployment. The most important bit, however, is that these two microservices are now linked together via a typed ServiceRef that fulfills the requirement of a service that will provide ProductsEndpoints. This relationship is verified for compatibility when the infrastructure layer is compiled. That gives users the flexibility to use different versions of these services in the infrastructure blueprint and still learn statically whether they are compatible with each other, before even thinking about rolling them out to production.
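The core of that link can be modeled in a few lines of plain Scala. The sketch below is not Yaga's generated code: `ServiceRef`, `RecipesDeployment`, `PaymentsEndpoints`, and `Wiring` are hypothetical stand-ins, and the model only checks that a reference points at the right API, not that two versions of that API are compatible. It does show how a phantom type parameter lets the compiler reject a wrongly wired deployment.

```scala
import scala.compiletime.testing.typeChecks

// Stand-ins for shared endpoint objects (in the article these hold TAPIR endpoints).
object ProductsEndpoints
object PaymentsEndpoints

// Hypothetical typed reference: the phantom type E records which API lives at `url`.
final case class ServiceRef[E](url: String)

// Hypothetical infrastructure component: it can only be constructed when a
// reference to a service providing ProductsEndpoints is supplied.
final case class RecipesDeployment(
    image: String,
    products: ServiceRef[ProductsEndpoints.type]
)

object Wiring:
  val productsRef = ServiceRef[ProductsEndpoints.type]("http://products:8080")
  val paymentsRef = ServiceRef[PaymentsEndpoints.type]("http://payments:8080")

  // Correct wiring compiles.
  val recipes = RecipesDeployment("recipes:1.0.0", productsRef)

  // typeChecks is evaluated by the compiler: it reports whether the snippet
  // would compile at this point in the program.
  val goodWiringCompiles: Boolean =
    typeChecks("""RecipesDeployment("recipes:1.0.0", productsRef)""")
  val badWiringCompiles: Boolean =
    typeChecks("""RecipesDeployment("recipes:1.0.0", paymentsRef)""")
```

Here `goodWiringCompiles` is `true` and `badWiringCompiles` is `false`: handing the recipes deployment a reference to the wrong service is a type error rather than a runtime surprise.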

This makes our final setup look like this:

Once we run pulumi up, our services get packaged, published as Docker images, and deployed with their relevant configuration injected automatically. Any discrepancy is turned into a compile error that prevents the deployment from happening.

The backstage of microservice orchestration

Yaga is, in itself, a glue code framework, and its general idea hasn’t changed at all: extract metadata from artifacts, generate resources for the infrastructure layer, and then verify compatibility to guarantee that the contract will hold after deployment.

What is new is how Yaga extracts information about the interface and requirements of your services. Scala 3 has a feature that is relatively unique among languages: TASTy generation. For all classes compiled with Scala 3, the compiler also generates Typed Abstract Syntax Trees that contain a complete set of information about the shape of the code. This feature enables a plethora of interesting capabilities, like the ability to use Scala 2.13 and Scala 3 code together. Yaga, however, uses it to look up and extract the shapes of the data types that the user defined as containers for the configuration and the links to other services necessary to run the application.
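To give a feel for what "extracting the shape of a data type" means, the sketch below reads the field names of a case class from compiler-provided metadata. It deliberately uses Scala 3's `Mirror` mechanism rather than TASTy inspection, so it is an analogy for the idea and not how Yaga works; `RecipesConfig`, `fieldNames`, and `ShapeDemo` are hypothetical names.

```scala
import scala.compiletime.constValueTuple
import scala.deriving.Mirror

// Hypothetical configuration type, standing in for what a service might declare.
final case class RecipesConfig(productsUrl: String, port: Int, maxRecipes: Int)

// Reads the field names of any case class from the Mirror the compiler synthesizes.
// Note: this is Mirror-based derivation, not TASTy inspection.
inline def fieldNames[A](using m: Mirror.ProductOf[A]): List[String] =
  constValueTuple[m.MirroredElemLabels].toList.map(_.toString)

object ShapeDemo:
  // Resolved at compile time from the definition of RecipesConfig.
  val recipesShape: List[String] = fieldNames[RecipesConfig]
```

For `RecipesConfig`, `ShapeDemo.recipesShape` holds the names `productsUrl`, `port`, and `maxRecipes` in declaration order. TASTy goes further than this, since it is stored alongside the compiled artifact and can be read by a separate tool after compilation.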

The huge benefit here is that TASTy stores all information about these types and therefore enables Yaga to carry rich types over from application code to infrastructure code. As a result, the provided configuration can be validated more thoroughly and very early, before any changes to the infrastructure are applied, using popular and familiar refined type libraries for Scala, like refined or iron.
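The kind of early validation meant here can be sketched without any library, using a Scala 3 opaque type with a smart constructor as a stand-in for what refined or iron provide. The `config` object, `Port`, and `ServiceConfig` are hypothetical names chosen for this example.

```scala
// Hypothetical configuration types; not part of Yaga, refined, or iron.
object config:
  // Outside this object a Port is not interchangeable with a plain Int.
  opaque type Port = Int

  object Port:
    // Smart constructor: the only way to obtain a Port is to pass validation.
    def from(value: Int): Either[String, Port] =
      if value >= 1 && value <= 65535 then Right(value)
      else Left(s"port out of range: $value")

    extension (port: Port) def value: Int = port

  final case class ServiceConfig(host: String, port: Port)

  // Validation runs while the configuration is assembled,
  // before anything is applied to the infrastructure.
  def serviceConfig(host: String, port: Int): Either[String, ServiceConfig] =
    Port.from(port).map(ServiceConfig(host, _))
```

A call such as `config.serviceConfig("products", 0)` yields a `Left` with an error message instead of a deployment that fails later. Libraries like refined and iron can additionally reject invalid literals at compile time, which this hand-rolled version does not attempt.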

The new module, built to support the OpenAPI protocol, helped to pinpoint the exact set of core Yaga features necessary to facilitate the development of further integrations. These integrations are necessary to fulfill the vision of an ecosystem where any kind of cloud-based system can be composed safely out of building blocks that together provide an ergonomic and safe environment.

Foundations of the ecosystem

Yaga’s usefulness depends on how effectively it can help teams build production-grade systems that integrate seamlessly with cloud services, external resources, and APIs. To reach that point, where any arbitrary architecture can be expressed freely, two things have to happen:

- The SDK for building integrations has to be as easy to use as possible, ideally by leveraging the synergy between deterministic code generation and modern LLMs.

- A critical mass of integrations available out of the box has to exist.

Luckily, it turns out that fulfilling the first requirement makes the second one rather easy to solve, and that’s why our focus shifts towards making Yaga as accessible and easy to extend as possible.

There’s also the question of practicality - any type system used in production has to have escape hatches that allow users to bypass the constraints in situations where urgency is of the utmost importance. Besom has been extended recently with a new feature allowing users to override or modify composed resources that are parts of a component to facilitate these use cases, and while this feature is already available in the 0.5.0 release, it’s undergoing final touch-ups and will be announced and documented in the upcoming 0.5.1 release.

Yaga is also going to leverage the newest developments available in the Scala ecosystem and integrate WASM support to make Scala more enticing for the use cases that demand quick startup times and low resource overhead.