At VirtusLab, we’ve long claimed there’s no better way to learn than by building—and right after that comes analyzing someone else’s code. That’s why in our “GitHub All-Stars” series, we take “new or little-known” open-source gems that solve real engineering problems and put them under the microscope.

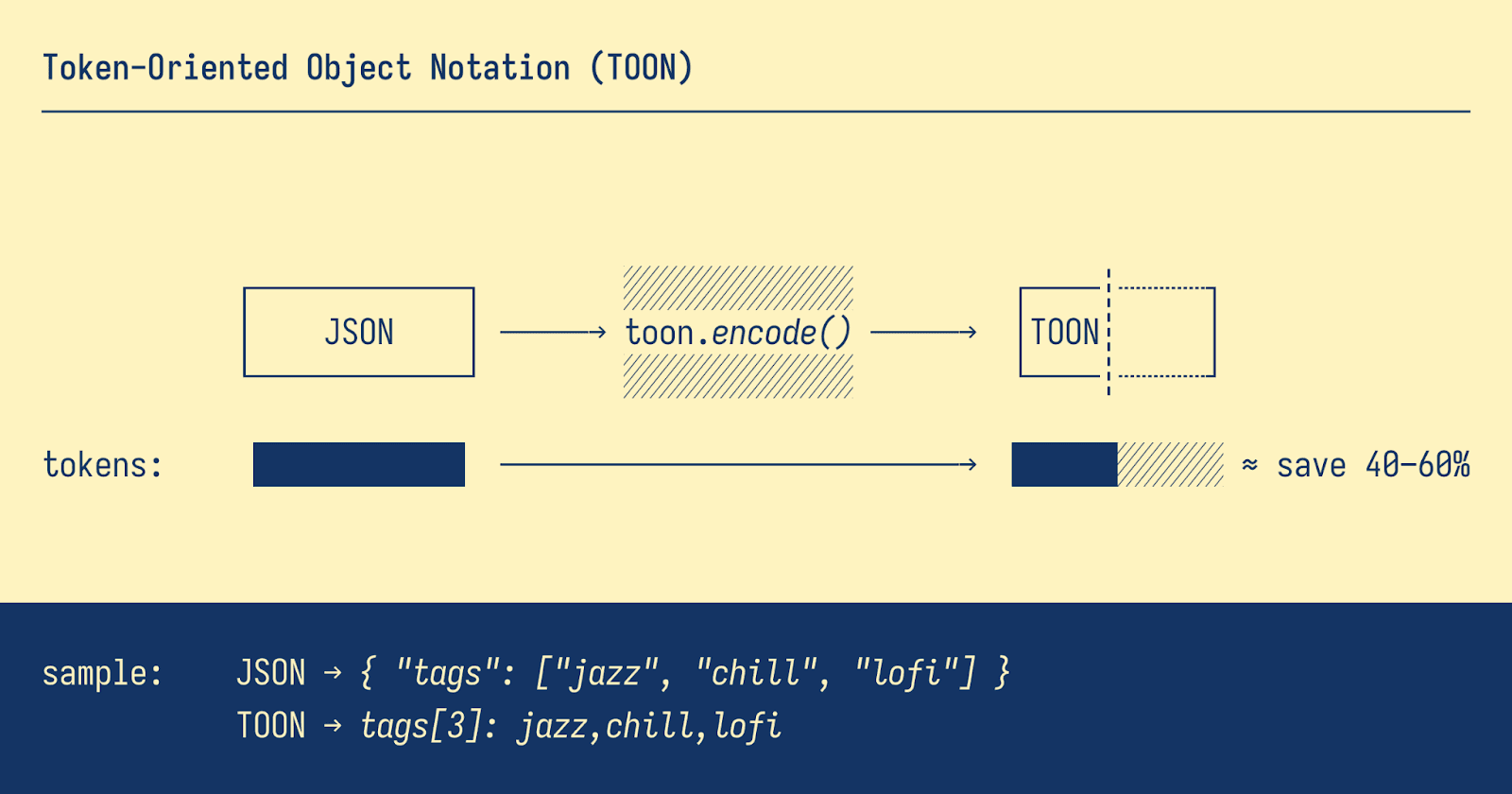

Today, we’re looking at toon, a tool that directly tackles the financial and performance overhead of data serialization in the age of AI.

The Invisible Tax of a Token-Based World

We’ve all been there: staring at a swelling cloud bill and wondering how a simple chatbot function managed to burn more resources than a production database.

Well, everyone who’s actually built this kind of thing.

The culprit (okay, one of the culprits) is often the invisible currency: the LLM token.

Even though AI is getting cheaper (a topic that is debated in its own right) and context windows are growing, every token still has a price. That cost is not only monetary; it also affects latency and context-window usage. JSON, the lingua franca of the internet, turns out to be extremely “chatty” for LLMs: lots of extra punctuation ({}, [], ,, "), and above all, the repeated keys in arrays of objects, create massive token overhead.

And potential for errors.

Over the years, we’ve seen several alternatives to JSON, but their key selling point usually remains human readability. toon approaches the problem differently. While it remains human-readable, it’s compact and purpose-built for passing structured data to large language models, radically reducing token usage. Its value is obvious at a glance.

The table below, based on benchmarks from the project’s documentation, shows real savings. Measurements were taken using a modern tokenizer (o200k_base), used by models like GPT-4o and newer, which makes them relevant to today’s use cases.

Table 1: Token Usage Comparison: JSON vs. TOON (lifted from the README)

| Use case | JSON (tokens) | TOON (tokens) | Savings (tokens) | Reduction (%) |
|---|---|---|---|---|
| Simple user object | 31 | 18 | 13 | 41.9% |
| User with tags | 48 | 28 | 20 | 41.7% |
| Small product catalog | 117 | 49 | 68 | 58.1% |
| API response with users | 123 | 53 | 70 | 56.9% |
| Nested configuration | 68 | 42 | 26 | 38.2% |
| Large dataset (50 records) | 2159 | 762 | 1397 | 64.7% |

The authors state that the savings are not accidental. In the table above, reductions range from about 38% to 65% and exceed 55% for the array-heavy cases, which is a big shift in LLM economics.
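The last two columns of Table 1 follow directly from the first two. As a quick sanity check, here is a small Python sketch that recomputes them; the `reduction` helper is a hypothetical name introduced only for this illustration, and the token counts are copied from the table.

```python
# Token counts copied from Table 1: (JSON tokens, TOON tokens).
TABLE_1 = {
    "Simple user object": (31, 18),
    "User with tags": (48, 28),
    "Small product catalog": (117, 49),
    "API response with users": (123, 53),
    "Nested configuration": (68, 42),
    "Large dataset (50 records)": (2159, 762),
}


def reduction(json_tokens: int, toon_tokens: int) -> tuple[int, float]:
    """Return (tokens saved, percentage reduction rounded to one decimal)."""
    saved = json_tokens - toon_tokens
    return saved, round(100 * saved / json_tokens, 1)


if __name__ == "__main__":
    for use_case, (json_tokens, toon_tokens) in TABLE_1.items():
        saved, percent = reduction(json_tokens, toon_tokens)
        print(f"{use_case}: {saved} tokens saved ({percent}%)")
```

The percentage is always relative to the JSON baseline, so a 64.7% reduction means the TOON payload needs roughly a third of the tokens.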

The project’s creator is Johann Schopplich, an experienced developer whose open-source portfolio focuses on tools that improve performance and developer experience. Interestingly, another of his popular projects, tokenx, is a fast tool for estimating token counts. That synergy is no accident. Johann first built a tool to measure the problem (token cost), then created a tool to solve it (toon).

Creating demand is like the best marketing out there.

toon Deconstructed: An Engineer’s Guide to Syntax and Architecture

toon rests on two principles: determinism and minimalism. Formatting is designed to be predictable for machines and concise for humans. The canonical rules are simple: 2-space indentation, no trailing spaces, and a specific format for simple types (key: value) and nested objects (key:).

Deep Dive into the Syntax

Let’s compare key toon constructs with their JSON counterparts.

Objects and Nesting

A simple object in JSON needs braces and quotes. toon removes that noise.

JSON:

toon:

Nesting is done via indentation—a pattern familiar from Python or YAML, and thus intuitive for developers.

JSON:

toon:
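To make the two rules concrete (key: value for simple types, key: followed by an indented block for nested objects), here is a minimal Python sketch. It is not the project's encoder: `encode_object` and `format_scalar` are hypothetical helpers, the sample data is made up, and string quoting and arrays are deliberately left out.

```python
def format_scalar(value) -> str:
    """Render a primitive the way JSON spells it (true/false/null)."""
    if value is None:
        return "null"
    if isinstance(value, bool):
        return "true" if value else "false"
    return str(value)


def encode_object(obj: dict, depth: int = 0) -> list[str]:
    """Encode a dict of primitives and nested dicts with 2-space indentation.

    Assumption: values are only primitives or dicts; no quoting is applied.
    """
    pad = "  " * depth
    lines = []
    for key, value in obj.items():
        if isinstance(value, dict):
            lines.append(f"{pad}{key}:")  # nested object: bare "key:"
            lines.extend(encode_object(value, depth + 1))
        else:
            lines.append(f"{pad}{key}: {format_scalar(value)}")
    return lines


if __name__ == "__main__":
    sample = {"user": {"id": 1, "name": "Ada", "active": True}}
    print("\n".join(encode_object(sample)))
```

For the made-up sample above, the sketch emits a `user:` line followed by three indented `key: value` lines, with no braces or quotes.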

Three flavors of Arrays

toon’s real power shows in how it handles arrays. The format offers three different strategies tailored to data structure.

1. Primitive arrays (linear): For simple lists of values, toon uses a concise, comma-separated syntax, for example tags[3]: admin,ops,dev

2. Tabular arrays: This is where the biggest savings happen. Instead of repeating keys for each object in the array, toon declares them once in a header.

The syntax key[length]{header1,header2}: is extremely efficient.

JSON (117 tokens):

toon (49 tokens):
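As a rough illustration of the key[length]{header1,header2}: syntax, the Python sketch below builds a tabular block from a uniform list of dicts. `encode_table` is a hypothetical helper, not the library's API; the rows are made up and are not the catalog behind the token counts quoted above; quoting of values that contain commas is not handled.

```python
def encode_table(key: str, rows: list[dict]) -> str:
    """Encode a uniform list of flat dicts as a header line plus one line per row.

    Assumptions: at least one row, every row has the same keys in the same
    order, and no value needs quoting.
    """
    if not rows:
        raise ValueError("this sketch needs at least one row")
    fields = list(rows[0].keys())
    for row in rows:
        if list(row.keys()) != fields:
            raise ValueError("rows must share identical keys for the tabular form")
    # Keys are declared once in the header instead of once per object.
    header = f"{key}[{len(rows)}]{{{','.join(fields)}}}:"
    body = ["  " + ",".join(str(row[field]) for field in fields) for row in rows]
    return "\n".join([header, *body])


if __name__ == "__main__":
    items = [
        {"sku": "A1", "name": "Widget", "qty": 2, "price": 9.99},
        {"sku": "B2", "name": "Gadget", "qty": 1, "price": 14.5},
    ]
    print(encode_table("items", items))
```

The saving grows with the number of rows, because each additional object costs only its values and separators.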

3. Mixed/heterogeneous arrays: When an array contains objects with different keys or mixes objects with primitive types, toon uses a dash-list format (-). It’s more verbose, but it provides flexibility and resilience for irregular data.
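The choice between the three strategies can be sketched as a small classifier. This is an assumption about how such a decision might be made, based only on the descriptions above; `array_strategy` is a hypothetical function and the real encoder may apply additional rules.

```python
PRIMITIVES = (str, int, float, bool, type(None))


def array_strategy(items: list) -> str:
    """Return "primitive", "tabular", or "list" for a given array."""
    # 1. Only primitive values: a single delimiter-separated line.
    if all(isinstance(item, PRIMITIVES) for item in items):
        return "primitive"
    # 2. Only objects with identical keys and primitive values: tabular form.
    if all(isinstance(item, dict) for item in items):
        first_keys = set(items[0].keys())
        same_keys = all(set(item.keys()) == first_keys for item in items)
        flat = all(
            isinstance(value, PRIMITIVES)
            for item in items
            for value in item.values()
        )
        if same_keys and flat:
            return "tabular"
    # 3. Anything else (different keys, nesting, mixed types): dash list.
    return "list"


if __name__ == "__main__":
    print(array_strategy(["admin", "ops", "dev"]))
    print(array_strategy([{"id": 1, "name": "Ada"}, {"id": 2, "name": "Bob"}]))
    print(array_strategy([{"id": 1}, "note", {"id": 2, "tags": ["x"]}]))
```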

toon is more than compression. Its syntax includes elements designed specifically for reliable interaction with language models.

- Explicit array length (the [N] in items[N]): Not just metadata, but a hint to the LLM telling it exactly how many items to generate. This reduces the risk of incomplete or truncated outputs and simplifies validation.
- Explicit field list ({sku,name,qty,price}): Acts like a schema declaration, guiding the model to produce objects with correct fields in the right order.
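These two header elements are what make a model's output cheap to check. The sketch below is a hypothetical validator, not part of the project: `check_table` reads the declared length and field list from the header and compares them with the rows that follow. It splits rows naively on commas, so quoted values are out of scope.

```python
import re

# Matches a tabular header such as: items[2]{sku,name,qty,price}:
HEADER = re.compile(r"^(\w+)\[(\d+)\]\{([^}]*)\}:$")


def check_table(block: str) -> list[str]:
    """Return a list of problems found in a tabular block (empty if none)."""
    lines = [line for line in block.splitlines() if line.strip()]
    match = HEADER.match(lines[0].strip()) if lines else None
    if match is None:
        return ["missing or malformed header"]
    declared = int(match.group(2))
    fields = match.group(3).split(",")
    rows = lines[1:]
    problems = []
    # The declared length catches truncated or over-long output.
    if len(rows) != declared:
        problems.append(f"expected {declared} rows, got {len(rows)}")
    # The field list catches rows with missing or extra values.
    for number, row in enumerate(rows, start=1):
        cells = row.strip().split(",")
        if len(cells) != len(fields):
            problems.append(
                f"row {number}: expected {len(fields)} fields, got {len(cells)}"
            )
    return problems


if __name__ == "__main__":
    truncated = "items[3]{sku,name,qty,price}:\n  A1,Widget,2,9.99\n  B2,Gadget,1"
    for problem in check_table(truncated):
        print(problem)
```

With plain JSON, the same check needs an external schema; here the expectations travel with the payload.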

toon smartly balances machine efficiency (token reduction) and self-validation capability.

Key Patterns: Thinking in Token-Efficiency Paradigms

A few patterns from the project:

Pattern 1: Declarative data via indentation

Rather than using imperative symbols ({, }) to define scope, the data structure is declared visually via indentation. This places toon within a broader trend of declarative tools (like YAML or… Python) that dominates the modern engineering ecosystem—especially the AI-adjacent parts.

Pattern 2: Compression via tabularization

The tabular format is a practical application of the DRY (Don’t Repeat Yourself) principle in data serialization. JSON repeats the keys in every row; toon declares them once. That suits a data pattern that is common in LLM prompts, where context is often provided as an array of examples.

Pattern 3: Self-describing payloads and extensibility

toon lets you define custom separators directly in the array header (e.g., tags[3|]:), which removes ambiguity (looking at you, CSV). This is crucial when serializing text data that may naturally contain commas.
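A minimal Python sketch of that idea: pick a pipe delimiter when any value contains a comma, and announce it in the header as in tags[3|]:. `encode_primitive_array` is a hypothetical helper and the fallback rule is an assumption for illustration; the actual format also has quoting rules that this sketch ignores.

```python
def encode_primitive_array(key: str, values: list[str]) -> str:
    """Encode a list of strings on one line, declaring a non-default delimiter.

    Assumption: values contain commas or pipes, but never both.
    """
    if not any("," in value for value in values):
        delimiter = ","
    elif not any("|" in value for value in values):
        delimiter = "|"
    else:
        raise ValueError("values contain both ',' and '|'; quoting would be needed")
    # The comma is the default, so only a custom delimiter appears in the header.
    marker = "" if delimiter == "," else delimiter
    return f"{key}[{len(values)}{marker}]: {delimiter.join(values)}"


if __name__ == "__main__":
    print(encode_primitive_array("tags", ["admin", "ops", "dev"]))
    print(encode_primitive_array("tags", ["red, green", "blue", "teal"]))
```

Because the delimiter is declared next to the length, a parser never has to guess how a row was split.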

In short, rather than relying on the probabilistic nature of LLMs to correctly parse structure, toon shifts responsibility for structural integrity from the (fallible) model to a (deterministic) data format. It’s a kind of “prompt engineering”… at the data-serialization layer—the model is constrained by the data structure, not just by instruction text.

Conclusions: A Building Block for Protocols

toon is an elegant, engineering-minded solution to a fundamental low-level problem—token costs—bringing tangible benefits in transmission optimization and self-validation. Its syntax and design patterns show just how valuable data optimization is at the AI interface.

As AI becomes a foundational layer of the tech stack, optimizing the interface to AI becomes as important as optimizing algorithms or database queries. Projects like toon are at the forefront of this new discipline, treating data format as a critical element of system performance and cost efficiency.

toon is not a universal JSON replacement, nor should you think of it that way: it was built specifically for LLM contexts. Its limitations, such as requiring every object in a tabular array to share the same keys with primitive values, are conscious trade-offs for maximum efficiency in its target use case. And you know how it goes: if something is good for everything, it’s good for nothing.

A well-deserved GitHub star. 😉