We all know that “AI can code”, but using code assistants in real, long-running commercial projects is not as simple as dropping in a prompt and vibing (i.e., hoping for the best). Mature systems come with layers of dependencies, business rules, legacy constraints, and various integrations, all of which make the idea of “just letting AI code” risky.

To find out how it works in practice, we ran a focused, half-day AI hackathon inside an active commercial project - a large-scale logistics platform built in Scala and deployed on Kubernetes. Our goal was to see how AI can be used responsibly in a real, mature system and to explore its possibilities directly in our project domain, making sure the outcomes were practical, safe, and genuinely valuable.

In a few hours, we turned ideas into working results, not by trying to make AI replace developers, but by letting it support the work: speeding up routine tasks, improving existing tools, and helping prototype new ideas faster.

Here’s what worked, what was possible, and, of course, what failed.

The project and setup

The project is a large-scale microservice-based system in the logistics domain, deployed on Kubernetes in Azure. Its backbone is a Scala-based backend, which carries the core business logic, and a few React-based UIs complement it.

We have access to a variety of leading AI tools (Claude Code, Cursor, Junie, etc.), all of which are configured under subscriptions to ensure that workspace data is not used for model training - a crucial consideration, given the sensitivity of project information.

On the development side, most participants already had a preconfigured set of MCP servers:

- Playwright MCP for browser automation capabilities;

- Context7 for pulling up-to-date, version-specific documentation and code examples;

- Metals MCP for Scala support (if you'd like to learn more, see "A Beginner's Guide to Using Scala Metals With its MCP Server").

Preparations

To keep the hackathon well-structured, we prepared a set of proposed tasks in advance, each described in one or two sentences. These tasks were grouped into several categories:

- Low-hanging fruit: relatively simple improvements with quick implementation potential, though not critical for the project (e.g., updating non-prod utility scripts).

- Regular tasks: standard features or fixes that could be implemented without deep knowledge of complex business logic (e.g., adding a new endpoint).

- Medium-difficulty tasks: initiatives of uncertain necessity but worth experimenting with to test AI’s potential, even if only for exploration or fun.

- Complex tasks: high-effort challenges requiring significant investigation and business logic knowledge, where we were doubtful AI could realistically deliver a complete solution.

What was good

The successes were a mix of small utilities, service improvements, and tooling enhancements - all showing how AI can bring quick, practical value to real project work.

Non-production utility scripts

Quick wins landed fast: during the hackathon, we generated a number of small helper scripts written in Scala, each designed to save time and remove repetitive work. They were intended for non-production environments, focused on enhancing developers’ day-to-day work.

Two examples illustrate this well:

- A script that streamlines database access by opening SSH tunnels with just a few simple arguments, working on all available environments in the project.

- A project-specific script that connects to a database and searches definitions for a specified “rule”, returning matching entries, with clear CLI options and structured output.

Both were generated in minutes, followed the same pattern as our existing scripts, worked exactly as expected, and proved immediately helpful.
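To give a flavour of what such a helper can look like, here is a minimal sketch of an SSH tunnel script. It is an illustration only, not the script from the hackathon: the environment names, hosts, ports, and the `DbTunnel` object are all made up, and it assumes key-based SSH authentication through a bastion host.

```scala
import scala.sys.process._

// Hypothetical sketch: environment names, hosts and ports are placeholders,
// not the project's real configuration.
object DbTunnel {
  // One entry per environment: the bastion host to SSH into and the database behind it.
  final case class Target(bastion: String, dbHost: String, dbPort: Int)

  val targets: Map[String, Target] = Map(
    "dev"     -> Target("bastion.dev.example.com", "db.dev.internal", 5432),
    "staging" -> Target("bastion.staging.example.com", "db.staging.internal", 5432)
  )

  // Builds `ssh -N -L <localPort>:<dbHost>:<dbPort> <user>@<bastion>`.
  // -N: do not run a remote command; -L: forward a local port to the database.
  def tunnelCommand(env: String, user: String, localPort: Int): Either[String, Seq[String]] =
    targets.get(env) match {
      case Some(t) =>
        Right(Seq("ssh", "-N", "-L", s"$localPort:${t.dbHost}:${t.dbPort}", s"$user@${t.bastion}"))
      case None =>
        Left(s"Unknown environment '$env'. Known: ${targets.keys.toSeq.sorted.mkString(", ")}")
    }

  def main(args: Array[String]): Unit = args match {
    case Array(env, user, port) if port.toIntOption.isDefined =>
      tunnelCommand(env, user, port.toInt) match {
        case Right(cmd) =>
          println(s"Opening tunnel: ${cmd.mkString(" ")}")
          sys.exit(cmd.!) // blocks until the tunnel is closed (Ctrl+C)
        case Left(err) =>
          Console.err.println(err)
          sys.exit(1)
      }
    case _ =>
      Console.err.println("Usage: DbTunnel <env> <user> <localPort>")
      sys.exit(1)
  }
}
```

Keeping the command construction in a pure function (`tunnelCommand`) separate from `main` makes this kind of utility easy to review and test, which matters even for non-production scripts.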

In my opinion, this is one of the most convenient and safe uses of generative AI - producing quick, reliable utilities that make developers’ everyday work easier.

Improvements in supporting service

Another positive outcome was work on a small but still important side component: a Prometheus Alertmanager webhook receiver that integrates with ServiceNow.

While the entire system is written in Scala, this single service is implemented in Go - a language our team doesn’t normally use. Since the webhook changes very rarely, it has been left with a few outdated dependencies for quite some time.

During the hackathon, with the help of AI, we successfully updated those dependencies and even introduced some refactoring (e.g., structured error logging), all in a language that was unfamiliar to us.

Enhancing code quality tools

We also experimented with integrating Scalafix, a refactoring and linting tool for Scala, into our project. Since we use Bazel as our build system, the setup required some adjustments. The first AI-guided attempt failed because the CLI wasn’t installed, but after adding the missing tools, the assistant quickly recovered, completing the integration and even generating the configuration file.

What could have been a tedious setup turned into a smooth process. Scalafix can now be run directly through Bazel on specific directories.

It’s a small step, but one that both improves code quality and demonstrates how AI can effectively support developer tooling.

Low-hanging fruit

We also picked some low-hanging fruit - easy tasks that rarely sit at the top of anyone’s mind. The hackathon created the perfect space for AI to tackle them. A good example was adding cross-links between related items in the UI, which made navigation between connected records much easier. These improvements were straightforward to implement and not critical to the project, yet they worked right away and delivered clear usability benefits.

What was possible

Beyond the minor improvements, we looked at how AI could help with larger tasks, including building features that span both backend and frontend, turning existing data into visual insights, and even rethinking deployment workflows.

Project-specific full-stack feature

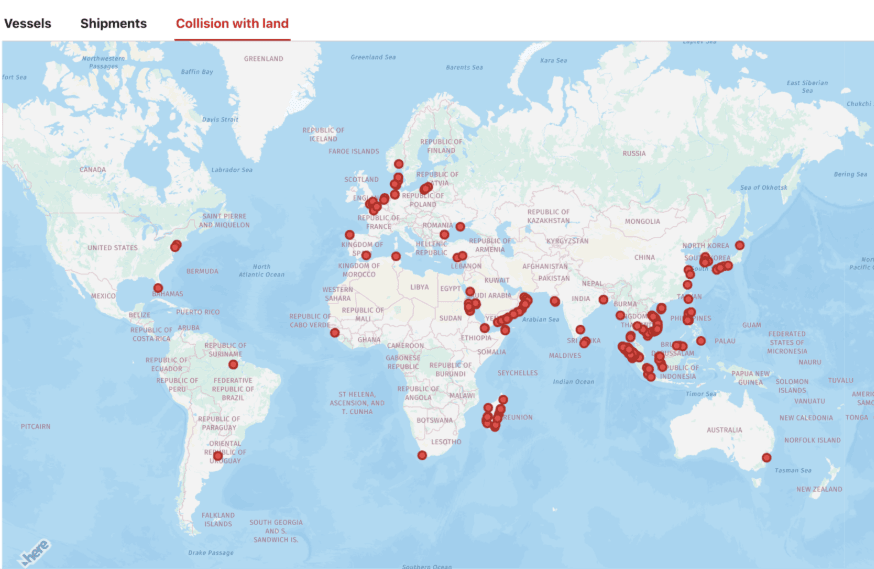

In the first edition of This Month We AIed (#1), I described a case where I used AI to generate reports based on vessel-related logs downloaded from Kibana. Back then, the goal was to analyze over 20,000 trajectory records, extract insights such as problematic port pairs or frequent collision routes, and propose improvements.

As a follow-up during the hackathon, we went beyond the earlier approach of running AI-generated scripts on demand against manually downloaded log files. Instead, we integrated the same kind of analysis directly into the application. The result was a full-stack feature: a UI view showing the analysis, backed by a simple service for storing and retrieving metrics from the database.

It’s worth highlighting that this wasn’t just a quick demo. The feature included all the layers you would expect in a real application - a backend endpoint, database integration, and a working UI component - all covered with tests and written in a style consistent with our project’s coding standards.

While manual cleanup was still needed afterward (e.g., removing unnecessary test cases, renaming variables, etc.), the result stood as a working example of a complete, full-stack feature generated with AI.
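To make the kind of metric behind this feature more concrete, here is a minimal sketch of a port-pair aggregation. It is not the generated code: the record shape, the `failed` flag, and the `PortPairReport` object are assumptions made purely for illustration, since the real log schema is project-specific.

```scala
object PortPairReport {
  // Hypothetical record shape; the real log schema is not shown in this post.
  final case class TrajectoryRecord(origin: String, destination: String, failed: Boolean)

  // Aggregated numbers for one (origin, destination) pair.
  final case class PairStats(origin: String, destination: String, total: Int, failures: Int)

  // Groups records by port pair, keeps only pairs with at least one failure,
  // and returns the `top` pairs ordered by failure count (ties broken by name).
  def problematicPairs(records: Seq[TrajectoryRecord], top: Int): Seq[PairStats] =
    records
      .groupBy(r => (r.origin, r.destination))
      .map { case ((o, d), rs) => PairStats(o, d, rs.size, rs.count(_.failed)) }
      .toSeq
      .filter(_.failures > 0)
      .sortBy(s => (-s.failures, s.origin, s.destination))
      .take(top)
}
```

In a setup like ours, a function of this kind would sit behind the backend endpoint, with the results stored in the database and rendered by the UI component.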

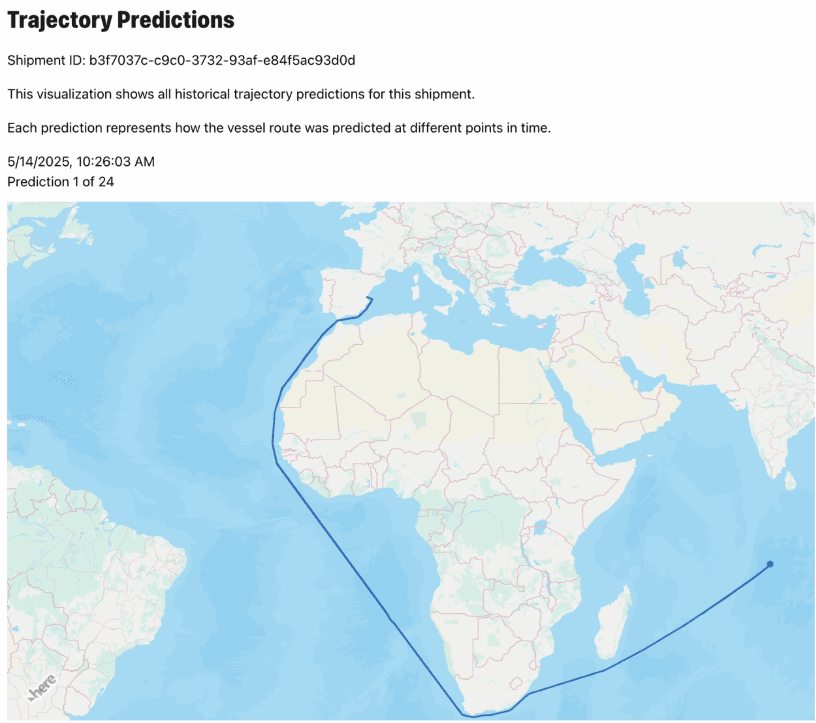

Visualization of existing feature

Another area we explored was extending the UI with a visualization built directly on top of existing data. A good example is the implementation of a view that replays historical trajectory records as an animation, showing how they change over time. The solution reused stored entries as time-series inputs, integrated them into the UI, and rendered them in a clear, interactive way. This transformed raw data into a visual preview that was much easier to interpret at a glance.
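The core idea of replaying stored entries as a time series can be sketched as follows. This is an assumption-laden illustration rather than our implementation: the `TrajectoryPoint` fields and the `Frame` type are hypothetical, and the same logic could just as well live in the UI layer.

```scala
object TrajectoryReplay {
  // Hypothetical shape of a stored entry; field names are illustrative.
  final case class TrajectoryPoint(vesselId: String, epochSeconds: Long, lat: Double, lon: Double)

  // One animation frame: the latest known position of every vessel at `epochSeconds`.
  final case class Frame(epochSeconds: Long, positions: Map[String, (Double, Double)])

  // Walks the points in time order and emits one frame per distinct timestamp,
  // carrying forward the last known position of vessels that did not report.
  def frames(points: Seq[TrajectoryPoint]): Seq[Frame] = {
    val byTime = points.groupBy(_.epochSeconds).toSeq.sortBy(_._1)
    byTime
      .scanLeft(Frame(0L, Map.empty)) { case (prev, (time, atTime)) =>
        Frame(time, prev.positions ++ atTime.map(p => (p.vesselId, (p.lat, p.lon))))
      }
      .drop(1) // drop the empty seed frame
  }
}
```

The animation then only has to step through the frames at a fixed interval and redraw the positions.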

Exploring deployment alternatives

Another experiment was creating a Helm chart based on an existing Terraform configuration. The idea was simple: if AI could handle the conversion effectively, we could move more quickly toward adopting ArgoCD for future deployments. Given the limited time, the scope was narrowed to a single service, with verification done by deploying the chart and comparing the results against the Terraform deployment. The expectation was that both would produce the same service without differences, giving us a ready-to-switch Helm implementation that could be tested in a single environment.

The outcome was promising, but not without drawbacks. The generated chart still relied on Terraform for specific outputs, and secrets remained tied to Terraform. Despite these limitations, the experiment showed that AI-supported Helm migration could be within reach, and with further refinement, AI could accelerate the path toward a smoother GitOps setup.

Where it failed

Not every attempt worked out as planned. Some experiments revealed the current limitations of AI in our workflow, and a few ideas remained unfinished or were only partially useful. Still, each of them provided insights, prototypes, or practices that could guide future improvements.

UX refresh

One of our attempts was to improve the user experience of one of our internal UIs by giving it a cleaner, more user-friendly look while keeping the business logic unchanged. The refreshed version looked only slightly better, its main functionality broke, and we were unable to stabilize it despite several attempts.

On the other hand, the attempt worked well as a prototype, as it gave us a sense of what a redesigned interface could look like. Just as importantly, this experiment introduced us to using a Playwright MCP server for UI testing during AI development. That practice turned out to be a great takeaway, and it will continue to support our work on frontends going forward.

Business-logic oriented service README

We also attempted to create a README for one of our most complex services, where the complexity comes not from the technology but from the substantial amount of business logic it contains.

The idea was to provide a concise entry point that would help new developers quickly understand what the service does, while also serving as a handy reference for more seasoned team members.

The outcome is hard to measure in terms of real usefulness. Even after a few iterations, the draft stayed too general and didn’t quite work as a helpful guide.

Summary

In just 4 hours, with only a few participants, our AI hackathon delivered far more than we expected - all inside a mature system that has been running for years.

We were especially happy with the smaller improvements - things that usually stay at the bottom of the backlog but that added up to meaningful gains. At the same time, more advanced topics were helpful and promising, while tasks that were heavy in complex business logic showed that this is still a challenging area for AI to tackle.

Altogether, the hackathon convinced us to keep leveraging AI tools to support our day-to-day work.

If you’re interested in more stories like this, check out This Month We AIed, our monthly series where we openly share successes, failures, and lessons learned using AI tools.

Reviewed by: Piotr Janczyk