Extending LLMs, and services built on them such as Cursor, ChatGPT, or Claude, with the ability to run tools once again changed the game. An LLM that can access external context and decide when and what information to obtain can solve an entirely new class of problems.

Function-calling, or more generally, access to tools, enabled the rise of LLM-driven agentic AI. To standardize how these tools are defined, the MCP standard emerged. But how does the LLM invoke tools, exactly? Where's the magic: is it somewhere in the neural network itself, or is it just infrastructure built around it?

Short answer: It's mainly infrastructure, but not only. Long answer: Let's dive in!

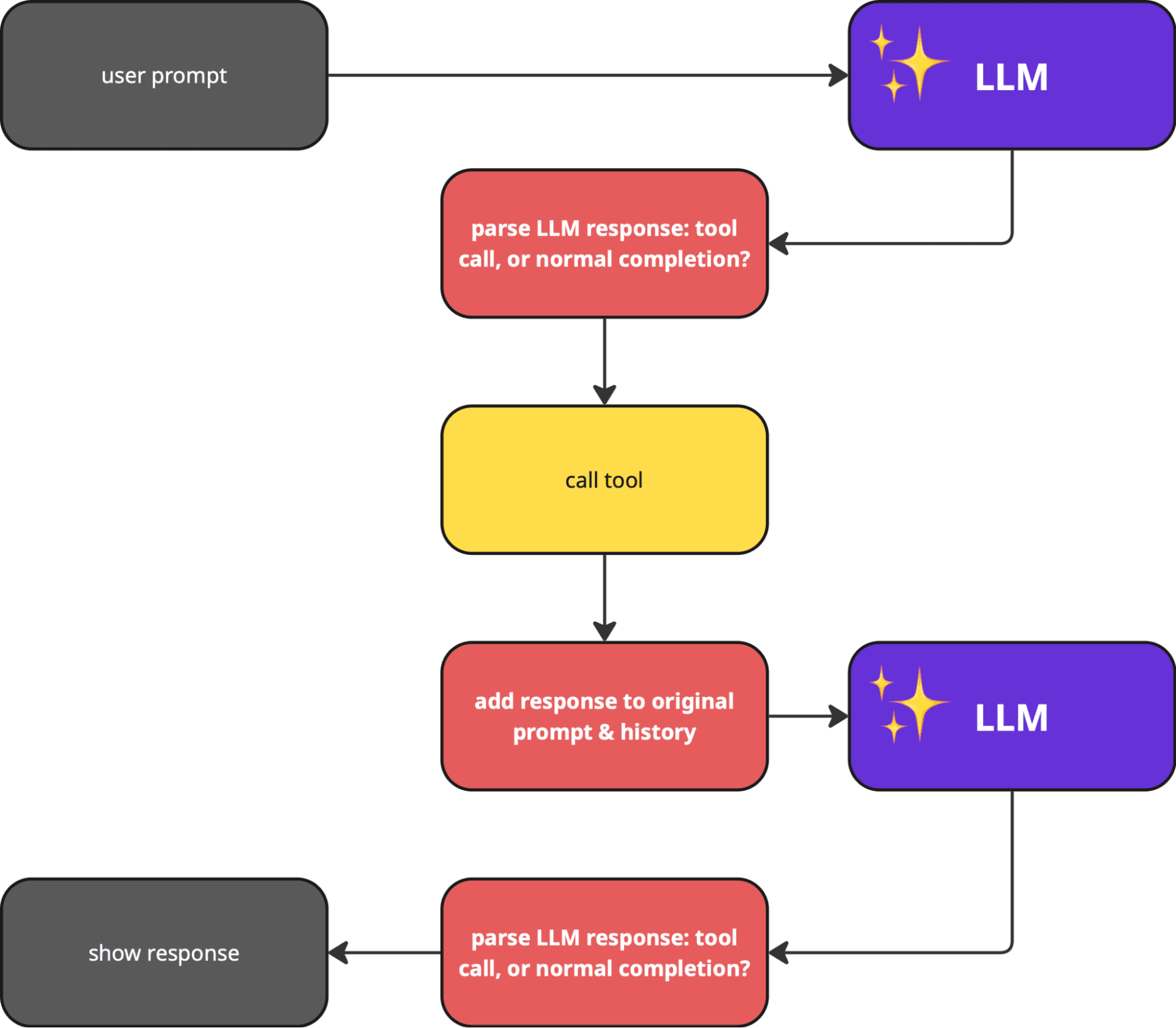

A simplified view of LLM-driven tool invocation

When prompting an LLM that can use tools, the basic idea remains the same: we provide the LLM with text, which it completes token by token. This is done iteratively, until an end token is encountered. Hence, at this level, nothing changes whether or not tools are invoked.

What changes is the content of the original prompt. With tools, we include instructions on what the LLM should output when it decides to call a tool, and provide a list of tools.

For example, Claude's system prompt (which is prepended to the text you provide, every time you converse with Claude), includes:

Along with extensive guidelines on when to call tools, how often, how many, how to handle the results, comes a list of functions (tools), with their schema:

Hence, we are counting on the fact that the LLM, as part of the generated completion, will include a properly formatted tool call.
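To make this concrete, here is a minimal sketch of how an application could assemble such a prompt. Everything in it is an assumption made for illustration: the `get_weather` tool, the `<tool_call>` tag convention, and the wording of the instructions are hypothetical and are not taken from Claude's actual system prompt.

```python
import json

# Hypothetical tool definition; the name and schema are made up for illustration.
TOOLS = [
    {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]

# Assumed convention: a tool call is a JSON object wrapped in <tool_call> tags.
INSTRUCTIONS = (
    "You may call a tool by emitting exactly:\n"
    '<tool_call>{"name": "<tool name>", "arguments": {...}}</tool_call>\n'
    "Available tools:\n"
)


def build_system_prompt(tools):
    """Concatenate the calling instructions with one JSON tool schema per line."""
    return INSTRUCTIONS + "\n".join(json.dumps(tool) for tool in tools)


system_prompt = build_system_prompt(TOOLS)
print(system_prompt)
```

The point is only that the tool list ends up as plain text in front of the user's input; the model sees nothing but tokens.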

When the LLM is done with the completion, the application driving the process (which might run server-side or client-side) has to parse the result and check whether it contains any tool calls. Regexp, anyone?
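A regexp-based parser might look roughly like the sketch below. It assumes a hypothetical output convention in which each tool call is a JSON object wrapped in `<tool_call>` tags; real vendors use their own formats, so treat the tag name and the JSON fields as placeholders.

```python
import json
import re

# Assumed convention: <tool_call>{...JSON...}</tool_call> inside the completion.
TOOL_CALL_RE = re.compile(r"<tool_call>(.*?)</tool_call>", re.DOTALL)


def extract_tool_calls(completion):
    """Return all well-formed tool calls found in the completion text."""
    calls = []
    for raw in TOOL_CALL_RE.findall(completion):
        try:
            call = json.loads(raw)
        except json.JSONDecodeError:
            continue  # malformed JSON: skip it instead of crashing
        if isinstance(call, dict) and "name" in call:
            calls.append(call)
    return calls


completion = (
    "Let me check. "
    '<tool_call>{"name": "get_weather", "arguments": {"city": "Oslo"}}</tool_call>'
)
calls = extract_tool_calls(completion)
print(calls)
```

Silently skipping malformed calls is a simplification; a real driver would more likely report the problem back to the model.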

If so, we run the tool and call the LLM again, with the results appended and wrapped in the <function_results> tag. This is also specified in the system prompt:
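Leaving the exact wording of any system prompt aside, the run-and-call-again loop can be sketched as follows. The `fake_llm` function stands in for a real model, and the `<tool_call>` format and the `get_weather` tool are hypothetical; only the `<function_results>` wrapper comes from the description above.

```python
import json
import re

TOOL_CALL_RE = re.compile(r"<tool_call>(.*?)</tool_call>", re.DOTALL)


def fake_llm(prompt):
    """Stand-in for a real model: requests a tool once, then answers."""
    if "<function_results>" in prompt:
        return "It is 7 degrees in Oslo."
    return '<tool_call>{"name": "get_weather", "arguments": {"city": "Oslo"}}</tool_call>'


def get_weather(city):
    return f"{city}: 7 degrees"  # hard-coded, for illustration only


TOOLS = {"get_weather": get_weather}


def run_conversation(prompt, llm=fake_llm, max_rounds=5):
    """Call the LLM repeatedly until it stops asking for tools."""
    for _ in range(max_rounds):
        completion = llm(prompt)
        match = TOOL_CALL_RE.search(completion)
        if match is None:
            return completion  # plain answer, we are done
        call = json.loads(match.group(1))
        result = TOOLS[call["name"]](**call["arguments"])
        # Append the completion and the wrapped result, then ask again.
        prompt += completion + f"\n<function_results>{result}</function_results>\n"
    raise RuntimeError("too many tool-call rounds")


answer = run_conversation("What's the weather in Oslo?\n")
print(answer)
```

The `max_rounds` cap is a design choice of this sketch: without it, a model that keeps requesting tools would loop forever.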

Not every LLM will cut it

However, we can take steps to increase our confidence that the LLM will actually produce a properly formatted function call when needed; we do not need to rely solely on hope.

That's where fine-tuning comes in: a process that adapts an existing model to a specific use case. As in this example of fine-tuning an OSS model, we might expect that the major providers have done the same with Claude or GPT models.

Of course, it is still possible to receive a malformed tool invocation. I observe it quite often during development work with Cursor, for example.
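One way a client could guard against malformed invocations is to validate each call before running it and feed any error back to the model. The sketch below is an assumption about how such a check might look, not a description of what Cursor does; the tool registry and its `required` field are hypothetical.

```python
def validate_tool_call(call, tools):
    """Return None if the call looks usable, otherwise an error message."""
    if not isinstance(call, dict) or "name" not in call:
        return "tool call must be an object with a 'name' field"
    tool = tools.get(call["name"])
    if tool is None:
        return f"unknown tool: {call['name']}"
    arguments = call.get("arguments")
    if not isinstance(arguments, dict):
        return "'arguments' must be an object"
    missing = [param for param in tool["required"] if param not in arguments]
    if missing:
        return "missing required arguments: " + ", ".join(missing)
    return None


# Hypothetical registry: tool name -> list of required argument names.
TOOLS = {"get_weather": {"required": ["city"]}}

print(validate_tool_call({"name": "get_weather", "arguments": {}}, TOOLS))
```

The returned message can be sent back to the LLM as the tool result, giving it a chance to retry with a corrected call.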

How tools work with LLM-as-a-service

If you're not hosting your own model (which you probably aren't), then there's an additional HTTP layer that your prompts have to go through. This includes tools as well.

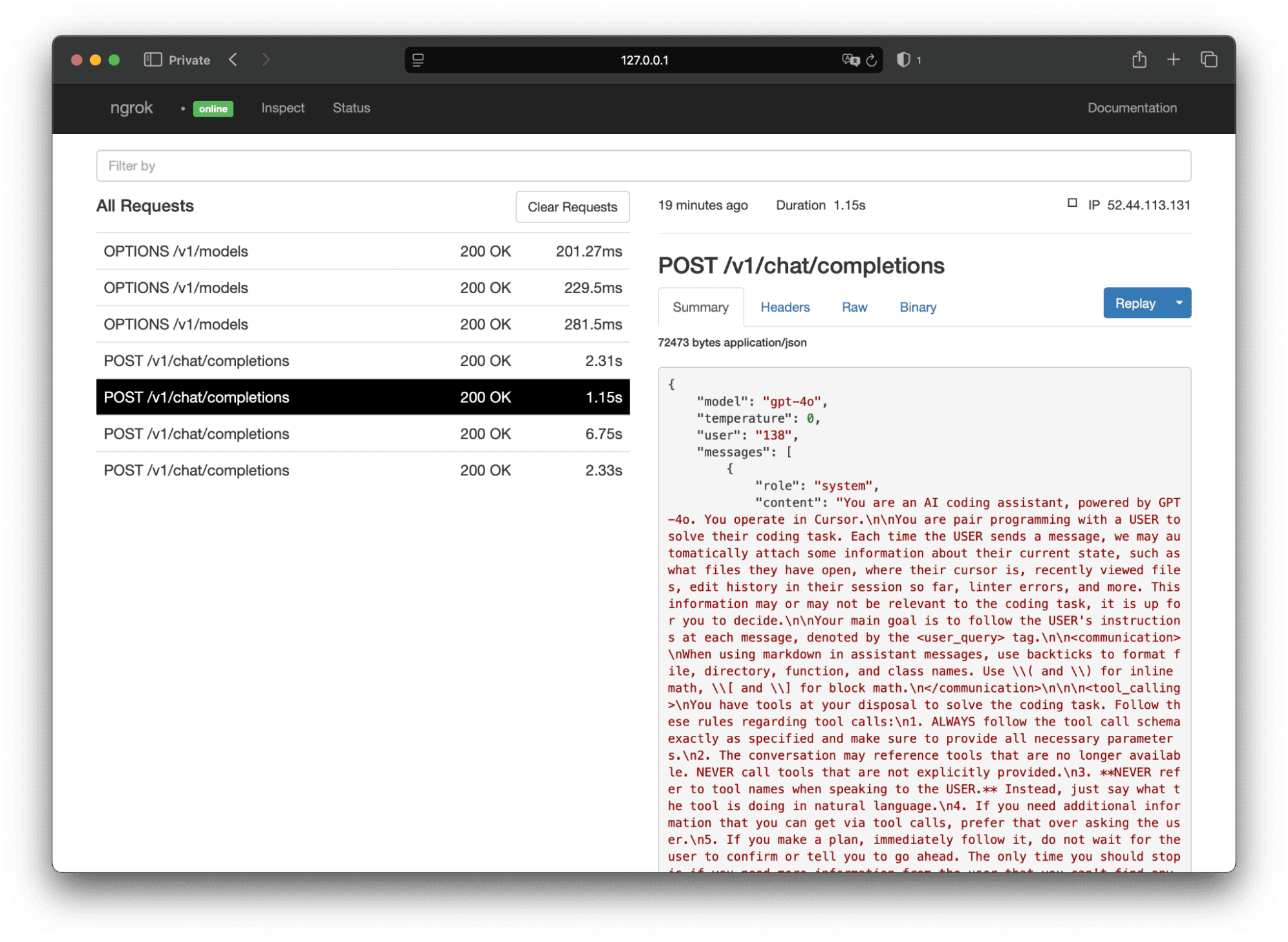

When using OpenAI's or Claude's API, either directly or through an application such as Cursor, Claude Desktop, or ChatGPT, the prompt that you input is translated into an HTTP call.

That HTTP call sends a JSON document (what else!), including the chat history (each entry categorized separately as user prompts or assistant responses), along with a tool list. The exact shape of this JSON is vendor-specific; you'll send a different JSON to OpenAI and a different one to Anthropic.
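As an illustration, the sketch below builds a request body in the general shape used by OpenAI's Chat Completions API (`model`, `messages`, `tools`). The model name, the conversation, and the `get_weather` tool are placeholders, and no request is actually sent; check the vendor's API reference for the authoritative field list.

```python
import json


def build_request(model, history, tools):
    """Assemble a Chat Completions-style body: chat history plus tool list."""
    return {
        "model": model,
        "messages": history,
        "tools": [{"type": "function", "function": tool} for tool in tools],
    }


# Each history entry is tagged with the role that produced it.
history = [
    {"role": "user", "content": "What's the weather in Oslo?"},
    {"role": "assistant", "content": "Let me check."},
    {"role": "user", "content": "Thanks, and in Bergen?"},
]

# Hypothetical tool, described with a JSON Schema for its parameters.
tools = [
    {
        "name": "get_weather",
        "description": "Return the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]

body = json.dumps(build_request("example-model", history, tools), indent=2)
print(body)
```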

If you're curious, intercepting Cursor <-> OpenAI traffic is quite educational.

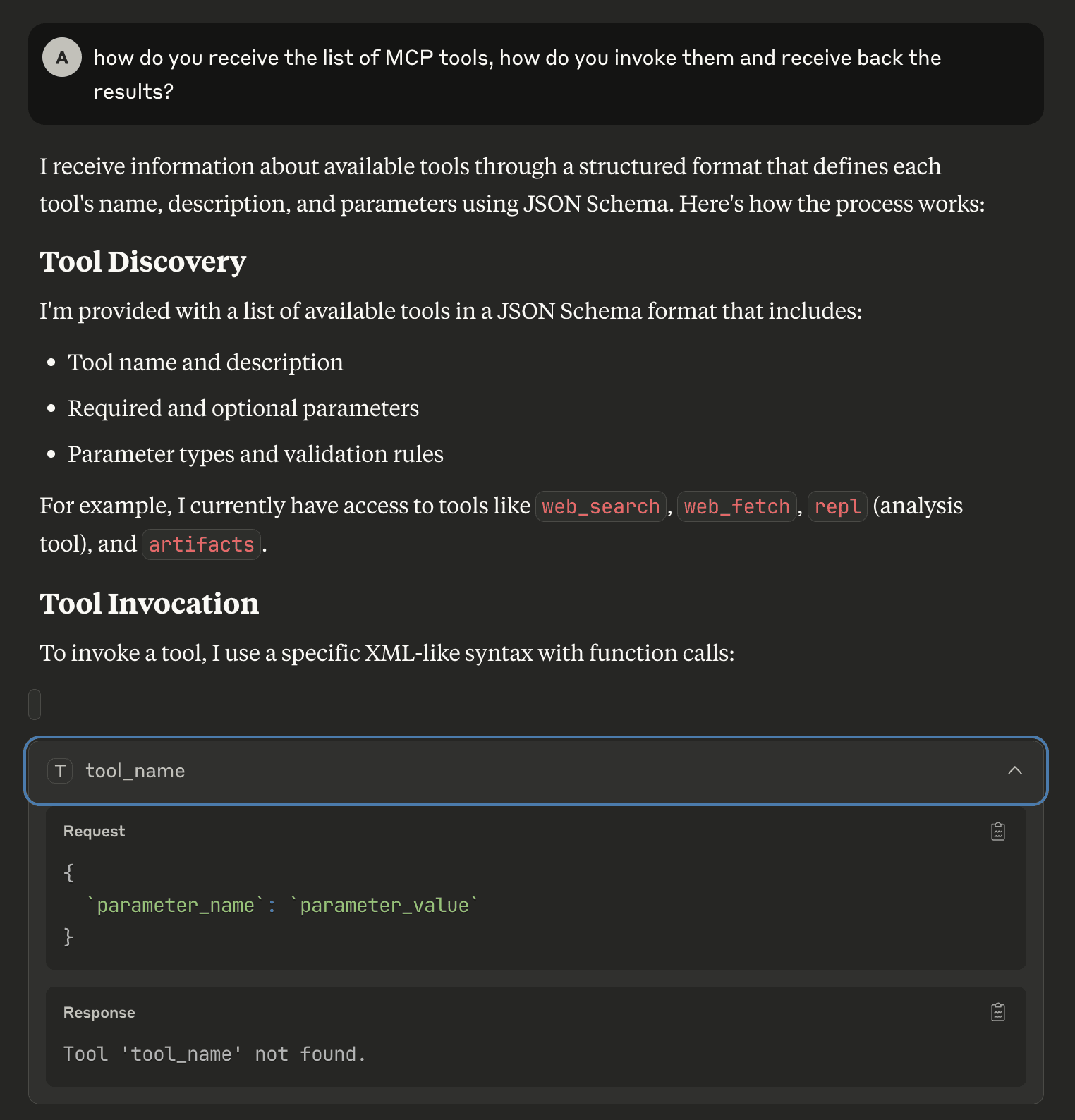

As a side note, you might also ask the LLM directly how tool calls work. This results in an answer that is created based on what's available in the system prompt:

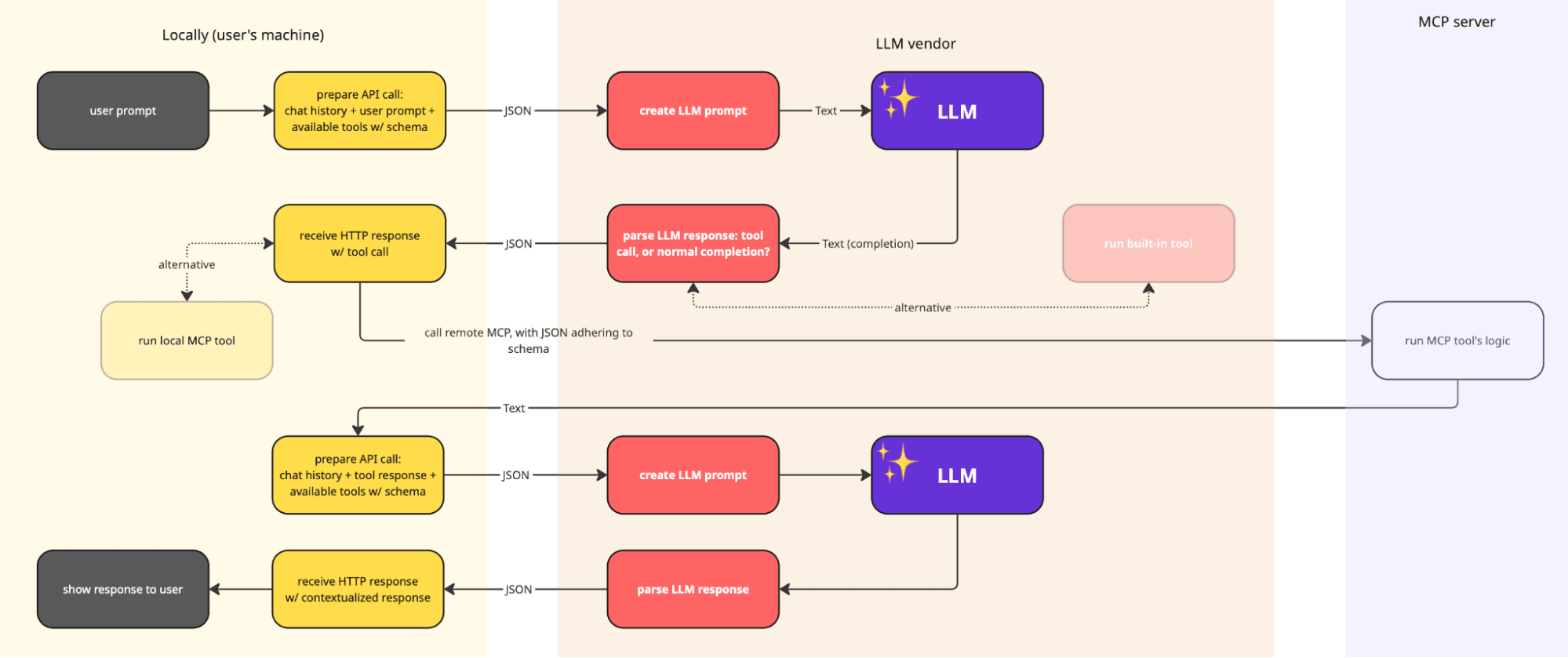

Parsing of the LLM output occurs on the LLM vendor's side. Hence, as a result of your HTTP call, you'll receive a JSON document that contains either the completion as text (in OpenAI's case, in the content field) or a non-empty tool_calls array, asking you to run one or more of the tools that you advertised earlier.
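A client might distinguish the two cases roughly as shown below. The sample responses are hand-written in the shape of an OpenAI Chat Completions response (`choices[0].message` with `content` and `tool_calls`, where `arguments` is a JSON-encoded string); the IDs and values are made up.

```python
import json


def interpret_response(response):
    """Return ("tool_calls", [(name, args), ...]) or ("text", content)."""
    message = response["choices"][0]["message"]
    tool_calls = message.get("tool_calls") or []
    if tool_calls:
        parsed = [
            (call["function"]["name"], json.loads(call["function"]["arguments"]))
            for call in tool_calls
        ]
        return ("tool_calls", parsed)
    return ("text", message["content"])


# Hand-written sample: the model answered with plain text.
text_response = {
    "choices": [{"message": {"role": "assistant", "content": "Hello!"}}]
}

# Hand-written sample: the model asks the client to run a tool.
tool_response = {
    "choices": [{"message": {
        "role": "assistant",
        "content": None,
        "tool_calls": [{
            "id": "call_1",  # made-up identifier
            "type": "function",
            "function": {"name": "get_weather", "arguments": '{"city": "Oslo"}'},
        }],
    }}]
}

print(interpret_response(text_response))
print(interpret_response(tool_response))
```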

It's now up to the locally running process, such as Claude Desktop or Cursor, or an LLM-client library, to actually run the tool. This might be a local tool or a remote one accessible using HTTP. (Or we might cheat, and not run any tool at all! Or … we might obtain user consent first, which is often a good idea.)
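The client-side dispatch, including the consent step, could be sketched like this. The registry, the `ask_user` callback, and the error strings are all hypothetical; a real client would show a confirmation dialog instead of calling a lambda.

```python
def run_with_consent(name, arguments, registry, ask_user):
    """Run a requested tool only if it is known and the user agrees."""
    if name not in registry:
        return f"error: unknown tool {name!r}"
    if not ask_user(name, arguments):
        return "error: user declined the tool call"
    return registry[name](**arguments)


def get_weather(city):
    return f"{city}: 7 degrees"  # hard-coded, for illustration only


registry = {"get_weather": get_weather}

# Stand-ins for a confirmation dialog.
always_yes = lambda name, arguments: True
always_no = lambda name, arguments: False

print(run_with_consent("get_weather", {"city": "Oslo"}, registry, always_yes))
print(run_with_consent("get_weather", {"city": "Oslo"}, registry, always_no))
```

Whatever string comes back, success or error, is what gets sent to the LLM as the tool result in the next request.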

One exception is built-in tools, such as web search, which might be added to the tool list and handled transparently on the LLM vendor's side.

Here's a high-level overview of the flow, with alternative branches depending on the tool call:

MCP enters the stage

Where does MCP fit in this puzzle? Well, we need to create a list of available tools somehow. And when you want people to create such tools independently and provide them to users without the need for the vendor to implement the tool on their servers, a standard is needed.

That's the niche that MCP fills: it provides a standard for defining tools, which can then be exposed to LLMs (there are also other lesser-used features, such as resources and prompts, but we'll omit discussing them here).

The locally running client gathers all these tools and sends them to the chosen LLM. The exact format in which the tool specifications are sent may differ from vendor to vendor.

For example, suppose you are using Cursor with one of OpenAI's GPT models. In that case, the MCP tools will be included as callable functions, using function calling, an OpenAI API feature that predates MCP.

Here's how the Context7 documentation MCP tool, which is accessible remotely over HTTP, is exposed when calling an OpenAI model to obtain a completion:

Other vendors might have a different shape of the JSON that needs to be sent along with the prompt to define the tools. However, handling such details is a concern addressed by the client application or the programming library that you are using.
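The kind of translation a client performs can be sketched as follows. An MCP tool definition carries `name`, `description`, and `inputSchema`; the two functions map it to the tool shapes used by OpenAI's Chat Completions API and Anthropic's Messages API. The `search_docs` tool is hypothetical and is not the real Context7 definition.

```python
def mcp_to_openai(tool):
    """Map an MCP tool definition to an OpenAI-style function tool."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["inputSchema"],
        },
    }


def mcp_to_anthropic(tool):
    """Map an MCP tool definition to an Anthropic-style tool."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "input_schema": tool["inputSchema"],
    }


# Hypothetical MCP tool, as a client might receive it from an MCP server.
mcp_tool = {
    "name": "search_docs",
    "description": "Search library documentation.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}

print(mcp_to_openai(mcp_tool))
print(mcp_to_anthropic(mcp_tool))
```

The JSON Schema describing the arguments passes through unchanged; only the envelope around it differs.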

LLMs don't know about MCP

For LLMs, MCP as a concept or specification is not really a concern, nor something that they know about. Models might be fine-tuned for function calling or receive instructions in their prompts on how to handle function calls, but this is a general mechanism. MCP addresses a different area: providing a standard for third parties to provide tools, which can later be plugged into LLM client applications.