I’ve always claimed there’s no better way to learn anything than by building something yourself… and the second-best way is reviewing someone else’s code 😁.

At VirtusLab, we recently had a bit of a sobering reflection. Our “collection” of GitHub-starred projects had grown to a massive size, without bringing much real value to us or the wider community. So, we decided to change our approach: to add a bit of structure and start acting as chroniclers of these open-source gems. By doing this, we can understand them better - and discover the ones where we can actually contribute.

From now on, every Wednesday we’ll pick one trending repo from the previous week and give it some attention: a tutorial, an article, or a code review - learning directly from its authors. We’ll focus on what catches our eye: it could be a tool, a library, or anything the community has decided is worth publishing. Only one rule applies: it has to be new or relatively unknown. No rehashing the obvious projects that get thousands of stars after a big release (because honestly, who wants to hear another breakdown of Angular’s architecture?).

With that out of the way, let’s dive into our first project: deepagents.

Shallow Loops vs Deep Thinking

I’ll start with a hot (or maybe more mildly warm) take – the future of advanced AI systems may depend less on breakthroughs in the models themselves, and more on innovations in the architectures that orchestrate them.

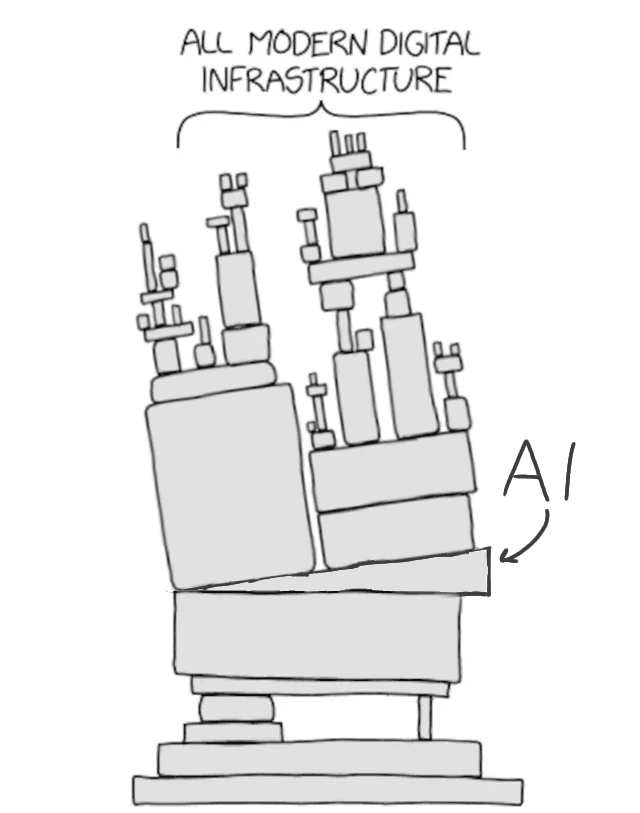

Agents based on large language models promise automation of complex tasks. However, the dominant architecture that has emerged as the standard is deceptively simple: an LLM running in a loop, calling tools to interact with the outside world. This approach, known as the ReAct (Reason-Act) loop, while effective in simple scenarios, quickly reveals its limitations. It leads to the creation of agents that can be described as “shallow” – they struggle to maintain long-term strategy, plan multi-step operations, and manage complex context, which prevents them from carrying out truly sophisticated tasks, such as in-depth research or software development. I suspect that few readers would take on building their own alternative to Claude Code, yet every day I see new “agent” apps mushrooming everywhere.

That’s why I decided to look into the deepagents project, which is a direct response to this problem. It is not an attempt to create a revolutionary new algorithm or a more powerful LLM. Its strength and elegance lie elsewhere – in smart compositional engineering.

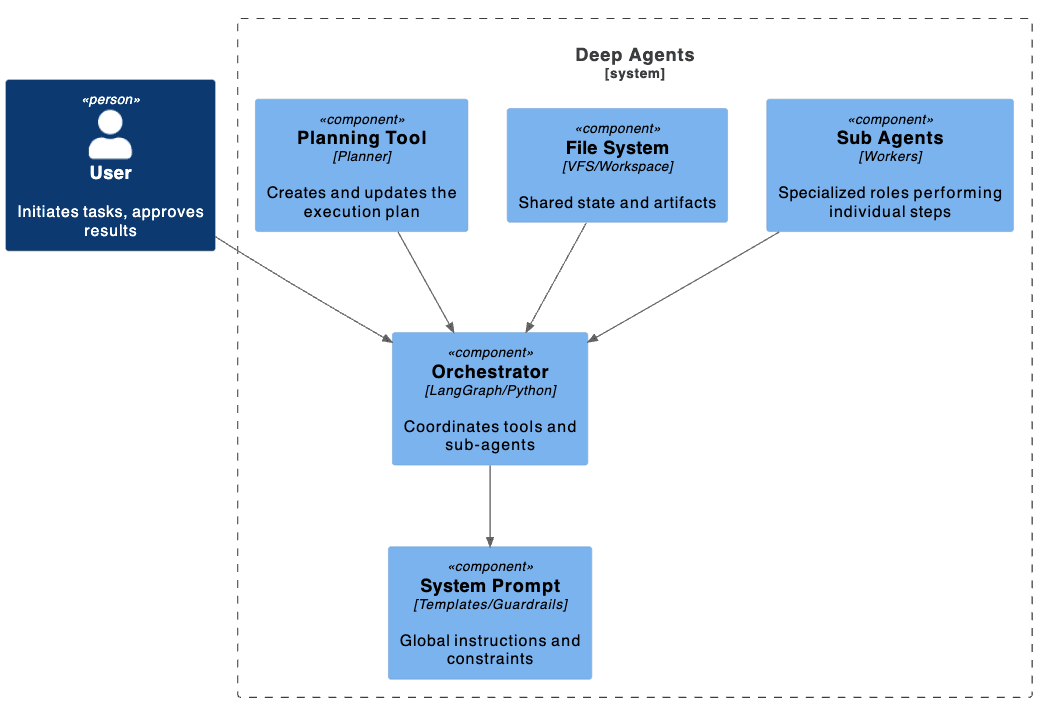

Analyzing this project showed me an interesting trend in AI development: the shift from a model-centric AI paradigm to a system-centric AI approach. deepagents does not introduce a new, specialized model; by default, it uses an off-the-shelf LLM from Anthropic’s Claude Sonnet family. Its way of solving complex problems comes from carefully designed components external to the model: an extensive system prompt, planning tools, a sub-agent architecture, and a virtual file system.

The Four Pillars of the deepagents Architecture

deepagents is the result of analyzing existing advanced agent systems such as Claude Code and Manus, which (more or less, but with each passing day more and more 😁) successfully handle complex tasks. Harrison Chase, LangChain’s co-founder, identified four common features that account for their effectiveness.

Prime Directive: System Prompt Engineering

The foundation on which every “deep agent” rests is an exceptionally detailed system prompt. This is not a simple one-sentence instruction, but a comprehensive “operations manual” that precisely defines the agent’s identity, operating principles, and constraints. In deepagents, this element is treated with the utmost seriousness, which is reflected in the built-in prompt, heavily inspired by the reconstructed prompts of the “Claude Code” system.

The analysis of this prompt’s structure reveals that it is much more than just an instruction. It contains detailed guidelines for the response format, enforces a methodical approach to problem-solving (e.g., through “step-by-step” reasoning), and most importantly, includes thorough API documentation for the built-in tools, sub-agents, and virtual file system. The agent is instructed not only on what to do, but also on how to use the resources available to it.

The prompt is modular and consists of several sections:

- General instructions: Define the agent’s role and overarching goal.

- Tool descriptions: Provide detailed documentation for each available tool, its parameters, and the expected call format.

- Thinking and action format: Impose a response structure, e.g., requiring the agent to first present its plan within <thinking> tags, and only then call a tool within <tool_code> tags.

- Custom instructions: A space where the user can inject their own task-specific directives.
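To show what “modular” can mean in practice, here is a minimal sketch that assembles a prompt from the four sections listed above. Everything in it (the `ToolDoc` class, `render_tool`, `build_system_prompt`, and the section wording) is hypothetical and written for this article; it is not the prompt or the code shipped with deepagents.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ToolDoc:
    """Hypothetical description of one tool, rendered into the prompt."""
    name: str
    description: str
    parameters: dict[str, str]  # parameter name -> short explanation


def render_tool(doc: ToolDoc) -> str:
    """Render one tool as a small documentation block."""
    params = "\n".join(f"  - {name}: {text}" for name, text in doc.parameters.items())
    return f"### {doc.name}\n{doc.description}\nParameters:\n{params}"


def build_system_prompt(role: str, tools: list[ToolDoc], fmt: str, custom: str = "") -> str:
    """Join the sections in a fixed order; empty sections are skipped."""
    sections = [
        ("General instructions", role),
        ("Tool descriptions", "\n\n".join(render_tool(t) for t in tools)),
        ("Thinking and action format", fmt),
        ("Custom instructions", custom),
    ]
    return "\n\n".join(f"## {title}\n{body}" for title, body in sections if body)


prompt = build_system_prompt(
    role="You are a careful research assistant.",
    tools=[
        ToolDoc(
            name="write_file",
            description="Create a file or overwrite an existing one.",
            parameters={"path": "file name", "content": "full text to store"},
        )
    ],
    fmt="State your plan first, then call exactly one tool.",
    custom="Cite every source you rely on.",
)
print(prompt)
```

Keeping each section as a separate input is what makes the prompt reviewable and versionable like any other specification: a change to the tool documentation shows up as a small, isolated diff.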

The system prompt in deepagents serves as a versioned specification for agent behavior. Standard agents often fail because their instructions are too general, leaving too much room for interpretation by the model. deepagents counters this by treating the prompt like a precise technical specification. It even includes usage examples (few-shot examples).

If you think about it, in this sense, prompt engineering becomes a form of programming, where the language is precise natural language, and the interpreter is the LLM. The precision and level of detail in this “code” directly translate into the reliability, predictability, and ultimate effectiveness of the entire system.

Planning as a Context Anchor: The No-Op Tool Pattern

One of the most subtle yet fascinating patterns implemented in deepagents is the use of a planning tool which, from a technical standpoint, is a no-op tool. Modeled on the TodoWrite mechanism in Claude Code, it performs no action in the external world - it does not send emails, modify files, or query APIs. Its sole purpose is to force the LLM to externalize its action plan and record it in the context window.

Standard LLMs have a limited capacity for long-term “in-head” planning. The ReAct loop, focusing on a single “reason–act” step at a time, encourages tactical rather than strategic thinking. After a few iterations, the original goal may blur, and the agent begins to “drift,” losing context. The planning tool solves this problem elegantly. By invoking the no-op tool, the agent records its plan (e.g., as a to-do list), which becomes part of the conversation history and thus a persistent element of context that the model can reference in subsequent steps (without needing to “re-extract” it from scratch). It acts as a so-called context anchor, stabilizing the agent’s long-term strategy and continually reminding it of the overarching goal and next steps.

This pattern is an example of using the tool-calling mechanism to manipulate the agent’s internal cognitive state, rather than merely to interact with the external world. Instead of relying on the model’s fragile internal memory to keep track of its plan, the plan is “externalized” and becomes part of the observable environment (the conversation history). In this way, the LLM effectively “cheats” its own limitations, creating an external memory for its strategy, which supports the execution of complex, multi-step tasks.
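The pattern is easier to see in code than in prose. Below is a minimal sketch, assuming a plain list of message dictionaries as the conversation history. The `Todo` type, the `write_todos` function, and the message format are my own illustrative names, not the library’s actual implementation.

```python
from typing import Literal, TypedDict


class Todo(TypedDict):
    content: str
    status: Literal["pending", "in_progress", "completed"]


def write_todos(todos: list[Todo]) -> str:
    """No-op planning tool: it touches nothing outside the conversation.

    The return value is the whole point. It is appended to the message
    history as a tool result, so the plan stays visible on later turns.
    """
    marks = {"pending": "[ ]", "in_progress": "[~]", "completed": "[x]"}
    lines = [f"{marks[t['status']]} {t['content']}" for t in todos]
    return "Current plan:\n" + "\n".join(lines)


# Hypothetical message history; a real framework would manage this list.
history: list[dict[str, str]] = [
    {"role": "user", "content": "Compare three vector databases."},
]

plan: list[Todo] = [
    {"content": "List candidate databases", "status": "completed"},
    {"content": "Collect benchmark numbers", "status": "in_progress"},
    {"content": "Write the comparison", "status": "pending"},
]

# The "tool call" only adds text to the context; no external side effects.
history.append({"role": "tool", "content": write_todos(plan)})
print(history[-1]["content"])
```

Each time the agent updates the list, the latest version lands at the end of the history, which is exactly where the model pays the most attention.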

By understanding the limitations of LLM architecture, the project provides a mechanism to work around them.

As task complexity increases, a single agent encounters a scalability barrier for two key reasons, both tied to the limited context window of a language model. First, its context window fills up with “information noise” - results of previous actions that are no longer relevant - making it harder for the model to locate critical data and leading to goal drift. Second, the large number of available tools complicates the decision-making process, increasing the risk of selecting a suboptimal tool. deepagents addresses this problem through its built-in ability to create and delegate tasks to specialized sub-agents.

This architectural pattern brings two fundamental benefits, directly borrowed from proven principles of classical software engineering.

In case anyone was still wondering whether such principles would be needed in the “AI era.”

First, there is Context Quarantine. When the main agent encounters a subproblem (e.g., “conduct detailed competitor research”), instead of solving it itself and “polluting” its main context window with dozens of search results and analyses, it can spawn a sub-agent dedicated to this task. The sub-agent operates in its own isolated context, focusing solely on the assigned goal. Once finished, it returns only the condensed, final result to the main agent. This keeps the primary context clean and focused on the strategic level of the task.
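A toy version of this quarantine, assuming message lists as contexts, might look like the sketch below. The `run_subagent` and `fake_search` functions are hypothetical, and the “summary” is a simple string standing in for what would normally be an LLM call.

```python
from typing import Callable

Message = dict[str, str]


def run_subagent(task: str, search: Callable[[str], list[str]]) -> str:
    """Run a sub-task in a private message list and return only a summary."""
    private_context: list[Message] = [{"role": "user", "content": task}]
    for hit in search(task):
        # Raw results pile up here, not in the parent's history.
        private_context.append({"role": "tool", "content": hit})
    findings = [m["content"] for m in private_context if m["role"] == "tool"]
    top = findings[0] if findings else "none"
    # Stand-in for an LLM summarisation step: keep a count and the first hit.
    return f"{len(findings)} sources reviewed; top finding: {top}"


def fake_search(query: str) -> list[str]:
    """Hypothetical search tool returning canned results."""
    return [f"result {i} for '{query}'" for i in range(1, 21)]


main_context: list[Message] = [{"role": "user", "content": "Plan our product launch."}]
summary = run_subagent("competitor research", fake_search)
main_context.append({"role": "tool", "content": summary})

print(len(main_context))  # 2: the 20 raw results never reached the main context
print(summary)
```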

Second, there is specialization and modularization.

deepagents allows defining custom sub-agents, each with its own unique system prompt and specialized set of tools. In this way, one can create a “team of experts”: one sub-agent for research (with access to search tools), another for code writing and refactoring (with access to the file system and linter), and yet another for data analysis (with access to analytics libraries). The main agent then acts as a project manager, decomposing the problem and delegating tasks to the right specialists.

The implementation of this pattern is simple and declarative. A developer defines the sub-agents as a list of dictionaries, where each dictionary describes one sub-agent by its name, description (for the main agent), prompt, and optionally a list of tools.
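A minimal sketch of such a declaration is shown below. The four keys follow the description above; the concrete names and prompts, the `SubAgent` type, and the `describe_for_main_agent` helper are hypothetical, and tools are referenced by name only to keep the example free of dependencies.

```python
from typing import TypedDict


class _RequiredKeys(TypedDict):
    name: str
    description: str  # what the main agent reads when choosing a delegate
    prompt: str       # the sub-agent's own system prompt


class SubAgent(_RequiredKeys, total=False):
    tools: list[str]  # optional list of tool names


subagents: list[SubAgent] = [
    {
        "name": "researcher",
        "description": "Answers in-depth research questions using web search.",
        "prompt": "You are a meticulous researcher. Cite every source.",
        "tools": ["internet_search"],
    },
    {
        "name": "critic",
        "description": "Reviews a draft report and lists concrete problems.",
        "prompt": "You are a strict editor. Be specific and brief.",
        # No "tools" key here: the list is optional.
    },
]


def describe_for_main_agent(agents: list[SubAgent]) -> str:
    """Render the catalogue the main agent would see when delegating."""
    return "\n".join(f"- {a['name']}: {a['description']}" for a in agents)


print(describe_for_main_agent(subagents))
```

In the library itself, a list like this is handed to the agent constructor; check the project’s README for the exact parameter names in the version you use.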

The sub-agent architecture in deepagents is therefore a direct translation of the good old Single Responsibility Principle from SOLID into the domain of agent systems.

The Single Responsibility Principle's Worst Nightmare

Virtual File System

The last of the four pillars is a mechanism that allows the agent to persistently save and read information that does not fit into the context window or must be shared between different steps or different agents. deepagents implements this as a virtual file system. The key architectural decision here is that this is not an interaction with the computer’s physical file system. Instead, it is a virtual structure implemented as a dictionary (dict) within the LangGraph state object.

This mechanism serves two key roles. First, it acts as a short-term working memory (“scratchpad”) for a single agent. The agent can write notes, intermediate results, code fragments, or long texts into “files,” so it can return to them later without having to keep them in the active context window.

Second, it functions as a medium for asynchronous collaboration between the main agent and its sub-agents. The main agent can write input data for a sub-agent into a file, and after completing the task, the sub-agent can write its result into another file.

To enable interaction with this virtual space, deepagents provides a set of built-in tools that mimic standard system commands:

- ls: Lists the “files” in the virtual file system.

- read_file: Reads the contents of a “file.”

- write_file: Creates a new “file” or overwrites an existing one.

- edit_file: Modifies the contents of an existing “file.”

The implementation of the file system as part of the managed graph state, rather than as an interaction with an external, uncontrolled resource, is a brilliantly simple solution that ensures statelessness and scalability of agents. Since the entire state of the agent — including its “file system” — is explicitly managed by LangGraph, it is possible to run multiple instances of the same agent in parallel on the same machine without the risk of write conflicts or concurrency issues. Each agent invocation is fully hermetic, which in theory should simplify deployment (ahem, serverless), testing, and scaling of systems based on deepagents.

The user can easily initialize an agent with a predefined file system state and read its final state after the work is completed.
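To make the mechanics concrete, here is a minimal sketch of a dictionary-backed file system that lives entirely in a state object. The four function names mirror the tools listed above, but the signatures, the `State` type, and the error handling are my assumptions rather than the library’s code.

```python
from typing import TypedDict


class State(TypedDict):
    files: dict[str, str]  # path -> contents; lives in the agent state, not on disk


def ls(state: State) -> list[str]:
    """List the names of all virtual files."""
    return sorted(state["files"])


def read_file(state: State, path: str) -> str:
    """Return the contents, or an error message the model can react to."""
    if path not in state["files"]:
        return f"Error: file '{path}' not found"
    return state["files"][path]


def write_file(state: State, path: str, content: str) -> State:
    """Return a new state; the input state is left untouched."""
    return {"files": {**state["files"], path: content}}


def edit_file(state: State, path: str, old: str, new: str) -> State:
    """Replace the first occurrence of `old` in an existing file."""
    current = state["files"][path]
    if old not in current:
        raise ValueError(f"'{old}' not found in {path}")
    return write_file(state, path, current.replace(old, new, 1))


# Seed the "file system" before the run, inspect it afterwards.
initial: State = {"files": {"brief.md": "Audience: developers"}}
state = write_file(initial, "notes.md", "TODO: collect sources")
state = edit_file(state, "notes.md", "TODO", "DONE")

print(ls(state))                     # ['brief.md', 'notes.md']
print(read_file(state, "notes.md"))  # DONE: collect sources
print(ls(initial))                   # ['brief.md']
```

Because every operation returns a fresh state instead of touching the disk, two runs started from the same `initial` value cannot interfere with each other, which is the isolation property described above.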

And under the hood - LangGraph!

But understanding the four pillars of deepagents - the ones “borrowed” from Claude - is only half the story.

To fully appreciate the elegance of this project, we need to drop down a level of abstraction and look at its foundation: the LangGraph library. This is what enables reliable and controlled orchestration of the patterns described in the previous sections.

Why LangGraph and not LangChain?

From LangChain to LangGraph

For those who remember the early days of the LLM craze, LangChain revolutionized how we build LLM applications by introducing the concept of Chains—sequences of language model calls and tool invocations. Chains are well-suited for modeling processes that can be represented as directed acyclic graphs (DAGs), where the flow of information is largely linear.

However, the nature of an agent’s work is fundamentally different: it is cyclical. What distinguishes an agent from a mere “LLM application” is that it repeatedly executes a loop: reasoning → choosing an action → executing the action → observing the result → returning to reasoning with new information.
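Stripped of all framework code, that loop fits in a dozen lines. The sketch below uses a scripted function in place of a real LLM and a single calculator tool; `scripted_model`, `TOOLS`, and `run_agent` are hypothetical names chosen for illustration.

```python
from typing import Callable

Message = dict[str, str]


def scripted_model(history: list[Message]) -> dict[str, str]:
    """Stand-in for an LLM: call the calculator once, then answer."""
    if not any(m["role"] == "tool" for m in history):
        return {"type": "tool_call", "tool": "add", "args": "2,3"}
    return {"type": "final", "content": f"The sum is {history[-1]['content']}."}


TOOLS: dict[str, Callable[[str], str]] = {
    "add": lambda args: str(sum(int(x) for x in args.split(","))),
}


def run_agent(question: str, max_steps: int = 5) -> str:
    history: list[Message] = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        decision = scripted_model(history)  # reason and choose an action
        if decision["type"] == "final":
            return decision["content"]      # leave the loop with an answer
        observation = TOOLS[decision["tool"]](decision["args"])   # act
        history.append({"role": "tool", "content": observation})  # observe
    raise RuntimeError("step budget exhausted")


print(run_agent("What is 2 + 3?"))  # The sum is 5.
```

The `max_steps` guard matters more than it looks: a cycle with no exit condition other than the model’s own judgment is the easiest way to burn through a token budget.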

Early attempts to implement this cyclical logic with LangChain’s agent mechanisms (and trust me, there were such attempts) ran into trouble. The control logic was often hidden inside complex prompts, and developers had limited visibility into the execution flow - making debugging and reliability assurance difficult.

This is why the LangChain team created LangGraph: a new framework designed from the ground up to model cyclical, stateful graphs, perfectly capturing the agent’s loop. Instead of burying the control logic, LangGraph forces developers to explicitly define it as nodes and edges, providing full transparency and control.

State Management in StateGraph

The central concept in LangGraph is the StateGraph, a graph whose nodes operate on a shared state object. In deepagents, this state—AgentState—is far richer than just a conversation history. It is a comprehensive object aggregating all the key information the agent needs to function:

- Messages: A list of all interactions (human, AI, and tool outputs)

- File system: A dictionary representing the agent’s virtual file system, as mentioned in the previous section

- Task list: The agent’s plan, broken down into pending, in-progress, and completed tasks

Each node in the graph, representing a step in the agent’s logic, receives the current state, reads what it needs, and returns an update that LangGraph merges back into the shared state before passing it to the next node.
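The idea of nodes returning updates to a shared state can be imitated in plain Python. The sketch below is not LangGraph’s API: `merge`, `plan_node`, and `write_node` are hypothetical, and the merge rule (append to `messages`, overwrite everything else) is a simplification I chose for the example.

```python
from typing import Any, Callable

State = dict[str, Any]
Node = Callable[[State], State]


def merge(state: State, update: State) -> State:
    """Apply a node's partial update: append to `messages`, overwrite the rest."""
    merged = dict(state)
    for key, value in update.items():
        if key == "messages":
            merged[key] = state.get(key, []) + value
        else:
            merged[key] = value
    return merged


def plan_node(state: State) -> State:
    """Only touches the task list."""
    return {"todos": [{"content": "draft summary", "status": "pending"}]}


def write_node(state: State) -> State:
    """Touches all three parts of the state described above."""
    return {
        "messages": [{"role": "ai", "content": "Summary drafted."}],
        "files": {**state.get("files", {}), "summary.md": "..."},
        "todos": [{**t, "status": "completed"} for t in state["todos"]],
    }


state: State = {"messages": [{"role": "human", "content": "Summarise the report."}]}
nodes: tuple[Node, ...] = (plan_node, write_node)
for node in nodes:
    state = merge(state, node(state))

print(len(state["messages"]))       # 2
print(state["todos"][0]["status"])  # completed
print(sorted(state["files"]))       # ['summary.md']
```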

What’s more, LangGraph provides built-in persistence mechanisms, called checkpointers, which can automatically save the graph’s state after each step. This allows the agent to resume from the last saved point in case of an error—a feature absolutely crucial for long-running, reliable systems. Restarting the entire agentic flow from scratch every time an error occurs would not only be costly but also highly inefficient in terms of time.
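Here is a small simulation of what checkpointing buys you, assuming a pipeline of steps and an in-memory store keyed by a thread id. It imitates the idea only; the `checkpoints` dictionary and the `run` function are hypothetical and unrelated to LangGraph’s actual checkpointer classes.

```python
from typing import Any, Callable

State = dict[str, Any]
Step = Callable[[State], State]

# thread id -> (index of the next step to run, state saved after the last step)
checkpoints: dict[str, tuple[int, State]] = {}


def run(thread_id: str, steps: list[Step], initial: State) -> State:
    """Run steps in order, saving after each one; resume from the last save."""
    start, state = checkpoints.get(thread_id, (0, initial))
    for index in range(start, len(steps)):
        state = steps[index](state)
        checkpoints[thread_id] = (index + 1, state)
    return state


calls = {"fetch": 0, "flaky": 0}


def fetch(state: State) -> State:
    """Pretend this is the slow, expensive step."""
    calls["fetch"] += 1
    return {**state, "data": [1, 2, 3]}


def flaky_sum(state: State) -> State:
    """Fails on the first call only, to simulate a transient error."""
    calls["flaky"] += 1
    if calls["flaky"] == 1:
        raise TimeoutError("simulated transient failure")
    return {**state, "total": sum(state["data"])}


pipeline: list[Step] = [fetch, flaky_sum]
try:
    run("thread-1", pipeline, {})
except TimeoutError:
    pass  # the first attempt dies in step 2, after step 1 was checkpointed

result = run("thread-1", pipeline, {})
print(result["total"])  # 6
print(calls["fetch"])   # 1: the expensive first step was not repeated
```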

Flow Logic: Conditional Nodes and Edges

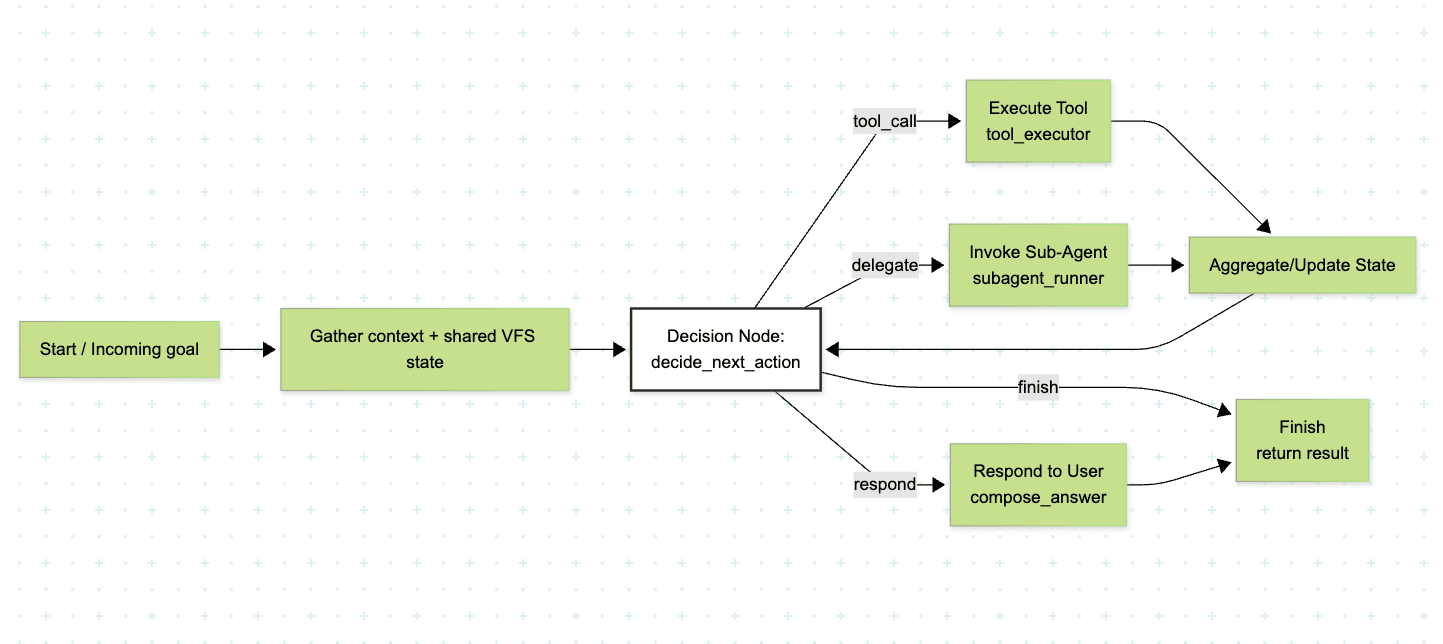

In LangGraph, control flow is defined explicitly through a graph composed of nodes and edges.

- Nodes are typically simple Python functions that perform a single “unit of work” - for example, invoking an LLM to make a decision, executing a tool chosen by the LLM, or aggregating results.

- Edges connect these nodes, defining the possible execution paths.

The real power of LangGraph lies in conditional edges. After a decision node executes (e.g., one that queried an LLM), you can define logic that routes execution to different nodes depending on the result - whether the LLM decided to call a tool, respond directly to the user, or delegate work to a sub-agent. This mechanism enables the explicit implementation of the agent’s cyclical loop and gives developers precise control over how the agent behaves in various situations.

In deepagents, this flow logic determines whether the next step should be a tool call, a sub-agent invocation, or finishing the work and returning an answer.
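The three-way branch can be sketched as a tiny hand-rolled graph runner. This is plain Python written for this article, not LangGraph code: the node functions, the `route` function, and the scripted decisions are all hypothetical stand-ins.

```python
from typing import Any, Callable

State = dict[str, Any]


def llm_node(state: State) -> State:
    """Stand-in for the model: follow a scripted list of decisions."""
    step = state["step"]
    return {**state, "decision": state["script"][step], "step": step + 1}


def tool_node(state: State) -> State:
    return {**state, "log": state["log"] + ["ran tool"]}


def subagent_node(state: State) -> State:
    return {**state, "log": state["log"] + ["delegated to sub-agent"]}


def route(state: State) -> str:
    """Conditional edge: pick the next node from the model's decision."""
    return {"tool": "tools", "delegate": "subagent", "answer": "END"}[state["decision"]]


NODES: dict[str, Callable[[State], State]] = {
    "llm": llm_node,
    "tools": tool_node,
    "subagent": subagent_node,
}


def run_graph(script: list[str]) -> list[str]:
    state: State = {"script": script, "step": 0, "log": []}
    current = "llm"
    while current != "END":
        state = NODES[current](state)
        # Worker nodes always loop back to the LLM; only the LLM node branches.
        current = route(state) if current == "llm" else "llm"
    return state["log"]


print(run_graph(["tool", "delegate", "answer"]))
# ['ran tool', 'delegated to sub-agent']
```

The useful property is that the routing lives in one small, testable function instead of being implied by a prompt.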

This is why deepagents + LangGraph target scenarios where control, transparency, and reliability are paramount. While frameworks like CrewAI (which we currently use) enable faster prototyping with higher-level abstractions, and AutoGen offers unmatched flexibility for experimenting with dynamic multi-agent interactions, LangGraph equips engineers to build robust, production-grade systems where every step is explicitly defined and fully observable.

Lessons Learned - What can we learn from deepagents?

I started this piece with the thought that reviewing someone else’s code is one of the best learning exercises. The same holds true for the deepagents architecture, which “distills” certain elegant, reusable solutions from Claude Code and delivers a handful of interesting techniques.

Composition over Invention

The biggest lesson from deepagents is probably this: instead of trying to build a single monolithic “superintelligent” model that can do everything, Harrison Chase demonstrates how to compose simpler, well-defined, and understandable components into a system whose emergent behavior is far richer and more powerful than the sum of its parts.

In the end, deepagents is just a thin layer on top of LangGraph—a precise prompt, a planning utility, modular sub-agents, and a virtual file system… and that’s it. None of these pieces on their own is revolutionary. But when carefully combined and orchestrated via LangGraph, they form an architecture capable of deep reasoning. The whole thing is only a few hundred lines of code (excluding the prompt)—in fact, less than this very article that describes it. And it’s exactly that small size that made me want to read and understand it in under an hour—it all just fit into my “local cache” 😁.

At the same time, the project includes a few clever techniques. For example, the create_deep_agent function works like a template: it takes a configuration (list of tools, custom instructions, definitions of sub-agents, and the chosen LLM model) and, on that basis, produces and returns a fully functional, compiled agent graph instance.

I’m genuinely curious how much the authors’ approach really helps prevent context pollution. On one hand, there’s Cognition’s stance, warning against spawning sub-agents because of complexity and drift. On the other hand, we’re seeing research pointing to context degradation and issues when agents are given access to too many tools at once. The truth is, there’s no settled playbook yet - this space is still being invented in real time. And honestly? That’s part of the charm. It’s… fascinating.

What excites me most, though, are the project’s future plans. The most important item on the roadmap is Human-in-the-Loop support - for example, to approve a critical operation or correct a wrong agent decision. LangGraph itself was designed with this kind of oversight mechanism in mind, making it easier to weave in checks and balances. From my own experience with agents, this capability isn’t just “nice to have” - it’s essential for building trust and for enabling an iterative rollout of agent maturity levels. It allows teams to gradually experiment with how much autonomy to grant the system, instead of jumping straight from 0 to 1. After all, who would want to release a fully autonomous capability on day one, without intermediate steps or user onboarding?

One final interesting observation. The deepagents project takes on an even deeper meaning when viewed through the lens of Harrison Chase’s broader vision: Ambient Agents. Unlike traditional chatbots, which react to direct user commands, ambient agents are meant to operate continuously in the background, autonomously responding to streams of events (e.g., a new email, a database update, or a monitoring alert).

When you think about it… such a vision places huge demands on agent architecture. An ambient agent must be able to handle complex, long-running tasks without constant supervision. It must be capable of planning, managing its state over time, decomposing problems, and reliably interacting with different systems.

In this light, deepagents can be seen as both intriguing research and a working prototype of the architecture needed to build future “ambient agents.” It’s a key step on the path from simple, reactive assistants to truly autonomous systems that can become integral parts of our digital workflows. It shows that the key to overcoming the “shallowness” of standard agent loops lies in the composition of four powerful architectural patterns: precise prompt engineering, externalized planning, hierarchical task decomposition, and state management via a virtual workspace. Each of these pillars is a form of Context Engineering, and their combination through the solid, stateful LangGraph engine creates an architecture that is observable, scalable, and easier to debug — at least in theory.

And, honestly, it’s cool to see in a relatively “compressed” codebase how the individual patterns from Claude Code can be implemented. That alone makes it worth taking a look.

Definitely a well-deserved GitHub star from me 😉